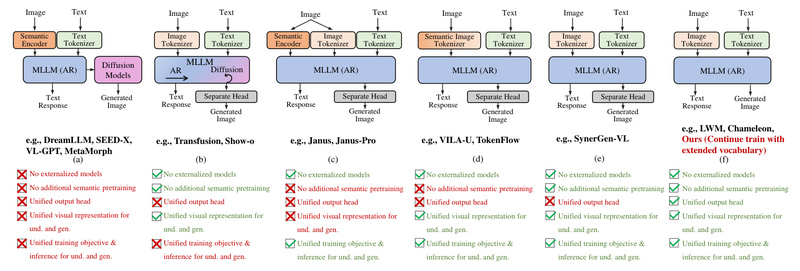

What if a single large language model (LLM) could both understand and generate high-quality images—without relying on external vision encoders like CLIP, and without sacrificing language fluency? That’s exactly what Liquid delivers. Introduced in the paper “Liquid: Language Models are Scalable and Unified Multi-modal Generators,” Liquid reimagines multimodal AI by unifying vision and language within a standard autoregressive LLM architecture. Instead of stitching together separate vision and language models, Liquid tokenizes images into discrete codes and trains them alongside text tokens in a shared embedding space—all within one model.

This approach eliminates the traditional modular pipeline (e.g., CLIP encoder + LLM decoder) that has dominated multimodal systems. The result? A simpler, more scalable architecture where visual comprehension and generation actively reinforce each other, rather than compete for capacity.

Why Liquid Changes the Game for Multimodal AI

No External Vision Models Needed

Most multimodal large language models (MLLMs) depend heavily on pretrained vision backbones like CLIP to interpret images. Liquid removes this dependency entirely. By learning its own visual token embeddings from scratch within the LLM’s vocabulary, it avoids the architectural complexity and potential misalignment between frozen visual features and language reasoning.

This is more than a simplification—it’s a strategic advantage for deployment, maintenance, and reproducibility. You no longer need to manage multiple model checkpoints or worry about compatibility between vision and language components.

Vision and Language Tasks Boost Each Other

In earlier unified models, jointly training on vision and language often led to performance interference: gains in one modality came at the cost of the other. Liquid demonstrates, for the first time, a scaling law: as model size increases (from 0.5B to 32B parameters), this interference vanishes. Larger Liquid models get better at both tasks simultaneously.

This mutual enhancement means that improving image generation quality also sharpens visual understanding—and vice versa. For practitioners, this translates to more consistent, reliable multimodal behavior without fine-tuning separate heads or adapters.

Strong Performance at a Fraction of the Cost

Liquid builds directly on existing open-source LLMs like Qwen2.5 and GEMMA2, leveraging their pre-trained language knowledge as a foundation. The authors report 100x lower training costs compared to training from scratch, while still outperforming specialized models like Chameleon in multimodal tasks.

On image generation, Liquid achieves a FID of 5.47 on MJHQ-30K, surpassing both Stable Diffusion v2.1 and SD-XL. Yet it maintains language performance on par with mainstream LLMs—making it one of the few models that truly excel in both text-only and vision-language settings.

Ideal Use Cases for Technical Decision-Makers

Liquid is particularly compelling for teams building intelligent agents or content-generation systems that require tight coupling between perception and creation. Consider these scenarios:

- AI assistants that explain and illustrate: Answer a user’s query like “How do I make baklava?” not just with text, but by generating a step-by-step visual guide—all within the same model and conversation flow.

- Unified content pipelines: Generate marketing images in diverse aspect ratios and artistic styles (e.g., “young blue dragon with horn lightning in the style of D&D fantasy”) without switching between diffusion models and captioning systems.

- Compact multimodal deployments: Deploy a single Hugging Face–compatible model instead of orchestrating CLIP, an LLM, and a diffusion model—reducing latency, memory footprint, and engineering overhead.

Because Liquid supports variable-resolution image generation in a purely autoregressive manner, it handles arbitrary output sizes naturally—something diffusion models often struggle with without retraining or post-processing.

Getting Started Is Surprisingly Simple

Despite its advanced capabilities, using Liquid requires no exotic dependencies. It’s distributed in standard Hugging Face format and runs with just the transformers library.

Quick Inference Examples

You can run three core tasks with minimal code:

-

Text-to-text dialogue:

python inference_t2t.py --model_path Junfeng5/Liquid_V1_7B --prompt "Write me a poem about Machine Learning."

-

Image understanding:

python inference_i2t.py --model_path Junfeng5/Liquid_V1_7B --image_path samples/baklava.png --prompt 'How to make this pastry?'

-

Text-to-image generation:

python inference_t2i.py --model_path Junfeng5/Liquid_V1_7B --prompt "young blue dragon with horn lightning in the style of dd fantasy full body"

For GPUs with less than 30GB VRAM, simply add --load_8bit to enable 8-bit loading and avoid out-of-memory errors.

A local Gradio demo is also available with just two commands:

pip install gradio==4.44.1 gradio_client==1.3.0 cd evaluation && python app.py

This accessibility lowers the barrier for prototyping, evaluation, and integration into existing LLM-based workflows.

Limitations and Practical Considerations

While promising, Liquid’s current public release has a few constraints to keep in mind:

- Only the Liquid-7B-IT (instruction-tuned 7B model) is fully available today, including checkpoints, evaluation scripts, and demo code. The broader family of models (0.5B to 32B across multiple architectures) is planned but not yet released.

- Image generation is autoregressive, which can be slower than parallel diffusion methods for high-resolution outputs. However, this trade-off enables unified token-level control and arbitrary aspect ratios.

- Like all large generative models, Liquid requires sufficient GPU memory. Users on consumer-grade hardware should plan to use 8-bit quantization for smooth inference.

These are practical considerations—not roadblocks—especially given the model’s simplicity and integration advantages.

Summary

Liquid represents a significant step toward truly unified multimodal intelligence. By collapsing vision and language into a single autoregressive LLM—without external vision encoders, without performance trade-offs at scale, and without massive training costs—it offers a compelling alternative for developers, researchers, and product teams building next-generation AI systems. Whether you’re prototyping a multimodal agent, streamlining a content pipeline, or exploring the frontiers of scalable generative models, Liquid provides a powerful, accessible, and future-proof foundation.