Autoregressive (AR) models have long dominated natural language generation, but applying the same step-by-step prediction approach to images has been challenging. Traditional AR image generators either rely on computationally heavy diffusion models or sacrifice visual fidelity through lossy vector quantization (VQ) that discretizes continuous pixel data. NextStep-1 breaks this trade-off by introducing a unified autoregressive framework that works natively with continuous image tokens, preserving the full richness of visual information while maintaining the simplicity and scalability of language modeling.

Developed by StepFun AI, NextStep-1 is a 14-billion-parameter autoregressive model paired with a lightweight 157-million-parameter flow matching head for image token generation. It jointly processes sequences of discrete text tokens and continuous image tokens using a standard next-token prediction objective—no diffusion, no VQ, no quantization artifacts. The result? State-of-the-art image quality, flexible editing capabilities, and a streamlined architecture that’s both powerful and practical for real-world deployment.

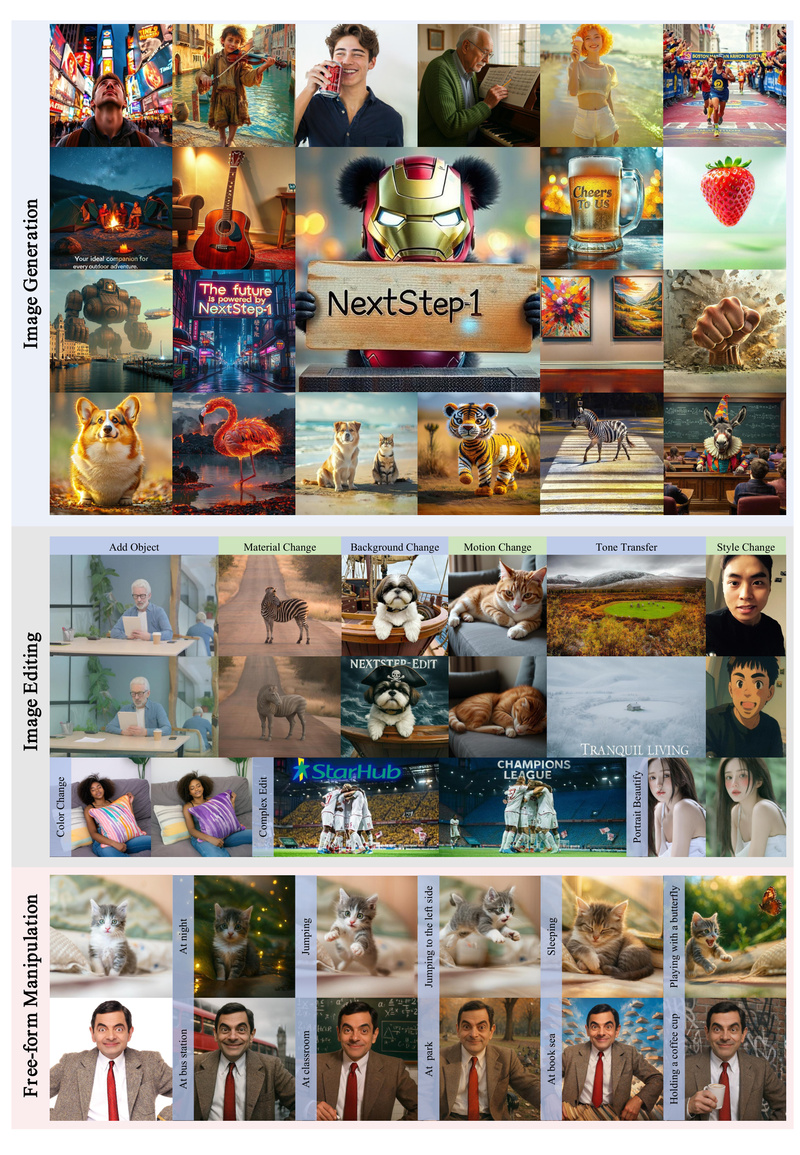

Why NextStep-1 Stands Out

Unified Next-Token Prediction for Text and Vision

Unlike hybrid approaches that stitch together separate modules for language and image generation, NextStep-1 treats both modalities within a single, coherent autoregressive sequence. Text tokens are predicted using a conventional language modeling head, while continuous image tokens are generated via a flow matching head. This design eliminates the need for complex diffusion sampling or error-prone token reconstruction, enabling faster, more stable inference without sacrificing detail.

High-Fidelity Image Synthesis

NextStep-1 achieves leading performance among autoregressive models in text-to-image benchmarks. Its ability to model continuous visual representations directly translates to sharper textures, more accurate colors, and coherent global structures—critical for applications where visual quality impacts user trust or brand perception.

Native Support for Image Editing

Beyond generation, NextStep-1 includes an editing-specialized variant (NextStep-1-Large-Edit) that allows precise modifications to existing images. You can add objects (“Add a pirate hat to the dog’s head”), change backgrounds (“stormy sea with dark clouds”), or insert stylized text—all through natural language prompts. This capability stems from the same unified architecture, demonstrating how continuous tokens enable both creation and controlled alteration within one system.

Simplicity and Scalability

By avoiding diffusion’s iterative denoising and VQ’s codebook bottlenecks, NextStep-1 offers a leaner, more scalable pipeline. Training and inference benefit from standard transformer infrastructure, and the model’s modular design (separate LM and flow heads) allows for efficient optimization. This makes it a compelling choice for teams seeking cutting-edge generative performance without excessive computational overhead.

Ideal Use Cases for Technical Decision-Makers

NextStep-1 is especially well-suited for teams building AI-powered visual applications that demand both quality and flexibility:

- Creative Content Generation: Marketing teams can generate branded visuals (e.g., “a panda wearing an Iron Man mask holding a ‘NextStep-1’ sign”) with cinematic detail, reducing reliance on stock assets or manual design.

- Rapid Prototyping: Product designers can iterate on concept art or UI mockups by describing visual elements in natural language, accelerating early-stage ideation.

- Precision Image Editing: E-commerce or media platforms can automate localized edits—changing backgrounds, adding logos, or updating seasonal themes—without full regeneration or manual Photoshop work.

Because NextStep-1 operates in a purely autoregressive fashion, it integrates cleanly into existing LLM-centric workflows, making it a natural extension for organizations already leveraging transformer-based systems.

Getting Started in Minutes

Although training code and evaluation tooling are not yet public, StepFun AI has released pre-trained model weights and inference code via Hugging Face, enabling immediate experimentation:

- Set up the environment with Python 3.10, PyTorch 2.5.1+cu121, and required dependencies (including optional FlashAttention).

- Download model checkpoints:

stepfun-ai/NextStep-1-f8ch16-Tokenizer(the VAE tokenizer)stepfun-ai/NextStep-1-Large(text-to-image)stepfun-ai/NextStep-1-Large-Edit(image editing)

- Run inference using the provided

NextStepPipeline.

A minimal example for text-to-image generation looks like this:

from nextstep.models.pipeline_nextstep import NextStepPipeline

pipeline = NextStepPipeline( model_name_or_path="stepfun-ai/NextStep-1-Large", vae_name_or_path="/local/path/to/tokenizer" # must be absolute path

).to("cuda", torch.bfloat16)

images = pipeline.generate_image( captions="A baby panda wearing an Iron Man mask...", hw=(512, 512), num_sampling_steps=50, cfg=7.5

)

For image editing, simply pass an input image and an editing instruction (e.g., “Add a pirate hat…”) to the NextStep-1-Large-Edit pipeline.

Note: The VAE tokenizer must be loaded from a local directory—Hugging Face model identifiers won’t work directly in the current inference script. Also, disabling

torch.compile(ENABLE_TORCH_COMPILE=false) is recommended to avoid compatibility issues during initial runs.

Current Limitations and Practical Considerations

While NextStep-1 offers impressive capabilities, prospective adopters should note several constraints:

- Training and evaluation code are not yet released, limiting full reproducibility or fine-tuning. Only inference is currently supported.

- The system depends on a custom VAE tokenizer that must be downloaded separately and referenced via an absolute local path.

- Early versions of the inference code may exhibit instability with

torch.compile; workarounds (like disabling compilation) are documented but add minor friction. - Hardware requirements are non-trivial: a CUDA 12.1 environment with sufficient VRAM (likely 24GB+) is needed to run the 14B model at 512×512 resolution.

These limitations are typical for newly released research systems and are likely to be addressed as the project matures. For teams focused on inference and prototyping, however, the available assets already provide a powerful starting point.

Summary

NextStep-1 redefines what’s possible with autoregressive image generation by replacing diffusion and discrete tokens with a clean, continuous-token architecture. It delivers high-fidelity visuals, native editing support, and architectural simplicity—all within a scalable transformer framework. For technical teams evaluating generative AI solutions, it represents a compelling alternative to diffusion-heavy or quantization-based pipelines, especially when image quality, editability, and integration ease are priorities. With public model weights and working inference code already available, now is an ideal time to explore its potential in your own projects.