In today’s AI landscape, building systems that handle real-world complexity often means stitching together language models, specialized tools, APIs, and domain-specific models—a process that’s both tedious and brittle. Enter OpenAGI, an open-source platform designed to simplify this orchestration by empowering Large Language Models (LLMs) to autonomously select, invoke, and coordinate expert tools in response to natural language instructions. Inspired by how humans combine general reasoning with deep expertise, OpenAGI bridges the gap between versatile LLMs and high-performance domain models, enabling the development of capable, adaptive AI agents for multi-step, real-world tasks.

Introduced in the NeurIPS 2023 paper “OpenAGI: When LLM Meets Domain Experts,” the framework targets engineers, researchers, and product teams who want to prototype, deploy, or experiment with modular AI agents without hardcoding rigid pipelines.

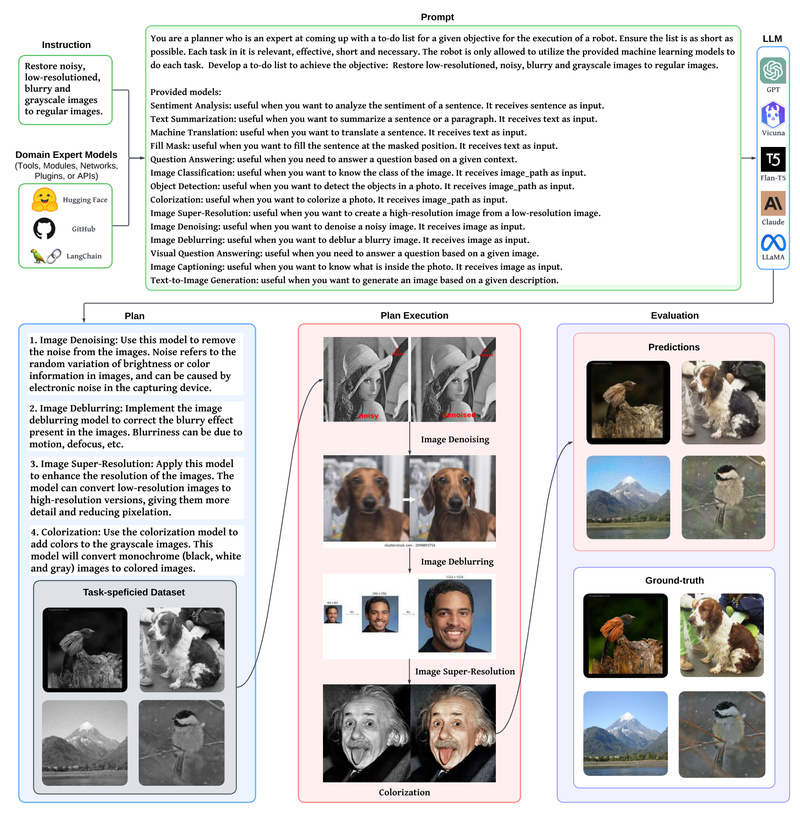

How OpenAGI Works: From Natural Language to Expert Execution

At its core, OpenAGI treats complex tasks as natural language queries. Given a prompt like “Analyze this satellite image, detect urban sprawl, and summarize trends in a report,” the LLM doesn’t try to do everything itself. Instead, it reasons about which external models or tools are needed—say, an image segmentation model, a geospatial analysis API, and a text summarization module—and dynamically chains them together.

This approach mirrors human problem-solving: we rarely rely on a single skill. OpenAGI brings that same compositional intelligence to AI agents by providing a structured yet flexible runtime environment where LLMs act as “task planners” and domain experts handle execution.
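To make the planner/expert split concrete, here is a minimal sketch of the idea. It is not OpenAGI's actual API: the `plan` function is a keyword-matching stand-in for the LLM, and the three expert functions, the `EXPERTS` table, and `execute` are hypothetical names invented for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for expert models. Real experts would wrap
# vision, geospatial, or summarization models behind the same signature.
def segment_image(data: str) -> str:
    return f"segments({data})"

def analyze_sprawl(data: str) -> str:
    return f"sprawl_stats({data})"

def summarize(data: str) -> str:
    return f"summary({data})"

EXPERTS: Dict[str, Callable[[str], str]] = {
    "segment_image": segment_image,
    "analyze_sprawl": analyze_sprawl,
    "summarize": summarize,
}

def plan(task: str) -> List[str]:
    """Stand-in for the LLM planner: map keywords in the task to an ordered tool list."""
    keyword_to_tool = [
        ("image", "segment_image"),
        ("sprawl", "analyze_sprawl"),
        ("summarize", "summarize"),
    ]
    lowered = task.lower()
    return [tool for keyword, tool in keyword_to_tool if keyword in lowered]

def execute(task: str, initial_input: str) -> str:
    """Run each planned expert in order, feeding each output into the next step."""
    result = initial_input
    for tool_name in plan(task):
        result = EXPERTS[tool_name](result)
    return result

if __name__ == "__main__":
    task = ("Analyze this satellite image, detect urban sprawl, "
            "and summarize trends in a report")
    print(plan(task))
    print(execute(task, "satellite.png"))
```

In a real system the planner would be an LLM call that returns the tool sequence, but the execution loop keeps the same shape: the plan is data, not hardcoded control flow.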

Reinforcement Learning from Task Feedback (RLTF)

One of OpenAGI’s most innovative features is its built-in Reinforcement Learning from Task Feedback (RLTF) mechanism. Rather than relying solely on static instruction tuning, OpenAGI uses actual task outcomes—success, partial success, or failure—as signals to refine the LLM’s planning behavior over time. This creates a self-improving loop: the more the agent attempts real tasks, the better it becomes at tool selection and sequencing.

While still research-grade, RLTF represents a practical step toward agents that learn from experience rather than just pretraining data—a critical requirement for robustness in open-world environments.
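The feedback loop can be illustrated with a toy example. The sketch below is not the RLTF algorithm from the paper. It swaps in a simple policy-gradient (REINFORCE) update over a fixed set of candidate plans, and the plan names, reward values, and hyperparameters are all assumptions chosen for illustration.

```python
import math
import random
from typing import Dict, List

# Hypothetical candidate plans with assumed task feedback:
# 1.0 = success, 0.5 = partial success, 0.0 = failure.
PLANS: List[str] = [
    "segment->analyze->summarize",
    "segment->summarize",
    "summarize",
]
FEEDBACK: Dict[str, float] = {
    "segment->analyze->summarize": 1.0,
    "segment->summarize": 0.5,
    "summarize": 0.0,
}

def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over plan preferences."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(plans: List[str], steps: int = 2000, lr: float = 0.1,
          seed: int = 0) -> Dict[str, float]:
    """Sample a plan, observe its task feedback, and nudge the policy toward better plans."""
    rng = random.Random(seed)
    prefs = [0.0] * len(plans)
    baseline = 0.0  # running mean of observed rewards
    for step in range(1, steps + 1):
        probs = softmax(prefs)
        choice = rng.choices(range(len(plans)), weights=probs)[0]
        reward = FEEDBACK[plans[choice]]
        advantage = reward - baseline
        # Gradient of log pi(choice) w.r.t. pref_i is 1[i == choice] - probs[i].
        for i in range(len(plans)):
            indicator = 1.0 if i == choice else 0.0
            prefs[i] += lr * advantage * (indicator - probs[i])
        baseline += (reward - baseline) / step
    return dict(zip(plans, softmax(prefs)))

if __name__ == "__main__":
    for plan_name, prob in train(PLANS).items():
        print(f"{plan_name}: {prob:.3f}")
```

The point of the sketch is the shape of the loop: attempt, observe the outcome, update the planning policy. A real setup would update LLM parameters rather than a three-entry preference table.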

Practical Use Cases Where OpenAGI Excels

OpenAGI is particularly well-suited for scenarios that demand multi-modal or multi-tool coordination, such as:

- Scientific workflows: Combine literature search (via retrieval APIs), data visualization (via code generation), and domain modeling (e.g., climate or biomedical simulators).

- Enterprise automation: Automate customer support by chaining document understanding, database querying, and response generation.

- Research prototyping: Rapidly test hypotheses about agent architectures by swapping in different expert models without rewriting orchestration logic.

Because tasks are defined in natural language and tool integration is modular, teams can iterate quickly—adding new capabilities just by registering additional expert models or APIs.
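One way to picture “registering” a new capability is a small registry that maps tool names to callables and descriptions. The `ExpertRegistry` class, its methods, and the two example experts below are hypothetical and are not part of the pyopenagi package. They only sketch how adding a tool can leave the orchestration code untouched.

```python
from typing import Callable, Dict

class ExpertRegistry:
    """Hypothetical registry mapping tool names to callables and descriptions."""

    def __init__(self) -> None:
        self._experts: Dict[str, Callable[[str], str]] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name: str, description: str):
        """Decorator that adds an expert under a unique name."""
        def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
            if name in self._experts:
                raise ValueError(f"expert already registered: {name}")
            self._experts[name] = fn
            self._descriptions[name] = description
            return fn
        return decorator

    def describe(self) -> str:
        """Render a tool list that could be placed in a planner prompt."""
        return "\n".join(
            f"- {name}: {desc}"
            for name, desc in sorted(self._descriptions.items())
        )

    def call(self, name: str, payload: str) -> str:
        """Invoke a registered expert by name."""
        return self._experts[name](payload)

registry = ExpertRegistry()

@registry.register("summarize", "Return the first sentence of a text")
def summarize(text: str) -> str:
    return text.split(".")[0].strip() + "."

@registry.register("word_count", "Count whitespace-separated words")
def word_count(text: str) -> str:
    return str(len(text.split()))

if __name__ == "__main__":
    print(registry.describe())
    print(registry.call("summarize", "OpenAGI chains experts. It is modular."))
```

Adding a third expert is one more decorated function. Neither `describe` nor `call` changes.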

Getting Started: A Developer-Centric Design

OpenAGI prioritizes ease of contribution and reuse. Installation is straightforward:

pip install pyopenagi

To create a new agent, developers follow a clear convention:

- Place agent code in pyopenagi/agents/author_name/agent_name/

- Include three key files:

  - agent.py: Implements the agent’s execution logic

  - config.json: Defines parameters and metadata

  - meta_requirements.txt: Lists Python dependencies
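The layout above can be scaffolded with a short script. This is a sketch under assumptions: `scaffold_agent` is a hypothetical helper, the file contents are empty placeholders, and the `config.json` keys shown are illustrative only, since the schema OpenAGI expects is not described here.

```python
import json
import tempfile
from pathlib import Path

def scaffold_agent(root: Path, author: str, agent: str) -> Path:
    """Create pyopenagi/agents/<author>/<agent>/ with the three conventional files."""
    agent_dir = root / "pyopenagi" / "agents" / author / agent
    agent_dir.mkdir(parents=True, exist_ok=True)

    # Placeholder only; the real agent class goes here.
    (agent_dir / "agent.py").write_text(
        "# Agent execution logic goes here.\n", encoding="utf-8"
    )

    # Assumed keys for illustration, not the schema OpenAGI requires.
    config = {"name": agent, "author": author}
    (agent_dir / "config.json").write_text(
        json.dumps(config, indent=2) + "\n", encoding="utf-8"
    )

    # One dependency per line, in pip requirements format.
    (agent_dir / "meta_requirements.txt").write_text("", encoding="utf-8")
    return agent_dir

if __name__ == "__main__":
    # Demo in a throwaway directory so nothing is written to a real checkout.
    with tempfile.TemporaryDirectory() as tmp:
        created = scaffold_agent(Path(tmp), "author_name", "agent_name")
        for path in sorted(created.iterdir()):
            print(path.relative_to(tmp))
```

Check an existing agent in the repository for the actual `config.json` fields before uploading anything built from this skeleton.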

This standardized structure makes agents portable, shareable, and composable. The platform even includes CLI commands to upload your agent to the community registry or download others’ implementations for inspection or reuse:

python pyopenagi/agents/interact.py --mode upload --agent your_name/your_agent

python pyopenagi/agents/interact.py --mode download --agent community_author/some_agent

This collaborative model lowers the barrier to building advanced agents—researchers and developers can stand on the shoulders of others’ work rather than starting from scratch.

Limitations and Considerations

While promising, OpenAGI is not a plug-and-play solution for all AI problems. Several practical considerations apply:

- Dependency management: Each agent may require specific external models, APIs, or hardware (e.g., GPU for vision models). Setup can be nontrivial for complex toolchains.

- Platform evolution: The GitHub README notes that for integration with AIOS, users should now migrate to Cerebrum, a newer SDK. This suggests OpenAGI may be transitioning toward a research-focused reference implementation rather than a long-term production framework.

- Expert model quality: The system’s performance hinges on the availability and reliability of domain-specific models. Poor tool outputs will degrade overall task success, regardless of LLM reasoning quality.

That said, these limitations are common in early-stage agent frameworks—and OpenAGI’s open, modular design makes it easier to address them incrementally.

Why OpenAGI Fits Modern AI Development Needs

Traditional AI pipelines often hardcode tool sequences, making them inflexible and hard to maintain. OpenAGI flips this model: instead of baking logic into code, it lets the LLM reason at runtime about how to solve a task using available experts. This shift offers several advantages:

- Reduced orchestration overhead: No need to manually define every possible workflow.

- Future-proof extensibility: New tools can be added without modifying core agent logic.

- Community-driven innovation: Shared agents accelerate collective progress.

- Research transparency: Full access to code, benchmarks, and datasets supports reproducibility—a rarity in proprietary agent platforms.

For teams exploring the frontier of LLM-powered automation, OpenAGI provides both a practical toolkit and a conceptual blueprint for how general and specialized intelligence can coexist.

Summary

OpenAGI stands out as a thoughtfully engineered platform that marries the broad reasoning of LLMs with the precision of domain experts. By enabling natural language tasking, dynamic tool composition, and feedback-driven learning, it addresses key pain points in building real-world AI agents: rigidity, maintenance cost, and limited adaptability. While still evolving, its open-source nature, clear developer workflow, and research-backed foundation make it a compelling choice for anyone prototyping or studying next-generation AI systems.

If your project involves multi-step reasoning across diverse tools—or if you’re simply exploring how LLMs can go beyond chat—OpenAGI offers a structured, community-supported path forward.