In today’s fast-paced software landscape, developers are under constant pressure to write, test, debug, and deploy code faster than ever—often while juggling context-switching, legacy systems, and ambiguous requirements. What if you could delegate parts of this workflow to an AI agent that behaves like a skilled junior engineer: capable of writing code, running shell commands, browsing documentation, and iteratively refining solutions based on real-world feedback?

That is what OpenHands (formerly OpenDevin) sets out to provide. OpenHands is an open, extensible platform that enables generalist AI agents to act as autonomous software developers. Built on large language models (LLMs), it lets agents interact with real development environments, in a sandboxed and controlled way, through code, terminals, and web browsers. With its core released under the permissive MIT license and backed by a community of more than 188 contributors, OpenHands bridges the gap between experimental AI agents and practical software engineering workflows.

Unlike coding assistants confined to an IDE panel or a chat window, OpenHands gives agents direct access to a working environment: they can clone repositories, run tests, inspect outputs, and even open pull requests, all while running inside sandboxed environments that limit the damage a mistaken action can do.

Core Capabilities That Mirror Real Developer Workflows

Agents That Think and Act Like Engineers

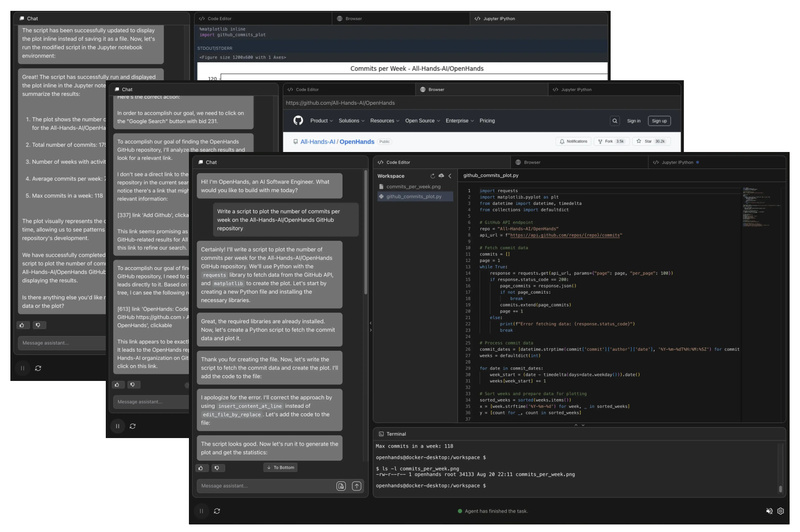

OpenHands agents don’t just generate code snippets—they execute and verify them. Using a composable Python SDK, developers can define custom agent behaviors that combine LLM reasoning with real-world tool use: editing files, invoking build scripts, parsing logs, or navigating web-based documentation. This “act-and-observe” loop mimics how human developers debug and refine software, enabling agents to handle complex, multi-step tasks that static code generators cannot.
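To make the act-and-observe idea concrete, here is a minimal, framework-free sketch of such a loop. It is not the OpenHands SDK: `act_and_observe`, `History`, `Observation`, and `scripted_policy` are hypothetical names, and `scripted_policy` stands in for the LLM call a real agent would make. The only real "tool" is a shell command executed with `subprocess`. What the sketch shows is the shape of the loop: decide on an action, execute it, record the observation, and let the next decision depend on what happened.

```python
import subprocess
import sys
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Observation:
    command: str
    exit_code: int
    output: str


@dataclass
class History:
    task: str
    observations: list[Observation] = field(default_factory=list)


# A policy maps the history so far to the next command (argv), or None when done.
# In a real agent this would be an LLM call; here it is a plain function.
Policy = Callable[[History], Optional[list[str]]]


def run_shell(argv: list[str], timeout: float = 30.0) -> Observation:
    """Execute one action and capture what the environment reports back."""
    proc = subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
    return Observation(" ".join(argv), proc.returncode, proc.stdout + proc.stderr)


def act_and_observe(task: str, policy: Policy, max_steps: int = 10) -> History:
    history = History(task)
    for _ in range(max_steps):
        action = policy(history)          # think: choose the next step
        if action is None:                # the policy declares the task finished
            break
        obs = run_shell(action)           # act: run it in the environment
        history.observations.append(obs)  # observe: feed the result into the next decision
    return history


def scripted_policy(history: History) -> Optional[list[str]]:
    """Hypothetical stand-in for an LLM: retry until a check succeeds."""
    if not history.observations:
        # First attempt: a check that deliberately fails.
        return [sys.executable, "-c", "import sys; sys.exit(1)"]
    if history.observations[-1].exit_code != 0:
        # React to the failure observation with a corrected attempt.
        return [sys.executable, "-c", "print('tests passed')"]
    return None  # the last action succeeded, so stop


if __name__ == "__main__":
    h = act_and_observe("make the check pass", scripted_policy)
    for obs in h.observations:
        print(obs.exit_code, obs.output.strip())
```

The `max_steps` cap matters in practice: without it, a policy that never converges would loop (and spend tokens) indefinitely.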

Flexible Entry Points for Every Workflow

Whether you’re an individual researcher, a startup CTO, or an engineering team at scale, OpenHands offers multiple interfaces:

- CLI: The fastest way to get started. Plug in your preferred LLM (GPT, Claude, or others), describe a task, and watch the agent work in your terminal. The experience is similar to terminal agents such as Claude Code, with the added flexibility of choosing your own model.

- Local GUI: A React-based single-page app with a REST backend, intended for interactive agent development on your laptop. It is well suited to visualizing agent actions, inspecting file changes, and debugging execution traces.

- OpenHands Cloud: A hosted version with collaboration features—conversation sharing, multi-user support, and integrations with Slack, Jira, and Linear. New users get $10 in free credits via GitHub login.

- Enterprise Deployment: For organizations requiring data sovereignty, OpenHands Enterprise supports self-hosting in private VPCs via Kubernetes, with role-based access control (RBAC) and extended support.

Critically, all core components (the SDK, CLI, and local GUI) are fully open source under the MIT license, so teams can inspect exactly what the agent runtime does and build on it freely.

Real-World Scenarios Where OpenHands Delivers Value

OpenHands is most useful for work that is repetitive or multi-step but still well defined, precisely the kind of work that bogs down human developers:

- Automating Repetitive Engineering Tasks: From updating dependency versions across microservices to generating boilerplate for new API endpoints, agents can handle tedious, well-scoped chores with minimal supervision, leaving humans to review the resulting changes.

- Onboarding and Knowledge Transfer: New team members can ask an OpenHands agent to explain codebases by having it analyze repository structure, run examples, and summarize key patterns—reducing ramp-up time.

- Agent-Based Testing and Validation: Define a task (e.g., “resolve this SWE-Bench issue so its failing tests pass”), let the agent propose and validate fixes, and collect metrics on success rates. This is useful both for benchmarking agents and for wiring them into continuous integration pipelines.

- Multi-Agent Coordination: Break down complex projects into subtasks assigned to specialized agents (e.g., one for frontend, one for backend), enabling collaborative problem-solving without overloading a single model context.

These use cases directly address common pain points: developer burnout, slow iteration cycles, and the high cognitive load of context switching.
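The multi-agent idea can be illustrated with a small routing sketch. This is not OpenHands' own delegation mechanism; `Subtask`, `Specialist`, `dispatch`, and the rule that each specialist "owns" files under certain path prefixes are all hypothetical. The point it shows is why decomposition helps: each specialist builds a prompt from its own subtask and only the files it owns, so no single context has to hold the whole project.

```python
from dataclasses import dataclass


@dataclass
class Subtask:
    area: str          # e.g. "frontend" or "backend"
    description: str


@dataclass
class Specialist:
    """Hypothetical specialist agent that only sees files in its own area."""
    area: str
    owned_prefixes: tuple[str, ...]

    def build_context(self, subtask: Subtask, repo_files: list[str]) -> str:
        # Keep the prompt small: include only files under this specialist's prefixes.
        relevant = [f for f in repo_files if f.startswith(self.owned_prefixes)]
        return f"[{self.area}] {subtask.description}\nFiles: " + ", ".join(relevant)


def dispatch(subtasks: list[Subtask], specialists: list[Specialist],
             repo_files: list[str]) -> dict[str, list[str]]:
    """Route each subtask to the specialist for its area and collect the contexts."""
    by_area = {s.area: s for s in specialists}
    contexts: dict[str, list[str]] = {}
    for task in subtasks:
        agent = by_area.get(task.area)
        if agent is None:
            raise ValueError(f"no specialist registered for area {task.area!r}")
        contexts.setdefault(task.area, []).append(agent.build_context(task, repo_files))
    return contexts


if __name__ == "__main__":
    repo = ["web/src/App.tsx", "web/src/api.ts", "server/routes.py", "server/models.py"]
    team = [Specialist("frontend", ("web/",)), Specialist("backend", ("server/",))]
    plan = [Subtask("backend", "Add a /health endpoint"),
            Subtask("frontend", "Show service health in the header")]
    for area, prompts in dispatch(plan, team, repo).items():
        print(prompts[0])
```

A real orchestrator would also need to pass results between specialists (for example, the backend's new endpoint contract to the frontend agent); the sketch covers only the context-splitting step.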

Safety, Benchmarking, and Community Trust

OpenHands prioritizes safe execution. By default, agent actions run inside sandboxed Docker containers, which isolate them from the host system and reduce the risk of accidental or malicious modifications during agent operation, an essential property for production-grade tooling.
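For readers who want to see what container isolation means mechanically, the sketch below builds a `docker run` invocation that executes one agent command in a throwaway container. It is an illustration under stated assumptions, not how the OpenHands runtime is implemented: the helper names, the `python:3.12-slim` image, the single `/workspace` mount, and the choice to disable networking by default are all hypothetical. The flags themselves (`--rm`, `--memory`, `-v`, `-w`, `--network none`) are standard Docker CLI options.

```python
import os
import shlex
import subprocess


def sandboxed_command(workspace: str, command: str,
                      image: str = "python:3.12-slim",  # hypothetical image choice
                      allow_network: bool = False,
                      memory: str = "2g") -> list[str]:
    """Build argv for running one agent command inside a disposable container.

    Only `workspace` is mounted, so other host files are not visible inside the
    container; --rm deletes the container once the command exits.
    """
    argv = ["docker", "run", "--rm",
            "--memory", memory,                               # cap memory usage
            "-v", f"{os.path.abspath(workspace)}:/workspace",  # mount the repo only
            "-w", "/workspace"]                               # start in the repo
    if not allow_network:
        argv += ["--network", "none"]  # no network access unless explicitly allowed
    argv += [image, "sh", "-c", command]
    return argv


def run_sandboxed(workspace: str, command: str,
                  timeout: float = 120.0) -> subprocess.CompletedProcess:
    """Execute the command; requires a local Docker daemon."""
    return subprocess.run(sandboxed_command(workspace, command),
                          capture_output=True, text=True, timeout=timeout)


if __name__ == "__main__":
    # Print the command instead of running it, so this works without Docker.
    print(shlex.join(sandboxed_command("./my-repo", "pytest -q")))
```

Note that the mounted workspace is still writable, so the agent can modify the repository it was given; containers narrow the blast radius rather than eliminate it.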

The platform also integrates standardized evaluation benchmarks, including SWE-Bench (real-world GitHub issues) and WebArena (web-based tasks). Across 15+ challenging evaluation scenarios, the project publishes measured results, giving technical evaluators empirical data to compare against rather than promises.
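Benchmark scores of this kind usually reduce to a resolve rate: the fraction of task instances the agent fixes. On SWE-Bench, for instance, an instance counts as resolved when its designated tests pass after the agent's patch is applied. The sketch below aggregates per-instance outcomes and adds a Wilson score interval, a standard way to express uncertainty for a proportion; the result format (instance ID mapped to pass/fail) and the instance IDs are invented for illustration and are not real benchmark data.

```python
import math


def resolve_rate(results: dict[str, bool]) -> float:
    """Fraction of task instances the agent resolved."""
    if not results:
        raise ValueError("no results")
    return sum(results.values()) / len(results)


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a success proportion.

    Helpful when comparing agents on small benchmark subsets, where a handful
    of instances can swing the raw rate noticeably.
    """
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


if __name__ == "__main__":
    # Hypothetical per-instance outcomes; the IDs are illustrative only.
    results = {"task-001": True, "task-002": False, "task-003": True, "task-004": True}
    rate = resolve_rate(results)
    lo, hi = wilson_interval(sum(results.values()), len(results))
    print(f"resolved {rate:.0%} (95% CI {lo:.0%} to {hi:.0%})")
```

With only four instances the interval is very wide, which is a useful reminder to evaluate on enough tasks before drawing conclusions from a single rate.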

Its open development model also fosters rapid innovation: with 2.1K+ contributions and an active Slack community, users benefit from community-driven improvements in agent design, evaluation tooling, and deployment patterns.

Getting Started Without Friction

You don’t need deep AI expertise to evaluate OpenHands:

- Install the CLI via pip:

```bash
pip install openhands
```

- Configure your LLM API key (OpenAI, Anthropic, or an open-source alternative).

- Run a task:

```bash
openhands --task "Create a Flask app that returns 'Hello, OpenHands!'"
```

- Watch the agent write, test, and serve the app, all in your local environment.

Comprehensive documentation and responsive community support (via Slack) lower the barrier to experimentation, making it easy to validate OpenHands against your team’s specific needs.

Limitations and Strategic Considerations

While powerful, OpenHands isn’t a magic bullet:

- LLM Dependency: Performance and cost are tied to your chosen LLM provider. Latency and token usage can add up during long agent runs.

- Enterprise Features Are Source-Available, Not Fully Open: Features like RBAC, multi-user collaboration, and Jira/Slack integrations are visible in source code but require a commercial license for long-term use beyond a 30-day trial.

- Not a Replacement for Human Judgment: Agents excel at deterministic, well-scoped tasks but may struggle with ambiguous requirements or architectural decisions requiring deep domain insight.

These constraints are transparent and manageable—especially given the fully open core, which allows teams to audit, extend, or fork as needed.
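The cost point is easy to underestimate because an agent typically resends its growing conversation history on every step, so input tokens grow roughly quadratically with run length. The back-of-the-envelope sketch below makes that explicit. `estimate_run_cost` is a hypothetical helper, all token counts and per-million-token prices are placeholders rather than any provider's actual pricing, and it ignores prompt caching and any history truncation or summarization, both of which can lower real costs.

```python
def estimate_run_cost(steps: int,
                      prompt_tokens: int,
                      tokens_added_per_step: int,
                      output_tokens_per_step: int,
                      usd_per_m_input: float,
                      usd_per_m_output: float) -> tuple[int, int, float]:
    """Rough token and dollar estimate for one agent run.

    Assumes the full history is resent each step: step k (0-based) sends the
    initial prompt plus k steps' worth of accumulated actions and observations.
    """
    total_input = sum(prompt_tokens + k * tokens_added_per_step for k in range(steps))
    total_output = steps * output_tokens_per_step
    cost = (total_input * usd_per_m_input + total_output * usd_per_m_output) / 1_000_000
    return total_input, total_output, cost


if __name__ == "__main__":
    # Hypothetical run: 50 steps, 2k-token prompt, 1.5k tokens of new history per step.
    tin, tout, usd = estimate_run_cost(50, 2_000, 1_500, 300,
                                       usd_per_m_input=3.0, usd_per_m_output=15.0)
    print(f"{tin:,} input tokens, {tout:,} output tokens, about ${usd:.2f}")
```

With these placeholder numbers the run consumes 1,937,500 input tokens, of which the accumulated history accounts for 1,837,500; since that term scales with steps × (steps − 1) / 2, doubling the number of steps roughly quadruples it.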

Summary

OpenHands expands what is possible for AI-assisted software development by giving agents the same tools, and responsibilities, as human engineers. Its open architecture, sandboxed execution, and published results on real-world benchmarks make it a compelling choice for technical decision-makers seeking a flexible, community-backed alternative to closed, black-box coding assistants. Whether you are prototyping an AI dev tool, automating CI workflows, or exploring agent-based software engineering, OpenHands offers a low-commitment, open path to experimentation and adoption.