Imagine you need to generate a cohesive set of images—say, a film storyboard, a series of product design mockups, or…

BiRefNet: High-Resolution Binary Image Segmentation with Pixel-Perfect Detail and Cross-Task Generalization 2977

BiRefNet (Bilateral Reference Network) is a state-of-the-art deep learning model designed specifically for high-resolution dichotomous image segmentation (DIS)—a task that…

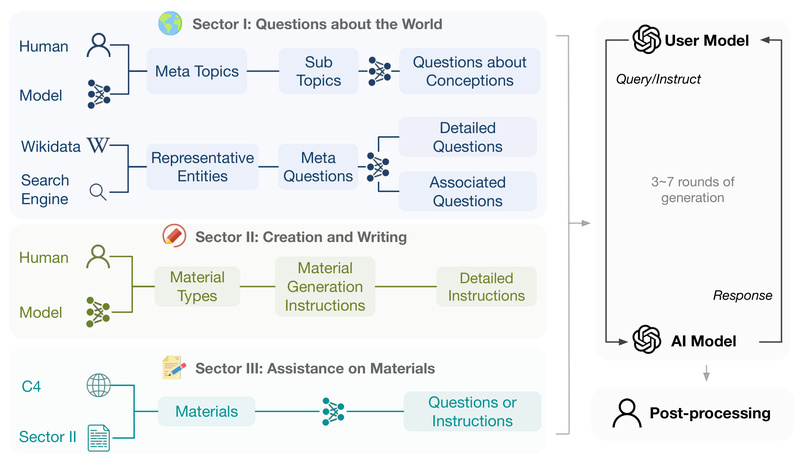

UltraChat: Train Powerful Open-Source Chat Models with 1.5M High-Quality, Privacy-Safe AI Dialogues 2721

If you’re a technical decision-maker evaluating options for building or fine-tuning a conversational AI system, you know that high-quality instruction-following…

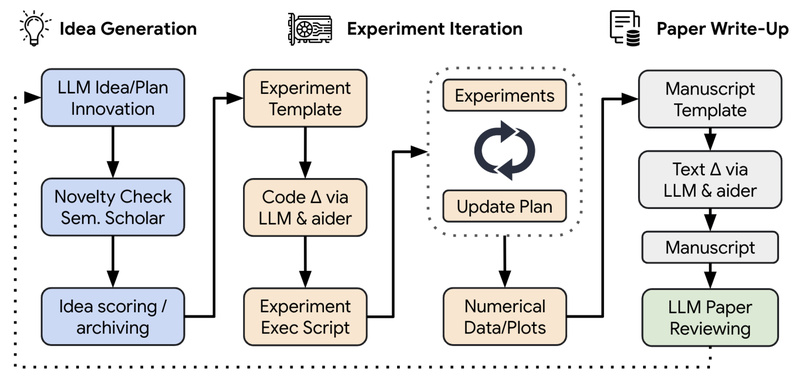

AI-Scientist: Automate End-to-End Machine Learning Research from Idea to Peer-Reviewed Paper 11593

Imagine a system that doesn’t just assist scientists but acts as one. It generates novel research hypotheses, writes executable code, runs…

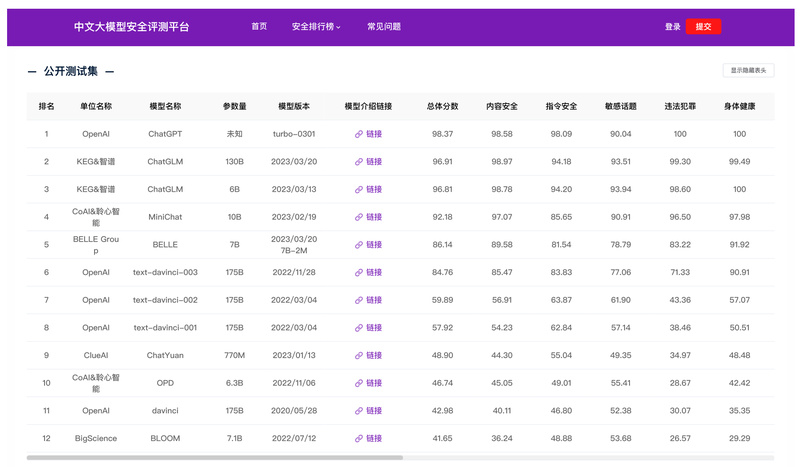

Safety-Prompts: Benchmark and Improve Chinese LLM Safety with 100K Realistic Test Cases 1111

As large language models (LLMs) become increasingly embedded in real-world applications—especially in Chinese-speaking regions—ensuring their safety has never been more…

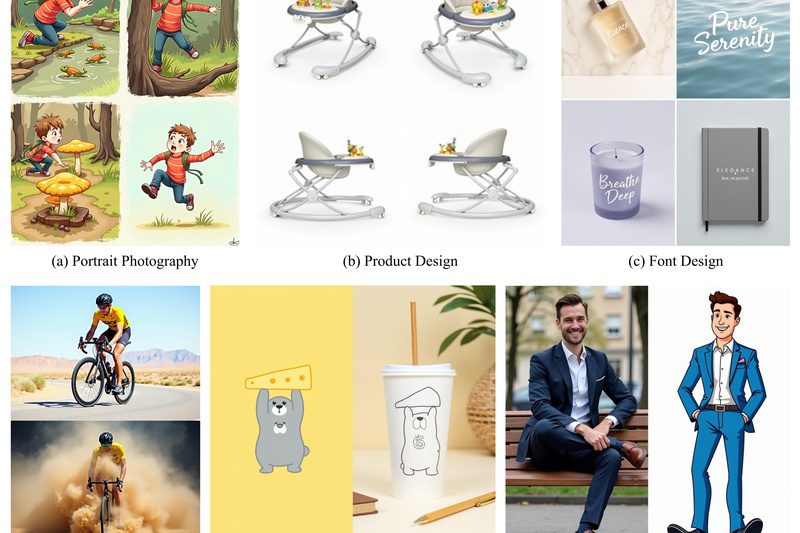

Qwen-Image: Generate and Edit Images with Perfect Text—Even in Chinese 6339

If you’ve ever struggled to generate marketing visuals with legible multilingual text—or tried to edit a product image only to…

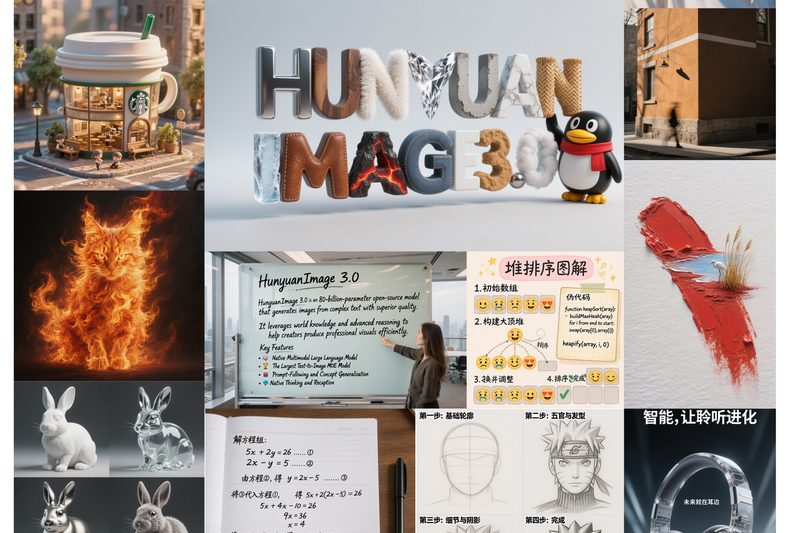

HunyuanImage-3.0: The Largest Open-Source Multimodal Image Generator with Native Reasoning and MoE Architecture 2562

HunyuanImage-3.0 is a groundbreaking open-source image generation model developed by Tencent. Unlike traditional diffusion-based approaches, it builds a native multimodal…

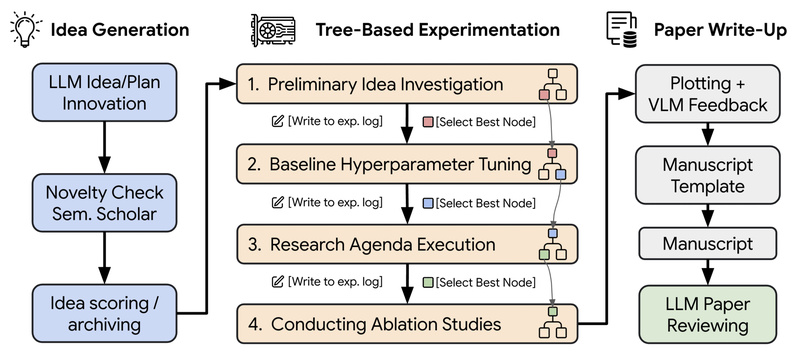

AI-Scientist-v2: Automate End-to-End Scientific Discovery with Agentic Tree Search 1866

In an era where AI is reshaping how knowledge is created, AI-Scientist-v2 emerges as a breakthrough system that autonomously conducts…

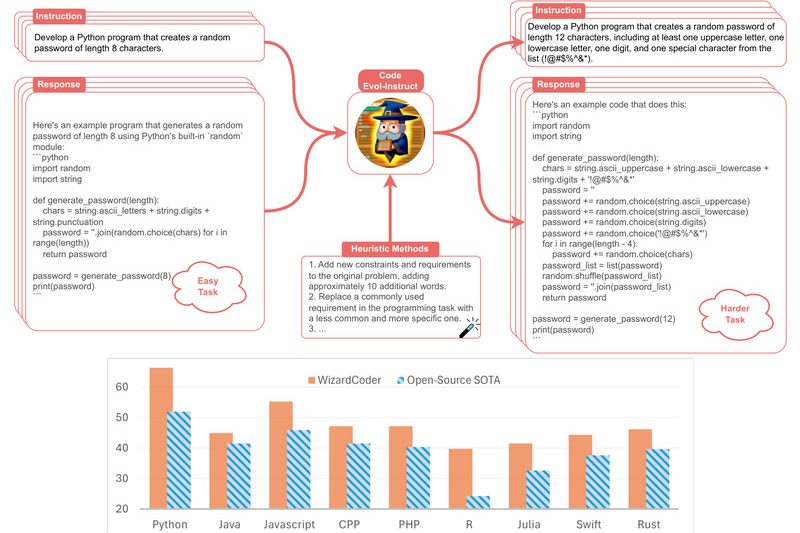

WizardCoder: Open-Source Code LLM That Outperforms ChatGPT and Gemini in Code Generation 9472

WizardCoder is a state-of-the-art open-source Code Large Language Model (Code LLM) that delivers exceptional performance on code generation tasks—often surpassing…

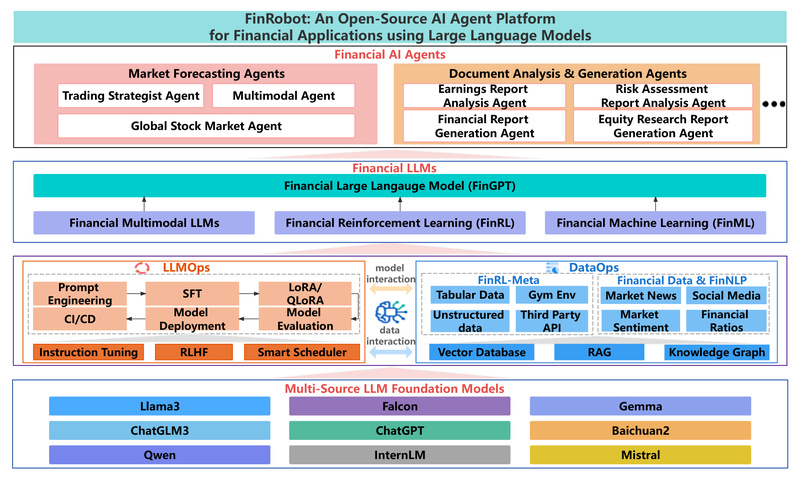

FinRobot: Build Finance-Specific AI Agents That Analyze, Forecast, and Generate Reports—Without Starting from Scratch 4779

In today’s fast-moving financial landscape, professionals and developers alike are eager to harness the power of large language models (LLMs).…