Deploying large language models (LLMs) today often means relying on expensive, centralized infrastructure—specialized GPU clusters, high-bandwidth data centers, and recurring cloud bills. But what if you could run powerful LLMs across a mix of personal laptops, spare servers, or community-contributed devices—without sacrificing performance or breaking the bank?

Enter Parallax: a fully decentralized LLM inference engine that transforms heterogeneous, geographically scattered hardware into a coordinated, high-performance inference platform. Developed by Gradient, Parallax eliminates the need for centralized GPU farms by intelligently distributing model layers and requests across available nodes—even if they vary in capability, location, or network conditions.

Whether you’re a researcher on a tight budget, a developer prioritizing on-device privacy, or part of a distributed team building community AI infrastructure, Parallax offers a practical path to affordable, flexible LLM deployment.

Why Centralized LLM Serving Is a Bottleneck

Traditional LLM inference systems assume uniform, high-end hardware and low-latency interconnects. In reality, many organizations and individuals lack access to such resources. Centralized approaches also introduce privacy concerns, vendor lock-in, and scaling costs that grow linearly (or worse) with usage.

Decentralized alternatives sound appealing—after all, there’s a vast amount of underutilized consumer-grade GPU compute out there. But naive attempts to pool these resources fail due to three key challenges:

- Hardware heterogeneity: Devices differ in GPU memory, compute power, and architecture.

- Network constraints: Bandwidth between nodes is often limited and unpredictable.

- Dynamic availability: Volunteer or edge nodes may go offline or change load suddenly.

Without intelligent coordination, decentralized inference becomes slower, less reliable, and harder to manage than centralized options.

How Parallax Solves the Decentralized Inference Puzzle

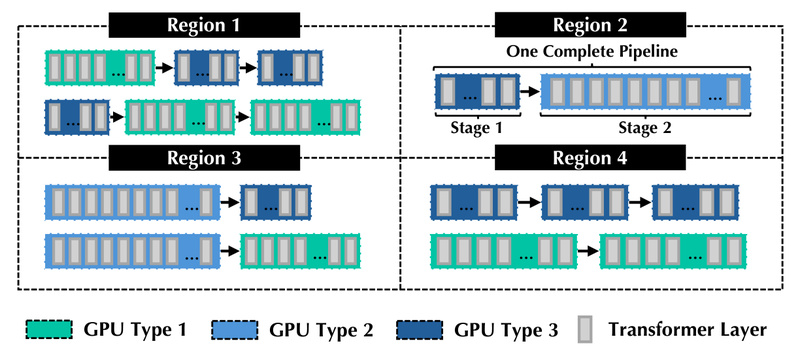

Parallax tackles these challenges through a novel two-phase scheduling architecture that separates long-term planning from real-time adaptation:

1. Model Allocation: Strategic Layer Placement

During setup, Parallax analyzes the available GPU pool and splits an LLM’s layers across nodes in a way that respects:

- Memory limits on each device,

- Bandwidth constraints between nodes,

- Latency and throughput targets.

This “pipeline parallel model sharding” ensures that no single node is overloaded, and communication bottlenecks are minimized from the start.

2. Runtime Pipeline Selection: Adaptive Request Routing

At inference time, Parallax dynamically stitches together execution paths by selecting the best available replica fragments to form end-to-end pipelines. It balances load across nodes and adapts to real-time conditions—like a node slowing down or new requests arriving—ensuring consistent performance even in volatile environments.

This dual-phase approach is what allows Parallax to outperform naive decentralized baselines in both latency and throughput, as demonstrated in real-world deployments across volunteer nodes.

Key Features That Make Parallax Practical

Beyond its core scheduler, Parallax delivers developer-friendly capabilities that lower the barrier to decentralized LLM use:

- Host local LLMs on personal devices: Run models directly on your laptop, desktop, or server without cloud dependency.

- Cross-platform support: Works across Linux, macOS, and Windows environments.

- Optimized backends: Leverages SGLang for GPU acceleration, MLX LM for Apple Silicon (including dynamic KV cache and continuous batching on Mac), and Lattica for peer-to-peer networking.

- Dynamic request scheduling: Routes queries intelligently to maximize resource utilization and minimize wait times.

- Open model compatibility: Officially supports leading open-weight models including Llama 3, Qwen, DeepSeek, MiniMax-M2, GLM-4.6, Kimi-K2, and gpt-oss.

These features make Parallax not just a research prototype, but a usable tool for real projects.

Ideal Use Cases for Parallax

Parallax shines in scenarios where centralized infrastructure is impractical, undesirable, or unaffordable:

- Startups and indie developers seeking to avoid cloud inference costs while maintaining model quality.

- Academic researchers running experiments on volunteer or lab hardware without dedicated GPU clusters.

- Privacy-conscious teams who need on-premise LLM inference to keep sensitive data off third-party servers.

- Community AI initiatives building shared compute pools from donated or repurposed devices.

- Edge deployments in remote or disconnected environments where cloud access is limited.

Because Parallax thrives in heterogeneous, dynamic settings, it’s uniquely suited for the real world—where perfect hardware doesn’t exist.

Getting Started

Parallax is designed for accessibility. Getting up and running involves:

- Installing the Parallax engine (see the official User Guide).

- Selecting a supported open-source model (e.g., Llama 3.1, Qwen3, or MiniMax-M2).

- Configuring your node(s) to join a local or distributed cluster.

The system automatically handles model sharding, peer discovery, and request routing—so you can focus on your application logic, not infrastructure plumbing.

Current Limitations and Considerations

As of its initial release (v0.0.1, October 2025), Parallax is still an early-stage project. Users should consider:

- Model support is limited to open-weight LLMs—proprietary or closed models aren’t compatible.

- Performance depends on node availability and network quality, which can vary in volunteer setups.

- Tooling and documentation are evolving, so some experimentation may be required.

That said, Parallax’s architecture is principled and scalable, making it a promising foundation for decentralized AI infrastructure.

Summary

Parallax reimagines LLM inference for a decentralized world. By intelligently coordinating heterogeneous, geographically dispersed devices, it delivers high performance without centralized GPU clusters—cutting costs, enhancing privacy, and democratizing access to powerful AI. For developers, researchers, and teams seeking an alternative to cloud-dependent LLM serving, Parallax offers a compelling, practical starting point.

If you’re exploring ways to run LLMs on your own hardware or build community-driven AI systems, Parallax is worth evaluating today.