In the era of remote collaboration, virtual meetings have become the norm, and clean, real-time human portrait segmentation is now essential for professional, engaging video experiences. PP-HumanSeg, developed by PaddlePaddle, is an open-source solution built for exactly this need. Unlike general-purpose segmentation models trained on diverse scene datasets, PP-HumanSeg specializes in front-facing human portraits in teleconferencing environments. It combines a Semantic Connectivity-aware Learning (SCL) framework with an ultra-lightweight architecture to produce high-quality cutouts in real time, which makes it deployable on targets ranging from mobile phones to edge devices and servers.

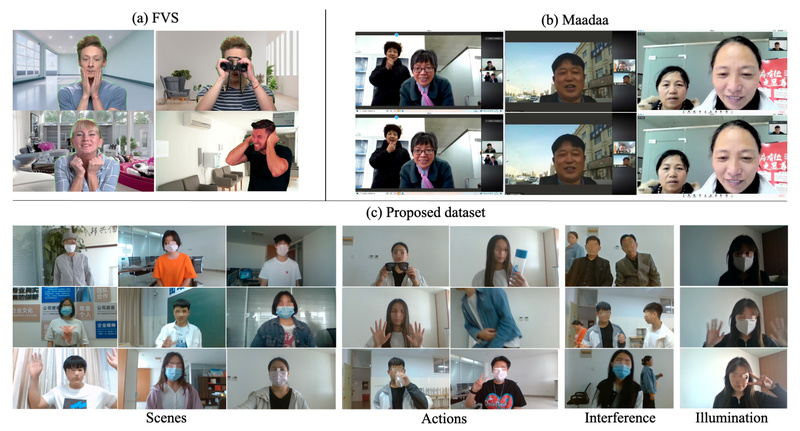

Much of PP-HumanSeg's advantage comes from its training data: PP-HumanSeg14K, presented as the first large-scale video portrait dataset for teleconferencing. It contains 291 videos recorded in 23 real-world conference scenes, with over 14,000 finely labeled frames. Training on this targeted data exposes the model to challenges common in video calls, such as rapid head movements, varying lighting, and partial occlusions, which helps it produce smoother and more reliable masks in these settings than general-purpose models.

Core Innovations: Speed, Structure, and Simplicity

Semantic Connectivity-aware Learning (SCL)

Standard segmentation losses such as cross-entropy or Dice optimize per-pixel accuracy but largely ignore structural coherence, which can leave fragmented masks with holes or disconnected limbs. PP-HumanSeg adds a Semantic Connectivity-aware Loss that explicitly penalizes breaks in the connectivity of the predicted human silhouette. This encourages the output to remain a single, connected, anatomically plausible human mask, even under motion blur or complex poses.
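One way to picture what a connectivity-aware objective rewards is to score masks by their connected components rather than by individual pixels. The plain-Python sketch below is an illustration under stated assumptions, not PaddleSeg's SCL implementation: the function names are hypothetical, components are 4-connected, every overlapping prediction/ground-truth component pair counts as a match, and each unmatched component counts as a zero. Because it is not differentiable, it can only show what the loss favors, not replace it during training.

```python
from collections import deque

Mask = list[list[int]]  # 2D binary mask: 1 = person, 0 = background


def label_components(mask: Mask) -> list[set[tuple[int, int]]]:
    """Return the pixel sets of all 4-connected foreground regions."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    components = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            seen[y][x] = True
            region, queue = set(), deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                region.add((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(region)
    return components


def connectivity_score(pred: Mask, gt: Mask) -> float:
    """Hypothetical score in [0, 1]: IoU summed over overlapping component
    pairs, divided by pairs plus unmatched components on either side."""
    pred_cc, gt_cc = label_components(pred), label_components(gt)
    if not pred_cc and not gt_cc:
        return 1.0  # both masks empty: trivially consistent
    iou_sum, pairs = 0.0, 0
    matched_pred, matched_gt = set(), set()
    for i, p in enumerate(pred_cc):
        for j, g in enumerate(gt_cc):
            inter = len(p & g)
            if inter:
                iou_sum += inter / len(p | g)
                pairs += 1
                matched_pred.add(i)
                matched_gt.add(j)
    unmatched = (len(pred_cc) - len(matched_pred)) + (len(gt_cc) - len(matched_gt))
    return iou_sum / (pairs + unmatched)


def connectivity_loss(pred: Mask, gt: Mask) -> float:
    """Loss form of the score: 0 when component structure matches exactly."""
    return 1.0 - connectivity_score(pred, gt)
```

For example, with `gt = [[1, 1, 0, 0]]` and `pred = [[1, 1, 0, 1]]` (the correct person plus one spurious pixel), pixel IoU is 2/3, but `connectivity_score` drops to 0.5 because the stray blob counts as a full unmatched component.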

Ultra-Lightweight Design for Real-Time Performance

Balancing accuracy and inference speed is critical for live applications. PP-HumanSeg addresses this with a compact neural architecture optimized for low latency. Its published benchmarks report a strong trade-off between Intersection-over-Union (IoU) and frames per second (FPS), which makes it suitable for resource-constrained targets such as smartphones (e.g., with a Snapdragon 855) or low-power edge servers.
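Both axes of that trade-off are easy to measure on your own hardware before committing to a variant. The minimal sketch below computes foreground IoU between binary masks and estimates throughput for any segmentation callable. The `segment` argument is a placeholder for whatever inference function you wrap (for example, a Paddle Inference or PaddleLite predictor), and the warm-up and iteration counts are arbitrary assumptions.

```python
import time
from typing import Callable, Sequence

Mask = Sequence[Sequence[int]]  # 2D binary mask: 1 = person, 0 = background


def foreground_iou(pred: Mask, gt: Mask) -> float:
    """Intersection-over-Union of the foreground (person) class."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += bool(p and g)
            union += bool(p or g)
    # Two empty masks agree perfectly.
    return 1.0 if union == 0 else inter / union


def measure_fps(segment: Callable[[object], object], frame: object,
                warmup: int = 5, iters: int = 50) -> float:
    """Average frames per second of `segment` on a single input frame.

    Warm-up runs are excluded so one-time costs (memory allocation,
    kernel selection, lazy initialization) do not skew the estimate.
    """
    for _ in range(warmup):
        segment(frame)
    start = time.perf_counter()
    for _ in range(iters):
        segment(frame)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against zero
    return iters / elapsed
```

When timing a GPU backend, make sure `segment` includes copying the output back to host memory; otherwise asynchronous execution can make the FPS figure look better than what a live video loop would see.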

For instance, variants like PP-HumanSeg-Lite are deployable via PaddleLite, enabling real-time inference directly on ARM CPUs—without requiring cloud offloading or dedicated GPUs.

Ideal Use Cases for Technical Decision-Makers

PP-HumanSeg excels in scenarios where real-time human foreground extraction is required with minimal computational overhead:

- Video conferencing platforms: Replace backgrounds dynamically while preserving hair, glasses, and hand gestures.

- Live streaming tools: Enable creators to overlay virtual sets or blur sensitive environments instantly.

- Mobile productivity apps: Integrate portrait segmentation into selfie editors, AR filters, or remote interview tools.

- Edge AI deployments: Run privacy-preserving segmentation on-device in smart displays, kiosks, or robots.
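Once a mask is available, background replacement is a per-pixel blend. The minimal sketch below assumes a per-pixel foreground weight in [0, 1] (a hard 0/1 mask from a segmentation model, or a soft alpha matte from a matting model) and computes `alpha * frame + (1 - alpha) * background`. Images are nested lists of RGB tuples to keep the example dependency-free; a production pipeline would use vectorized array operations. The `feather` helper, a simple box blur of the mask to soften hard edges, is a hypothetical illustration and not a PP-HumanSeg feature.

```python
RGB = tuple[int, int, int]
Image = list[list[RGB]]
Alpha = list[list[float]]  # per-pixel foreground weight in [0, 1]


def feather(mask: Alpha, radius: int = 1) -> Alpha:
    """Soften a hard mask with a (2r+1)x(2r+1) box blur, clipped at borders."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += mask[ny][nx]
                    count += 1
            out[y][x] = total / count
    return out


def composite(frame: Image, background: Image, alpha: Alpha) -> Image:
    """Blend each pixel: alpha * frame + (1 - alpha) * background."""
    result = []
    for f_row, b_row, a_row in zip(frame, background, alpha):
        row = []
        for f_px, b_px, a in zip(f_row, b_row, a_row):
            row.append(tuple(round(a * f + (1 - a) * b) for f, b in zip(f_px, b_px)))
        result.append(row)
    return result
```

For a blurred-background effect instead of a virtual set, pass a blurred copy of the original frame as `background`; the blending step stays the same.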

If your project demands efficient, human-centric segmentation in front-facing camera contexts, PP-HumanSeg offers a production-ready, well-documented path with minimal tuning.

Getting Started: From Repository to Deployment

PP-HumanSeg is part of the broader PaddleSeg toolkit, an end-to-end image segmentation suite by PaddlePaddle. Getting started is streamlined:

- Access the code: Clone from the official repository at github.com/PaddlePaddle/PaddleSeg.

- Use pre-trained models: Download ready-to-use checkpoints such as PP-HumanSegV1 or PP-HumanSegV2, trained on the PP-HumanSeg14K dataset.

- Deploy flexibly:

  - Server GPU: Use Paddle Inference with TensorRT acceleration.

  - Mobile CPU: Compile with PaddleLite for Android/iOS.

  - Web: Export to ONNX or use Paddle.js for browser-based inference.

- Integrate easily: Leverage simple Python APIs or the PaddleX GUI tool for no-code configuration and model chaining (e.g., combining segmentation with matting for soft edges).

The toolkit also supports major hardware backends, including NVIDIA GPUs, Huawei Ascend, Biren GPUs, and Kunlun chips, which eases migration across infrastructure.
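Whichever runtime you choose, the exported model is usually wrapped by the same two steps: image preprocessing before the predictor and mask extraction after it. The plain-Python sketch below shows both under loudly stated assumptions. The normalization constants (mean and std of 0.5, a value commonly used in PaddleSeg configs), the nearest-neighbour resize, the target size you pass in, and the output channel order [background, person] should all be checked against the deploy config of the specific PP-HumanSeg checkpoint. The function names are hypothetical, and the predictor call itself is omitted because it differs between Paddle Inference, PaddleLite, and Paddle.js.

```python
Pixel = tuple[int, int, int]  # RGB, 0-255
Image = list[list[Pixel]]
Tensor3 = list[list[list[float]]]  # (C, H, W)


def preprocess(image: Image, out_h: int, out_w: int,
               mean: float = 0.5, std: float = 0.5) -> Tensor3:
    """Nearest-neighbour resize, scale to [0, 1], normalize, and convert
    HWC -> CHW (the channel-first layout Paddle vision models commonly use).
    Add a leading batch dimension before feeding a real predictor."""
    in_h, in_w = len(image), len(image[0])
    chw = [[[0.0] * out_w for _ in range(out_h)] for _ in range(3)]
    for y in range(out_h):
        src_y = y * in_h // out_h
        for x in range(out_w):
            src_x = x * in_w // out_w
            for c, value in enumerate(image[src_y][src_x]):
                chw[c][y][x] = (value / 255.0 - mean) / std
    return chw


def postprocess(scores: Tensor3) -> list[list[int]]:
    """Turn per-class scores shaped (2, H, W), assumed ordered
    [background, person], into a binary person mask via argmax."""
    background, person = scores
    return [[1 if p > b else 0 for b, p in zip(b_row, p_row)]
            for b_row, p_row in zip(background, person)]
```

If your exported model already returns a label map instead of per-class scores, skip `postprocess` and use its output directly as the mask.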

Limitations and Scope Boundaries

While powerful within its domain, PP-HumanSeg has intentional boundaries:

- Specialized for human portraits: It is not designed for general semantic segmentation (e.g., urban scenes, medical imaging, or multi-object parsing).

- Optimized for single-person, front-facing views: Performance may degrade in crowded scenes, extreme side angles, or non-human subjects.

- Speed-accuracy trade-off: Though highly efficient, it may not match the pixel-level precision of heavyweight models (e.g., HRNet + OCRNet) in non-real-time settings.

Thus, PP-HumanSeg is best chosen when real-time human segmentation in conferencing-like settings is the priority—not when broad scene understanding is required.

Integration Within the PaddleSeg Ecosystem

PP-HumanSeg isn’t a standalone tool—it’s a specialized module within PaddleSeg, which offers 45+ segmentation algorithms and 140+ pre-trained models. This integration allows teams to:

- Combine PP-HumanSeg with PP-Matting for alpha matting (soft edges around hair).

- Extend to interactive segmentation (via RITM or EISeg) for manual refinement.

- Switch to 3D medical segmentation or panoptic segmentation models if project scope evolves.

This modularity ensures you’re never locked into a single capability—scaling from lightweight portrait cutouts to complex vision pipelines as needed.

Summary

PP-HumanSeg solves a precise but widespread problem: delivering fast, structurally coherent human portrait segmentation for real-time communication. Its connectivity-aware learning, teleconferencing-optimized dataset, and ultra-efficient design make it a compelling choice for developers building video apps, edge AI products, or privacy-conscious interfaces. With seamless deployment options and integration into a mature segmentation ecosystem, it lowers the barrier to production-grade human segmentation—without compromising on speed or usability.

For project and technical decision-makers seeking a focused, battle-tested solution for human foreground extraction, PP-HumanSeg offers both performance and practicality out of the box.