Visual-Inertial Navigation Systems (VINS) are critical for applications like drones, robotics, and augmented reality, where precise real-time localization is required without relying on GPS. However, many existing VINS solutions face a fundamental trade-off: high accuracy often comes at the cost of heavy computational demands, making them impractical for embedded or mobile platforms with limited resources.

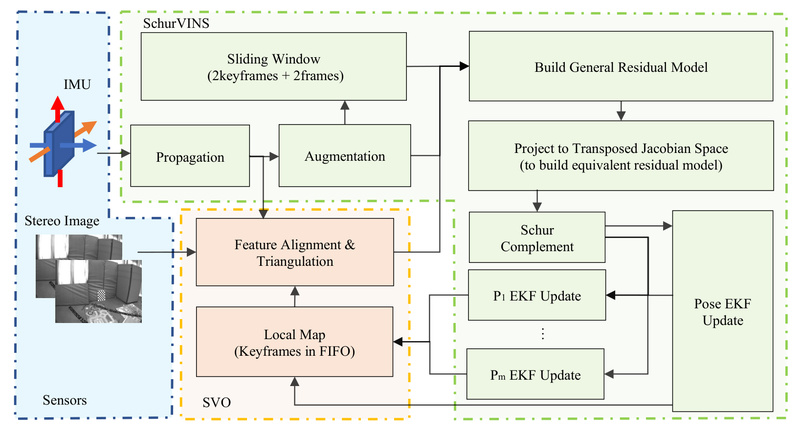

Enter SchurVINS: a lightweight yet highly accurate filter-based VINS framework developed by ByteDance and accepted at CVPR 2024. By combining a complete residual model with the computational efficiency of the Schur complement, SchurVINS reports state-of-the-art results in both accuracy and computational cost. It’s designed for scenarios where efficiency and real-time responsiveness are non-negotiable, without sacrificing localization precision.

Why SchurVINS Stands Out

A Complete Residual Model for Higher Accuracy

Unlike EKF-based VINS designs that simplify the observation model to reduce complexity, SchurVINS builds a full residual model that explicitly represents the gradient, Hessian, and observation covariance of the visual-inertial measurements. This richer representation retains more of the geometric and statistical information in the sensor data, and it is the basis for the system's higher estimation accuracy.
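Here is a concrete, hedged illustration of what "gradient, Hessian, and observation covariance" means for a residual model. The sketch below builds the standard Gauss-Newton information form of a stack of residuals r with Jacobian J and observation covariance R: H = J^T R^-1 J and g = J^T R^-1 r. It assumes independent (diagonal) noise and uses plain Python lists to stay dependency-free. The function name information_form and the toy numbers are hypothetical, and this is not code from the SchurVINS repository.

```python
def transpose(a):
    """Transpose a list-of-lists matrix."""
    return [list(row) for row in zip(*a)]


def matmul(a, b):
    """Multiply matrices a (p x q) and b (q x s)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def information_form(J, r, sigmas):
    """Return (H, g) for residuals r with Jacobian J.

    Assumes independent noise, so the observation covariance is
    R = diag(sigma_i^2) and its inverse is diagonal.
      H = J^T R^-1 J   (Gauss-Newton approximation of the Hessian)
      g = J^T R^-1 r   (gradient of 0.5 * r^T R^-1 r)
    """
    w = [1.0 / (s * s) for s in sigmas]                   # diagonal of R^-1
    WJ = [[wi * v for v in row] for wi, row in zip(w, J)]  # R^-1 J (row scaling)
    Jt = transpose(J)
    H = matmul(Jt, WJ)
    g = [sum(j * wi * ri for j, wi, ri in zip(row, w, r)) for row in Jt]
    return H, g


# Toy example: 3 scalar residuals depending on 2 state parameters.
J = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
r = [0.1, -0.2, 0.05]
sigmas = [1.0, 1.0, 0.5]   # the third measurement is twice as precise
H, g = information_form(J, r, sigmas)
print(H)  # [[5.0, 4.0], [4.0, 5.0]]
print(g)
```

The more precise third measurement gets four times the weight, which shows up directly in H and g. This is how the covariance shapes the model rather than being discarded.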

Schur Complement for Drastic Computational Savings

The key innovation lies in how SchurVINS processes this high-dimensional model. Using the Schur complement, it eliminates the landmark variables from the full model and decomposes it into two smaller residual models: one for ego-motion (the trajectory of the moving platform) and one for landmark positions (3D features in the environment). The ego-motion model still accounts for the landmark information. Solving it, however, no longer requires inverting the large joint pose-and-landmark matrix in real time, which significantly reduces computational load while preserving estimation fidelity.
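The elimination step rests on a generic block-matrix identity, sketched below under stated assumptions. A Gauss-Newton style system H d = -g is split into ego-motion parameters (the first n) and landmark parameters. The landmarks are eliminated with the Schur complement S = H_xx - H_xl H_ll^-1 H_lx, and the reduced system S dx = -(g_x - H_xl H_ll^-1 g_l) is solved. The landmark update is then recovered by back-substitution. The helper names, the toy matrix, and the use of a plain linear solve (rather than an EKF update) are illustrative assumptions, not SchurVINS's implementation.

```python
def matmul(a, b):
    """Multiply matrices a (p x q) and b (q x s)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matvec(a, v):
    """Multiply matrix a by vector v."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(x) for x in row] + [float(bi)] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            for j in range(c, n + 1):
                M[i][j] -= f * M[c][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def inverse(A):
    """Invert A column by column (fine for the small blocks used here)."""
    n = len(A)
    cols = [solve(A, [1.0 if i == j else 0.0 for i in range(n)]) for j in range(n)]
    return [list(row) for row in zip(*cols)]


def schur_solve(H, g, n):
    """Solve H d = -g by eliminating the trailing landmark block.

    The first n parameters are ego-motion states; the rest are landmarks.
    """
    Hxx = [row[:n] for row in H[:n]]
    Hxl = [row[n:] for row in H[:n]]
    Hlx = [row[:n] for row in H[n:]]
    Hll = [row[n:] for row in H[n:]]
    gx, gl = g[:n], g[n:]

    Hll_inv = inverse(Hll)      # cheap in practice when Hll is block-diagonal
    K = matmul(Hxl, Hll_inv)    # Hxl Hll^-1
    KHlx = matmul(K, Hlx)
    S = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(Hxx, KHlx)]  # Schur complement
    Kgl = matvec(K, gl)
    dx = solve(S, [-(a - b) for a, b in zip(gx, Kgl)])  # reduced ego-motion system

    # Back-substitution for the landmarks: Hll dl = -(gl + Hlx dx)
    Hlx_dx = matvec(Hlx, dx)
    dl = matvec(Hll_inv, [-(a + b) for a, b in zip(gl, Hlx_dx)])
    return dx, dl


# Toy system: 2 ego-motion parameters, 2 landmark parameters (Hll is diagonal).
H = [[4.0, 1.0, 1.0, 0.0],
     [1.0, 3.0, 0.0, 1.0],
     [1.0, 0.0, 2.0, 0.0],
     [0.0, 1.0, 0.0, 2.0]]
g = [1.0, 2.0, 3.0, 4.0]

dx, dl = schur_solve(H, g, n=2)
full = solve(H, [-v for v in g])
print(dx + dl, full)   # the two solutions agree up to rounding
```

In a typical VINS, each camera measurement involves a single landmark, so H_ll is block-diagonal with one small block per landmark. Inverting it therefore costs time linear in the number of landmarks, which is what makes the reduced ego-motion system cheap to form.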

Efficient Dual-Stream EKF Updates

SchurVINS then applies Extended Kalman Filter (EKF) updates to both the ego-motion model and the landmark model. Because each update works on a smaller model than the full joint problem, the system can run in real time on modest hardware. The result: on the EuRoC and TUM-VI benchmarks, the paper reports that SchurVINS outperforms existing state-of-the-art VINS methods in both trajectory accuracy and computational cost.
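An EKF update can be driven directly by a Hessian/gradient pair, which is how a residual model in information form plugs into a filter. The sketch below assumes a prior mean x and covariance P, residuals defined as r = h(x) - z, H = J^T R^-1 J, and g = J^T R^-1 r. Under those assumptions, the posterior is P+ = (P^-1 + H)^-1 and x+ = x - P+ g. The sketch checks this result against the textbook Kalman-gain form. It is a generic single-step EKF update restricted to two states, so a closed-form 2x2 inverse suffices. The exact ordering and parameterization of SchurVINS's ego-motion and landmark updates are not reproduced here, and all names and values are hypothetical.

```python
def matmul(a, b):
    """Multiply matrices a (p x q) and b (q x s)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(row) for row in zip(*a)]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def matvec(a, v):
    return [sum(x * y for x, y in zip(row, v)) for row in a]


def inv2(m):
    """Closed-form inverse of a 2x2 matrix."""
    (a11, a12), (a21, a22) = m
    det = a11 * a22 - a12 * a21
    return [[a22 / det, -a12 / det], [-a21 / det, a11 / det]]


def ekf_update_information(x, P, H, g):
    """EKF update from a Hessian/gradient pair: P+ = (P^-1 + H)^-1, x+ = x - P+ g."""
    P_post = inv2(add(inv2(P), H))
    step = matvec(P_post, g)
    return [xi - si for xi, si in zip(x, step)], P_post


def ekf_update_gain(x, P, J, R, r):
    """Textbook EKF update with residual r = h(x) - z: x+ = x - K r, P+ = (I - K J) P."""
    Jt = transpose(J)
    K = matmul(matmul(P, Jt), inv2(add(matmul(matmul(J, P), Jt), R)))
    KJ = matmul(K, J)
    I_KJ = [[(1.0 if i == j else 0.0) - KJ[i][j] for j in range(2)] for i in range(2)]
    return [xi - si for xi, si in zip(x, matvec(K, r))], matmul(I_KJ, P)


# Toy 2-state example with 2 measurements (all values are illustrative).
x = [0.0, 1.0]
P = [[0.5, 0.1], [0.1, 0.3]]
J = [[1.0, 0.0], [1.0, 1.0]]
R = [[0.2, 0.0], [0.0, 0.4]]
r = [0.3, -0.1]                      # predicted minus measured

Rinv = inv2(R)
Jt = transpose(J)
H = matmul(matmul(Jt, Rinv), J)      # J^T R^-1 J
g = matvec(matmul(Jt, Rinv), r)      # J^T R^-1 r

x_info, P_info = ekf_update_information(x, P, H, g)
x_gain, P_gain = ekf_update_gain(x, P, J, R, r)
print(x_info, x_gain)                # identical up to floating-point rounding
```

The two forms agree by the matrix inversion lemma. The information form is convenient when the measurement side arrives as a (possibly Schur-reduced) Hessian and gradient rather than as raw residuals.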

When to Choose SchurVINS

SchurVINS excels in real-world applications where high-precision pose estimation must coexist with limited compute, memory, or power. Ideal use cases include:

- Autonomous micro-drones that require stable navigation in GPS-denied indoor spaces.

- Mobile robots operating in warehouses or hospitals with strict latency constraints.

- AR/VR headsets needing low-drift 6-DoF tracking without draining battery life.

- Embedded vision systems on edge devices where running full SLAM pipelines is infeasible.

If your project involves stereo cameras paired with an IMU (like the popular EuRoC sensor setup), and you need a VINS that balances robustness, speed, and accuracy—SchurVINS is a compelling choice.

Problems SchurVINS Solves in Practice

Many engineers and researchers face the same dilemma: use a lightweight VINS and accept drift, or deploy a high-accuracy system and risk frame drops or overheating. SchurVINS directly addresses this tension by:

- Easing the accuracy-efficiency trade-off: it reaches state-of-the-art accuracy without the computational cost that usually comes with it.

- Handling high-dimensional visual data efficiently: by eliminating landmarks with the Schur complement, it avoids directly solving the full joint pose-and-landmark system, whose dense solution cost grows cubically with the total number of pose and landmark parameters.

- Enabling stable real-time operation: Its filter-based design ensures consistent update rates, crucial for control loops in robotics or navigation.
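A rough, dense operation count shows why eliminating landmarks first helps. The sketch below compares two routes for n ego-motion parameters and m three-dimensional landmarks. The first is a dense Cholesky of the full joint system, about (n + 3m)^3 / 3 multiply-adds. The second is the Schur route: per-landmark 3x3 work plus one n x n factorization. The cost model, the 3-parameter landmark assumption, and the sizes n = 30 and m = 100 are all hypothetical. Sparse solvers, covariance propagation, and Jacobian evaluation are ignored, so these are order-of-magnitude figures, not SchurVINS measurements.

```python
def dense_cost(n, m, d=3):
    """Rough multiply-add count for a dense Cholesky of the full joint system."""
    N = n + d * m
    return N ** 3 / 3


def schur_cost(n, m, d=3):
    """Rough multiply-add count when landmarks are eliminated first.

    Per landmark: invert its d x d block (~d^3), form Hxl_j Hll_j^-1 (~n d^2),
    and subtract its contribution to S (~n^2 d). Then factor the n x n S (~n^3 / 3).
    """
    per_landmark = d ** 3 + n * d ** 2 + n ** 2 * d
    return m * per_landmark + n ** 3 / 3


n, m = 30, 100   # hypothetical: 30 ego-motion parameters, 100 point landmarks
print(dense_cost(n, m))   # 11979000.0
print(schur_cost(n, m))   # 308700.0
```

The dense count grows cubically in m, while the Schur count grows only linearly in m. This is the scaling behind the efficiency claim in the bullets above.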

In the paper's benchmark experiments, SchurVINS reports lower ATE (Absolute Trajectory Error) than the compared methods while using less computation, so its theoretical elegance carries over into practical performance.
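ATE is commonly reported as the root-mean-square of position errors between time-matched estimated and ground-truth poses, computed after the two trajectories have been aligned. The minimal sketch below computes that RMSE. It assumes association and alignment (for example, a rigid or similarity fit) have already been done. The function name ate_rmse and the sample positions are hypothetical, and the paper's exact evaluation protocol is not restated here.

```python
import math


def ate_rmse(gt_positions, est_positions):
    """Root-mean-square translational error between matched positions.

    Assumes both trajectories are already time-associated and aligned;
    no alignment is performed here.
    """
    if len(gt_positions) != len(est_positions) or not gt_positions:
        raise ValueError("need two non-empty, equally long position lists")
    sq = [sum((a - b) ** 2 for a, b in zip(p_est, p_gt))
          for p_gt, p_est in zip(gt_positions, est_positions)]
    return math.sqrt(sum(sq) / len(sq))


gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
est = [(0.3, 0.4, 0.0), (1.0, 0.3, 0.4)]
print(ate_rmse(gt, est))   # approximately 0.5
```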

Getting Started with SchurVINS

SchurVINS is built on SVO 2.0 (rpg_svo_pro_open), a mature and widely used visual odometry framework, which smooths the learning curve for those already familiar with ROS-based SLAM systems.

To run SchurVINS:

- Set up an Ubuntu 18.04 environment with ROS Melodic.

- Install required dependencies, including OpenCV, Ceres Solver, and GLEW.

- Clone the repository and use catkin build to compile the workspace.

- Run the provided launch file with a EuRoC dataset (e.g., MH_01_easy.bag) to see real-time VIO in action via RViz.

The repository includes clear instructions for building, running, and reproducing results. For reproducibility, note that skipping the first few seconds of certain EuRoC sequences (as specified in the README) helps align results with the paper.

Limitations and Practical Notes

While powerful, SchurVINS has a few constraints to consider:

- OS and ROS dependency: It currently requires Ubuntu 18.04 + ROS Melodic, which may pose challenges for users on newer systems (e.g., Ubuntu 20.04/22.04 or ROS Noetic/Humble). Porting may be necessary for broader compatibility.

- Sensor configuration: It’s optimized for stereo + IMU setups like those in the EuRoC dataset. Monocular or RGB-D configurations are not natively supported.

- Multi-threading variability: Due to CPU scheduling and parallel processing, minor performance fluctuations may occur between runs—though this is common in real-time robotics systems.

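Despite these, the project’s solid foundation on SVO 2.0 makes it a viable candidate for both research and commercial prototyping. Keep in mind that it is GPLv3-licensed, so any derivative work you distribute must also be released under GPLv3, which matters if you plan to ship it in a product.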

Summary

SchurVINS raises the bar for lightweight visual-inertial navigation. By fusing a complete residual model with the computational efficiency of the Schur complement, it delivers high accuracy and efficiency in a single framework. For engineers, researchers, and developers working on resource-constrained autonomous systems, SchurVINS offers a rare combination: you no longer have to choose between precision and performance. With open-source code, strong benchmark results, and a clear integration path via ROS, it’s ready to accelerate your next navigation project without overloading your hardware.