Solving complex reasoning problems remains a persistent challenge for Large Language Models (LLMs). Techniques like Chain-of-Thought (CoT) prompting have unlocked some of this potential, but they often fall short when questions demand deeper exploration or multi-faceted understanding. SQuARE, the Sequential Question Answering Reasoning Engine, is a prompting strategy that improves LLM reasoning by having the model interrogate itself before answering the main query.

Unlike methods that require costly fine-tuning or architectural changes, SQuARE operates purely at the prompting level. It guides LLMs to first generate and resolve a series of auxiliary questions related to the original problem, then synthesize those insights into a final, well-reasoned answer. This self-interrogation process leads to more thorough, accurate, and reliable outputs—especially on complex, knowledge-intensive tasks.

Originally introduced in the paper "SQuARE: Sequential Question Answering Reasoning Engine for Enhanced Chain-of-Thought in Large Language Models", this approach has demonstrated consistent performance gains over standard CoT and rephrase-and-respond baselines across multiple benchmarks, using both Llama 3 and GPT-4o. And because it’s implemented within the open-source RAG-FiT framework, it’s easy to test, adapt, and integrate into existing workflows—without retraining your model.

How SQuARE Works

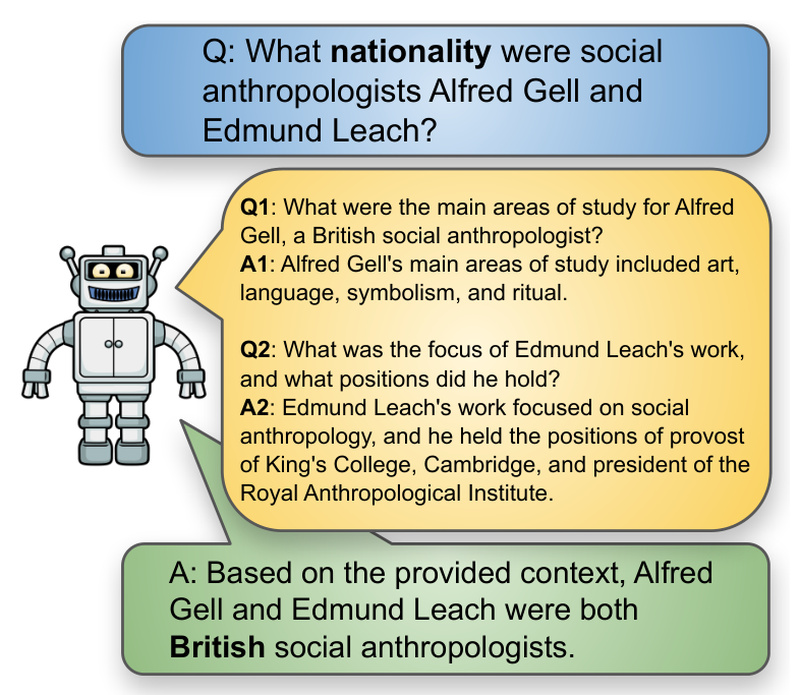

At its core, SQuARE extends the CoT paradigm by adding a structured decomposition phase. Instead of jumping straight into reasoning or generating a single linear thought chain, the model is prompted to:

- Identify key sub-questions that must be answered to resolve the main query.

- Answer each sub-question sequentially, using its own knowledge or context.

- Synthesize all intermediate answers into a coherent final response.

This mimics how humans approach complex problems: by breaking them down, examining each piece, and then reassembling the insights. The result is a more deliberate and comprehensive reasoning path, which is intended to reduce hallucination and improve factual grounding.
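The three steps above can be sketched as a single prompt builder. This is a minimal illustration only: the function name `build_square_prompt`, the `Q1:/A1:/Final answer:` layout, and the instruction wording are assumptions made for this example, not the prompt shipped in RAG-FiT or used in the paper.

```python
def build_square_prompt(question: str, context: str = "", num_questions: int = 3) -> str:
    """Assemble one prompt asking for sub-questions, their answers, then a final answer."""
    # NOTE: illustrative wording and layout; not the actual SQuARE prompt text.
    parts = []
    if context:
        parts.append(f"Context:\n{context}\n")
    parts.append(f"Question: {question}\n")
    # Steps 1 and 2: ask for auxiliary questions and sequential answers.
    parts.append(
        f"Before answering, write {num_questions} auxiliary questions that must be "
        "resolved to answer the question above, and answer each one in order.\n"
        "Use this format:\n"
    )
    for i in range(1, num_questions + 1):
        parts.append(f"Q{i}: <auxiliary question {i}>\nA{i}: <answer {i}>\n")
    # Step 3: ask for a synthesized final answer.
    parts.append("Then combine those answers and finish with:\nFinal answer: <answer>")
    return "\n".join(parts)


prompt = build_square_prompt(
    "Which river flows through the capital of Austria?", num_questions=2
)
print(prompt)
```

The number of auxiliary questions is exposed as a parameter here so that it can be tuned per task; a suitable value would need to be found empirically.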

Because SQuARE relies solely on prompt engineering, it’s model-agnostic and compatible with any modern LLM that supports multi-turn or structured prompting. This makes it a low-friction upgrade for teams already using CoT or similar reasoning techniques.

Key Advantages

1. Proven Performance Gains

The paper's evaluations across multiple question-answering benchmarks, run with both Llama 3 and GPT-4o, report that SQuARE consistently outperforms standard CoT and rephrase-and-respond baselines. The gains are especially pronounced on tasks requiring multi-hop reasoning, ambiguity resolution, or domain-specific knowledge.

2. Zero Training Required

SQuARE is a plug-and-play prompting method. You don’t need to fine-tune your model, collect new data, or modify your deployment pipeline. Just swap in the SQuARE prompt template, and you’re ready to go.
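To make the "swap in the template" idea concrete, here is a minimal sketch in which the prompting style is just a dictionary key. The template texts, the `answer` helper, and the `echo_model` stand-in are all hypothetical; in practice `generate` would be whatever client call your pipeline already uses.

```python
from typing import Callable

# Illustrative templates; neither is copied from the paper or from RAG-FiT.
TEMPLATES = {
    "cot": "Question: {question}\nLet's think step by step, then give the final answer.",
    "square": (
        "Question: {question}\n"
        "First write {n} auxiliary questions and answer each one in order.\n"
        "Then use those answers to give the final answer."
    ),
}


def answer(question: str, generate: Callable[[str], str], style: str = "square", n: int = 3) -> str:
    """Format the chosen template and pass it to the existing model client."""
    # str.format ignores unused keyword arguments, so "cot" can skip {n}.
    prompt = TEMPLATES[style].format(question=question, n=n)
    return generate(prompt)


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call; returns the prompt so the swap is visible."""
    return prompt


print(answer("Who wrote the novel that inspired the film Blade Runner?", echo_model))
```

Because only the prompt string changes, the model, decoding settings, and deployment code stay as they are.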

3. Systematic Query Decomposition

By forcing the model to articulate implicit assumptions and knowledge gaps through auxiliary questions, SQuARE reduces the risk of shallow or incomplete reasoning. This leads to answers that are not only more accurate but also more interpretable.
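One practical way to use that interpretability is to separate the auxiliary question/answer pairs from the final answer so they can be logged or reviewed. The sketch below assumes the model was asked to label its output with `Q1:`, `A1:`, ... and `Final answer:`; that layout and the `parse_square_output` name are assumptions for illustration, and real model output may need more forgiving parsing.

```python
import re
from typing import List, Tuple


def parse_square_output(text: str) -> Tuple[List[Tuple[str, str]], str]:
    """Split a response into (auxiliary question, answer) pairs and the final answer."""
    # Assumes a hypothetical "Q1:/A1:/Final answer:" layout, one item per line.
    questions = dict(re.findall(r"^Q(\d+):\s*(.+)$", text, flags=re.MULTILINE))
    answers = dict(re.findall(r"^A(\d+):\s*(.+)$", text, flags=re.MULTILINE))
    # Pair each question with the answer that carries the same number.
    pairs = [
        (questions[k].strip(), answers.get(k, "").strip())
        for k in sorted(questions, key=int)
    ]
    final = re.search(r"^Final answer:\s*(.+)$", text, flags=re.MULTILINE)
    return pairs, (final.group(1).strip() if final else "")


sample = (
    "Q1: What is the capital of Austria?\n"
    "A1: Vienna.\n"
    "Q2: Which river flows through Vienna?\n"
    "A2: The Danube.\n"
    "Final answer: The Danube"
)
pairs, final_answer = parse_square_output(sample)
for q, a in pairs:
    print(f"{q} -> {a}")
print("Final:", final_answer)
```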

4. Open Source and Easy to Use

The implementation is publicly available in the RAG-FiT repository under the square branch. It comes with ready-to-run inference scripts, configuration files (in the CoT folder), and tools to collect and analyze results—making experimentation fast and reproducible.

Ideal Use Cases

SQuARE shines in scenarios where reasoning depth and answer reliability are critical. Consider adopting it if your application involves:

- Technical support systems that must reason through layered user issues.

- Research assistants that synthesize findings from complex scientific questions.

- Educational tools that explain multi-step problem solving (e.g., math, logic, or legal reasoning).

- Enterprise QA pipelines where incorrect or superficial answers carry high risk.

It’s less beneficial for simple, factual lookups (e.g., “What’s the capital of France?”), but becomes increasingly valuable as question complexity grows.

Getting Started

To try SQuARE in your own projects:

- Clone the repository:

git clone https://github.com/IntelLabs/RAG-FiT.git && cd RAG-FiT && git checkout square

- Install the library:

pip install -e .

- Run inference using the provided configurations:

python inference.py -cp configs/cot -cn square_prompt_config

- Collect and analyze results:

python collect_data.py

No fine-tuning is needed for basic usage—just run inference with the SQuARE prompt structure. Advanced users can also integrate it into RAG-FiT’s full training and evaluation pipeline if they wish to combine it with retrieval-augmented strategies.

Limitations and Considerations

While powerful, SQuARE is not a silver bullet:

- It is a prompting technique, not a model. Its effectiveness depends on the base LLM’s inherent reasoning and knowledge capabilities.

- The multi-step process can increase latency and token usage, which may matter in real-time or cost-sensitive applications.

- Benefits are most evident on complex questions; for simple queries, the overhead may not be justified.

- Although hosted in the RAG-FiT framework—a toolkit for retrieval-augmented fine-tuning—SQuARE itself does not involve retrieval. It focuses purely on internal reasoning enhancement.
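The latency and token-usage point can be made concrete with a back-of-the-envelope estimate. Every number below is a made-up placeholder rather than a measurement, and `estimate_output_tokens` is a hypothetical helper; substitute figures observed in your own runs.

```python
def estimate_output_tokens(num_questions: int, tokens_per_pair: int, final_answer_tokens: int) -> int:
    """Rough output size: N auxiliary question/answer pairs plus the final answer."""
    return num_questions * tokens_per_pair + final_answer_tokens


# Placeholder figures for illustration only, not measurements.
direct = estimate_output_tokens(num_questions=0, tokens_per_pair=0, final_answer_tokens=40)
square = estimate_output_tokens(num_questions=3, tokens_per_pair=60, final_answer_tokens=40)

print(f"direct answer: {direct} tokens, with 3 auxiliary questions: {square} tokens")
print(f"overhead factor: {square / direct:.1f}x")
```

Since generation time typically grows with the number of output tokens, an estimate like this gives a first indication of whether the extra reasoning fits a latency or cost budget.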

Summary

SQuARE offers a simple yet powerful way to elevate LLM reasoning without retraining or infrastructure changes. By prompting models to ask and answer their own guiding questions, it fosters deeper, more structured thinking that translates into better answers on complex tasks. With open-source code, clear documentation, and demonstrated gains across leading models, it’s a compelling addition to any technical team’s prompting toolkit—especially those working on knowledge-intensive applications where reliability matters.

If you’re already using Chain-of-Thought and looking for your next upgrade, SQuARE is worth a serious look.