Overview

Step1X-Edit is a state-of-the-art open-source framework for general-purpose image editing that delivers performance comparable to leading proprietary models like GPT-4o and Gemini 2 Flash, without requiring access to closed APIs or black-box systems. Built on a unified multimodal architecture, it combines a multimodal large language model (MLLM) that interprets user instructions with a diffusion decoder that produces accurate, visually coherent edits.

What truly sets Step1X-Edit apart is its grounding in real-world usage. Unlike many research prototypes trained on synthetic or overly simplistic prompts, Step1X-Edit was developed using a high-quality dataset derived from authentic user editing requests and evaluated on GEdit-Bench, a novel benchmark explicitly designed to reflect genuine, diverse, and complex editing scenarios. This ensures the model excels not just in controlled lab settings, but in practical applications—from e-commerce photo retouching to creative design automation and content moderation workflows.

For developers, researchers, and AI practitioners, Step1X-Edit offers unprecedented accessibility: full model weights, training scripts, evaluation tools, and ecosystem integrations are all publicly available under the Apache 2.0 license. Whether you’re prototyping a custom image editor, fine-tuning for domain-specific tasks (like anime character refinement), or integrating editing capabilities into a larger multimodal pipeline, Step1X-Edit provides the flexibility, performance, and transparency needed to move fast—without vendor lock-in.

Why Step1X-Edit Closes the Gap with Proprietary Systems

Until recently, the most capable image editing models were exclusively available through closed commercial APIs. While models like GPT-4o demonstrated impressive instruction-following and visual reasoning, they offered no control, limited customization, and zero insight into internal behavior. Step1X-Edit directly addresses this gap by delivering near-parity performance in an open, inspectable, and modifiable framework.

Benchmarks confirm this claim. On GEdit-Bench—a rigorously curated evaluation suite scored by both GPT-4 and Qwen2.5-VL—Step1X-Edit consistently outperforms all existing open-source baselines and approaches the scores of leading proprietary systems across key dimensions:

- Semantic Correctness (SC): Does the edit accurately reflect the instruction?

- Perceptual Quality (PQ): Is the output visually plausible and artifact-free?

- Overall (O): Holistic human-like judgment of the edit.

For example, Step1X-Edit v1.1 achieves 7.65 (Q_SC) and 7.35 (Q_O) on GEdit-Bench, where the Q_ prefix appears to mark scores from the Qwen2.5-VL judge. The latest Step1X-Edit-v1p2-preview pushes these scores even higher, further narrowing the gap with commercial offerings.
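As a rough illustration of how per-edit SC and PQ ratings could be rolled up into benchmark-level numbers, the sketch below averages scores over a handful of samples. It assumes the overall score follows the VIEScore convention of taking the geometric mean of SC and PQ for each sample; the text above does not state the formula, and the ratings are invented for the example.

```python
import math
from statistics import mean


def overall_score(sc: float, pq: float) -> float:
    """Geometric mean of SC and PQ (a VIEScore-style assumption)."""
    return math.sqrt(sc * pq)


def aggregate(samples):
    """Average SC, PQ, and O over (sc, pq) pairs rated on a 0-10 scale."""
    sc_vals = [sc for sc, _ in samples]
    pq_vals = [pq for _, pq in samples]
    o_vals = [overall_score(sc, pq) for sc, pq in samples]
    return {"SC": mean(sc_vals), "PQ": mean(pq_vals), "O": mean(o_vals)}


# Invented ratings for three edits; these are not real benchmark data.
ratings = [(8.0, 8.0), (9.0, 4.0), (0.0, 9.0)]
report = aggregate(ratings)
print(report)
```

Under this assumption, an edit that ignores the instruction entirely (SC of 0) scores 0 overall no matter how clean the image looks, which is why SC and O tend to move together.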

Real-World Editing Grounded in Authentic User Needs

Many image editing models fail on practical tasks because they’re trained on idealized or templated prompts (“add sunglasses to person”). Step1X-Edit avoids this pitfall by leveraging a real-user-driven data generation pipeline that captures the ambiguity, specificity, and complexity of actual editing requests—such as “make her necklace look more luxurious by adding a ruby pendant” or “change the background to a rainy Tokyo street at night.”

This real-world grounding is formalized in GEdit-Bench, which evaluates models across hundreds of diverse, naturally phrased instructions spanning object insertion, attribute modification, scene transformation, and contextual reasoning. The result? A model that doesn’t just “follow prompts,” but understands intent—a critical distinction for production use cases.

Developer-Friendly Features and Ecosystem Integrations

Step1X-Edit is designed for real-world deployment, not just research demos. Key developer-centric features include:

Multi-Modal Editing + Text-to-Image Generation

Starting with v1.1, Step1X-Edit supports not only reference-based editing but also text-to-image (T2I) generation, making it a unified foundation for both creation and modification.

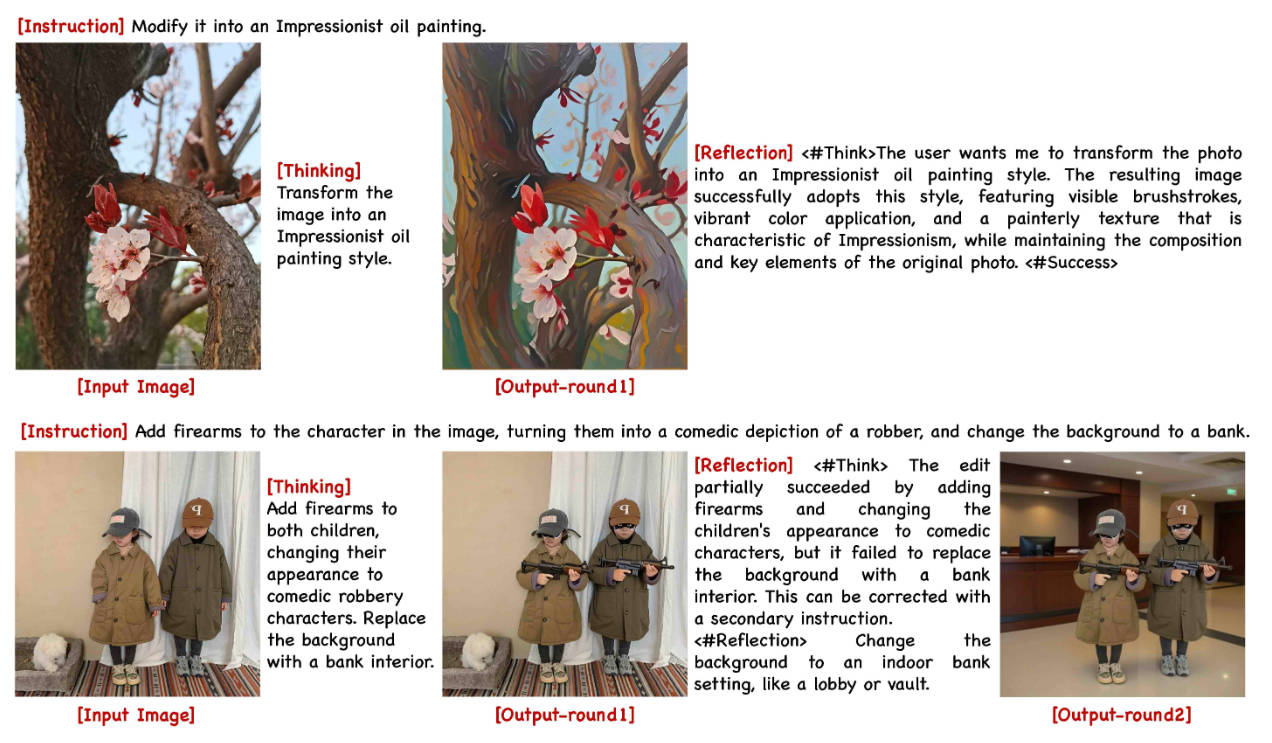

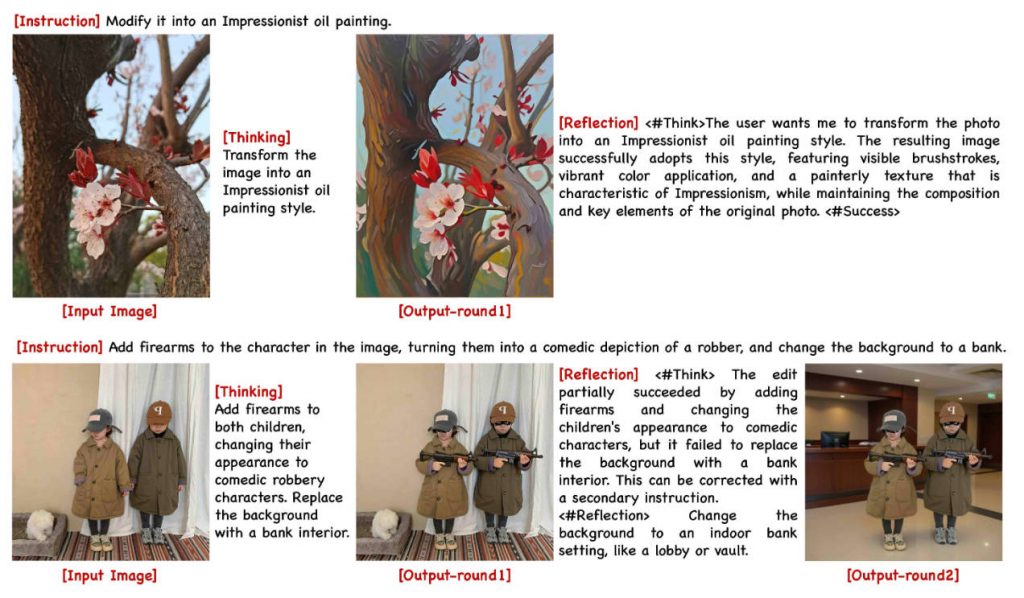

Reasoning and Reflection for Complex Edits (v1p2-preview)

The latest preview version introduces a native reasoning-edit architecture that decouples instruction interpretation from image generation. By enabling “thinking” and “reflection” modes, the model can:

- Reformat vague instructions into precise, executable steps

- Self-correct errors during or after generation

- Handle multi-step edits requiring conceptual or procedural knowledge

On the KRIS-Bench knowledge reasoning benchmark, the thinking + reflection variant achieves 62.94 in factual knowledge and 61.82 in conceptual knowledge—significant improvements over earlier versions.
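The thinking and reflection behaviour described above can be pictured as a small control loop. The sketch below is only a conceptual outline: `think`, `generate`, and `reflect` are hypothetical callables standing in for the model's internal stages, not functions from the Step1X-Edit codebase, and images are represented as plain strings.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EditResult:
    image: str     # stand-in for real image data
    plan: str      # the plan that produced this image
    attempts: int  # number of generation rounds used


def edit_with_reflection(
    image: str,
    instruction: str,
    think: Callable[[str], str],
    generate: Callable[[str, str], str],
    reflect: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> EditResult:
    """Run think -> generate -> reflect until the critic has no complaints."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    plan = think(instruction)  # turn a vague request into explicit steps
    last = None
    for attempt in range(1, max_rounds + 1):
        candidate = generate(image, plan)
        last = EditResult(candidate, plan, attempt)
        feedback = reflect(candidate, instruction)
        if feedback is None:  # critic is satisfied
            return last
        plan = f"{plan}; fix: {feedback}"  # fold the critique into the plan
    return last  # round budget exhausted: return the final attempt


# Toy stand-ins so the loop can be run end to end.
def toy_think(instruction: str) -> str:
    return f"plan({instruction})"


def toy_generate(image: str, plan: str) -> str:
    return f"{image}|{plan}"


def toy_reflect(candidate: str, instruction: str) -> Optional[str]:
    return None if "fix:" in candidate else "pendant missing"


result = edit_with_reflection(
    "photo.png", "add a ruby pendant", toy_think, toy_generate, toy_reflect
)
print(result.attempts, result.plan)
```

Each extra round costs another full generation pass, which is consistent with reflection modes trading latency for accuracy.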

Broad Ecosystem Compatibility

Step1X-Edit integrates seamlessly with popular AI tooling:

- Diffusers: Official pipeline support via custom branches (e.g., `Step1XEditPipelineV1P2`)

- ComfyUI: Community-built plugins enable drag-and-drop workflow integration

- Gradio: One-click local demo for rapid prototyping

- LoRA Fine-tuning: Customize the model on a single 24GB GPU (e.g., for anime hand correction)

- FP8 Quantization & CPU Offloading: Used together, cut peak VRAM at 1024px from 49.8 GB to 18 GB (roughly 64% less)
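To give a feel for why LoRA fine-tuning can fit on a single GPU, the sketch below compares trainable parameter counts for one linear layer with and without a low-rank adapter. The layer width and rank are illustrative assumptions, not Step1X-Edit's actual configuration.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights for a LoRA adapter: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


# Hypothetical sizes chosen only for illustration.
d_in = d_out = 3072
rank = 64

full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
ratio = lora / full
print(f"full={full:,} lora={lora:,} ratio={ratio:.3f}")
```

With these numbers the adapter trains about 4% of the layer's weights, so gradients and optimizer state shrink by a similar factor while the frozen base weights stay untouched.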

Solving Key Pain Points in Image Editing

Step1X-Edit directly tackles three persistent challenges in the field:

- Poor Instruction Following: Many models ignore or misinterpret nuanced edits. Step1X-Edit’s MLLM backbone excels at parsing complex, natural-language instructions.

- Low Edit Fidelity: Outputs often suffer from artifacts, semantic mismatches, or style inconsistencies. The diffusion decoder ensures photorealistic, spatially coherent results.

- Lack of Open Alternatives: Until now, high-quality editing required closed APIs. Step1X-Edit provides full transparency and control—ideal for privacy-sensitive or regulated applications.

By unifying perception, reasoning, and generation in one framework, Step1X-Edit eliminates the need for brittle multi-model pipelines or manual post-processing.

Getting Started: Practical Entry Points

You don’t need a data center to use Step1X-Edit. The project offers multiple on-ramps:

- Try Online: Use the official Gradio demo to test edits instantly

- Run Locally: Execute `bash scripts/run_examples.sh` with pre-downloaded weights

- Reduce VRAM: Enable `--quantized` (FP8) or `--offload` flags to run on 18–25GB GPUs

- Fine-tune Custom Behavior: Use the provided LoRA scripts to adapt the model to niche domains (e.g., medical imaging, fashion design) with minimal resources

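For enterprise or research deployments, advanced optimizations like TeaCache (which reuses cached model outputs across similar diffusion timesteps instead of recomputing them) and xDiT (a multi-GPU parallel inference engine that supports sequence parallelism) enable high-throughput inference, even at 1024px resolution.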
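The idea behind TeaCache-style acceleration can be sketched in a few lines: track how much a timestep-dependent signal changes between denoising steps, and when the accumulated change stays below a threshold, reuse the previously computed residual instead of running the transformer blocks again. The code below is a simplified toy on plain Python lists; the class name, threshold, and signal values are assumptions for illustration, and the published method additionally rescales the distance with a fitted polynomial, which is omitted here.

```python
from typing import Callable, List, Optional

Vector = List[float]


def rel_l1(curr: Vector, prev: Vector) -> float:
    """Relative L1 distance between two signals."""
    num = sum(abs(c - p) for c, p in zip(curr, prev))
    den = sum(abs(p) for p in prev) or 1.0
    return num / den


class StepCache:
    """Skip the expensive blocks while the timestep signal barely changes."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.prev_signal: Optional[Vector] = None
        self.cached_residual: Optional[Vector] = None
        self.accumulated = 0.0
        self.computed_steps = 0

    def step(self, hidden: Vector, signal: Vector,
             blocks: Callable[[Vector], Vector]) -> Vector:
        have_cache = self.prev_signal is not None and self.cached_residual is not None
        if have_cache:
            self.accumulated += rel_l1(signal, self.prev_signal)
        reuse = have_cache and self.accumulated < self.threshold
        self.prev_signal = signal
        if reuse:
            # Cheap path: apply the residual saved from the last full pass.
            return [h + r for h, r in zip(hidden, self.cached_residual)]
        # Expensive path: run the blocks, then refresh the cache.
        out = blocks(hidden)
        self.cached_residual = [o - h for o, h in zip(out, hidden)]
        self.accumulated = 0.0
        self.computed_steps += 1
        return out


# Toy run: the signal drifts slowly, so most steps hit the cache.
cache = StepCache(threshold=0.05)
hidden = [1.0]
for t in range(10):
    signal = [1.0 + 0.01 * t]
    hidden = cache.step(hidden, signal, lambda h: [x * 0.9 for x in h])
print(cache.computed_steps, "full passes out of 10 steps")
```

A larger threshold skips more steps and runs faster, at the cost of drifting further from the uncached result.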

Hardware and Performance Trade-offs

While the full-precision model requires ~49.8GB VRAM at 1024px resolution, practical optimizations dramatically lower the barrier:

| Configuration | Peak VRAM (1024px) | Inference Time (28 steps) |
|---|---|---|
| Full Precision | 49.8 GB | 22 s |
| FP8 Quantized | 34 GB | 25 s |
| FP8 + CPU Offload | 18 GB | 51 s |
| FP8 + xDiT (4 GPUs) + TeaCache | 54.2 GB | 5.82 s |

This flexibility allows users to balance speed, quality, and hardware constraints, whether running on a single 24GB consumer GPU or a multi-GPU server.
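The trade-off in the table can be turned into a simple selection rule: among the single-GPU configurations that fit in the available memory, pick the fastest. The sketch below hard-codes the table's figures; the mapping of each row to the `--quantized` and `--offload` flags is an assumption based on the flag names mentioned earlier, and the helper itself is hypothetical rather than part of the project.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Config:
    name: str
    peak_vram_gb: float      # peak VRAM at 1024px, from the table
    seconds: float           # inference time for 28 steps, from the table
    flags: Tuple[str, ...]   # assumed flag mapping, not confirmed by the source


SINGLE_GPU_CONFIGS = [
    Config("Full Precision", 49.8, 22.0, ()),
    Config("FP8 Quantized", 34.0, 25.0, ("--quantized",)),
    Config("FP8 + CPU Offload", 18.0, 51.0, ("--quantized", "--offload")),
]


def pick_config(available_vram_gb: float) -> Optional[Config]:
    """Return the fastest configuration whose peak VRAM fits, or None."""
    fitting = [c for c in SINGLE_GPU_CONFIGS if c.peak_vram_gb <= available_vram_gb]
    if not fitting:
        return None
    return min(fitting, key=lambda c: c.seconds)


for vram in (16, 24, 40, 80):
    choice = pick_config(vram)
    print(vram, "GB ->", choice.name if choice else "no single-GPU option fits")
```

The rule leaves no headroom for other processes on the GPU, so in practice it would be reasonable to subtract a safety margin from the available memory before calling it.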

When (and When Not) to Use Step1X-Edit

Ideal for:

- Building custom image editing SaaS tools

- Automating design workflows (e.g., ad personalization, product mockups)

- Research on instruction-based visual generation

- Fine-tuning for specialized domains (e.g., anime, medical visuals)

Current limitations:

- Batch size is effectively 1 in standard inference mode

- High-resolution editing still demands significant VRAM without quantization/offloading

- Reasoning/reflection modes (v1p2-preview) are experimental and may increase latency

Nonetheless, Step1X-Edit represents one of the most capable and accessible open-source image editing frameworks available today—bridging the gap between academic innovation and industrial applicability.

Summary

Step1X-Edit democratizes high-fidelity, instruction-driven image editing by approaching the performance of leading proprietary models while remaining fully open-source, transparent, and extensible. Grounded in real user needs, equipped with developer-friendly tooling, and optimized for diverse hardware setups, it empowers teams to build, customize, and deploy advanced editing capabilities without reliance on closed APIs. For anyone seeking a practical, capable image editing solution that can be run and modified in-house, Step1X-Edit is a compelling choice.