Creating visually coherent sequences of images or videos from text prompts has long been a bottleneck in AI-powered storytelling. While modern diffusion models excel at generating single high-quality images, they often fail to maintain character identity, object consistency, or scene continuity across multiple frames—especially over long sequences. This inconsistency severely limits their practical use in applications like comic creation, film storyboarding, or animated narrative generation.

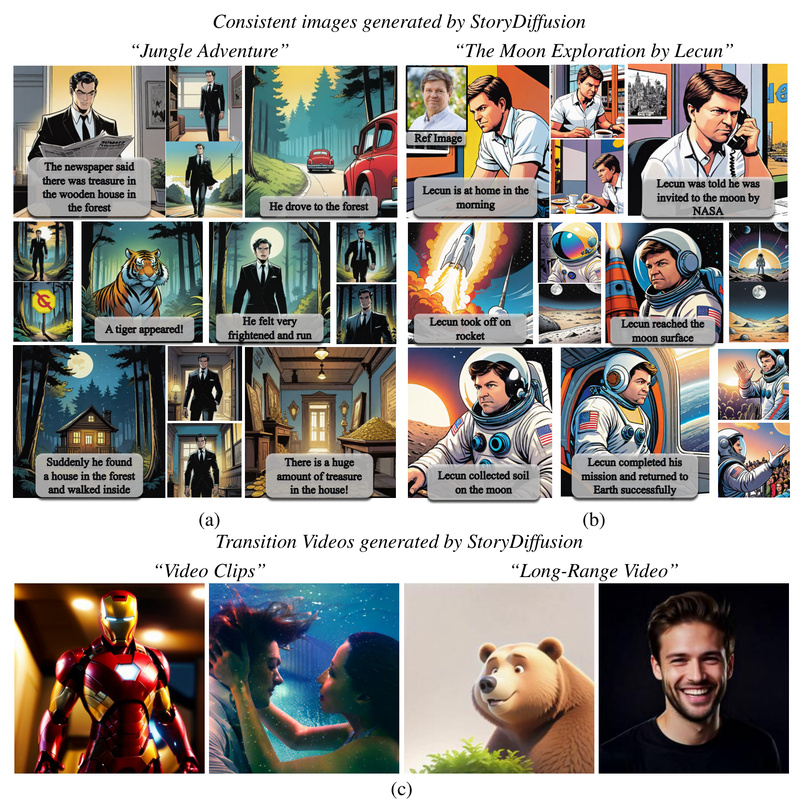

Enter StoryDiffusion, an open-source framework that directly tackles this challenge. By introducing a novel architectural modification called Consistent Self-Attention, StoryDiffusion enables existing text-to-image diffusion models—such as Stable Diffusion 1.5 and SDXL—to generate sequences of images with remarkably stable subjects and details, without any fine-tuning or retraining. Moreover, it extends this capability to long-range video generation through a Semantic Motion Predictor that operates in a compressed image semantic space, enabling smooth, temporally coherent transitions between keyframes.

Whether you’re a researcher exploring narrative AI, a developer building creative tools, or a digital artist prototyping visual stories, StoryDiffusion offers a zero-shot, plug-and-play solution to one of generative AI’s most persistent pain points: visual inconsistency across time or panels.

Why Visual Consistency Matters in Generative Storytelling

Traditional diffusion models process each prompt independently. Even with identical character descriptions (“a red-haired elf in blue robes”), minor stochastic variations during generation can result in drastically different appearances across frames—changing facial features, clothing, or even species. This makes them unreliable for any application requiring continuity, such as:

- Multi-panel comics where characters must look the same across scenes

- Film pre-visualization where camera moves reveal the same environment

- Game concept art sequences showing character progression

Without architectural or procedural intervention, achieving consistency typically requires expensive fine-tuning (e.g., DreamBooth) or complex prompt engineering—neither scalable nor accessible to most users. StoryDiffusion changes this by operating directly on the attention mechanism, the neural “glue” that binds visual elements together.

Core Innovations That Make StoryDiffusion Work

Consistent Self-Attention: Hot-Swappable Consistency for Any SD Model

At the heart of StoryDiffusion is Consistent Self-Attention, a new way of computing attention during the denoising process. Instead of allowing each image in a sequence to attend only to itself, the method shares cross-frame attention keys and values across all images being generated simultaneously. This forces the model to align visual features—like facial structure, pose, or object shape—across the entire batch.

Critically, this module is hot-pluggable: it integrates into existing Stable Diffusion pipelines with minimal code changes and requires no retraining. Users simply provide 3–6 related text prompts (e.g., describing sequential story beats), and StoryDiffusion ensures the generated images share consistent visual semantics. Empirical results show dramatic improvements in character and detail stability compared to vanilla SDXL or SD1.5.

Semantic Motion Predictor: Long Video Generation Beyond Latent Interpolation

For video, most existing methods rely on interpolating latent vectors between two frames. While fast, this often causes flickering, warping, or identity drift—especially over long durations.

StoryDiffusion introduces a Semantic Motion Predictor that estimates motion not in latent space, but in a higher-level semantic image representation. Trained to predict how visual semantics shift between two condition images, this module generates smooth, plausible motion trajectories that preserve subject identity and scene integrity. This enables two-stage long video generation: first, produce a sequence of consistent keyframes using Consistent Self-Attention; then, animate them into a temporally stable video using semantic motion prediction.

Practical Use Cases Where StoryDiffusion Excels

- AI-Assisted Comic Creation: Generate full comic strips where characters retain consistent appearance across panels, based solely on narrative prompts.

- Film and Animation Previs: Rapidly prototype storyboards with visual continuity, ideal for early-stage concept testing.

- Game Narrative Prototyping: Visualize character arcs or quest sequences with stable asset representation.

- Long-Form Video Storytelling: Create multi-scene AI-generated videos (e.g., short films or ads) where subjects remain recognizable throughout.

Because it works out-of-the-box with popular models, adoption requires no ML expertise—just clear prompts and a compatible GPU.

Getting Started: Simple, Low-VRAM, and Open Source

StoryDiffusion is designed for accessibility:

- Minimal setup: Install via standard Python/Conda environment with PyTorch ≥ 2.0.

- Low VRAM support: A dedicated

gradio_app_sdxl_specific_id_low_vram.pyscript enables usage on GPUs with ≥20GB memory (e.g., A10). - Jupyter and Gradio interfaces: Choose between notebook-based exploration or a user-friendly web demo.

- Prompt guidance: For best results, provide at least 3–6 sequential text prompts describing your story’s progression.

While the video generation model weights and full training code are still pending release, the comic/image generation module is fully available and ready for integration.

Current Limitations and Future Roadmap

As of now, users should note:

- The Semantic Motion Predictor’s source code and pretrained weights are not yet public, limiting full end-to-end video generation to inference with precomputed models.

- Consistent generation requires multiple input prompts, making it less suited for single-image tasks.

- Performance scales with prompt quality—vague or disjointed prompts reduce consistency.

However, the team has already released core comic generation capabilities, with video components expected soon. The modular design also invites community extension to other diffusion backbones.

Summary

StoryDiffusion solves a fundamental problem in generative AI: maintaining visual consistency across time and frames without costly retraining. By rethinking self-attention and motion modeling, it enables zero-shot, long-range visual storytelling with existing diffusion models. For creators, researchers, and developers seeking reliable, coherent image sequences or videos from text, it represents a significant leap forward in practical, deployable AI storytelling tools. With its open-source nature, low-barrier entry, and strong empirical results, StoryDiffusion is well-positioned to become a go-to framework for narrative generation in the diffusion era.