Generating high-quality images from text prompts has become remarkably powerful thanks to diffusion models like Stable Diffusion. Yet, for many practical applications—such as visual design, prototyping, or creative direction—text alone often falls short. It’s hard to precisely specify object layout, spatial relationships, or visual style using words alone, especially when dealing with complex scenes.

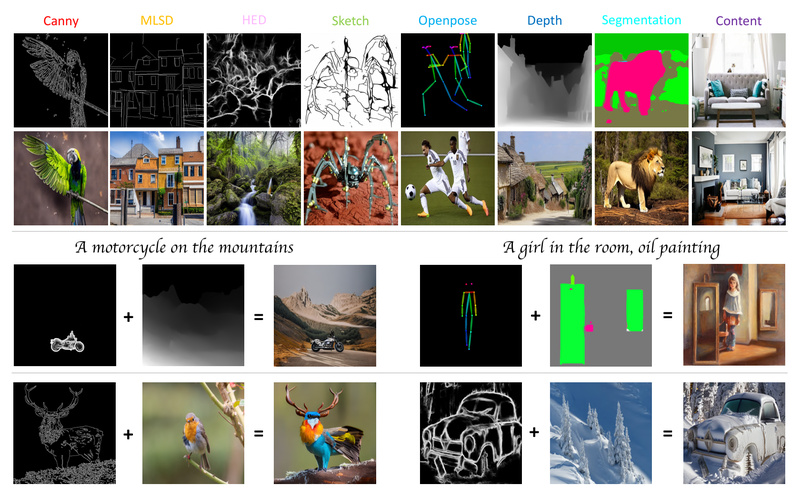

Uni-ControlNet addresses this gap by offering a single, unified framework that lets you guide image generation using multiple visual conditions simultaneously—like edge maps, depth estimates, segmentation masks, sketches, or even global reference images—without retraining the entire base model. Built on top of Stable Diffusion v1.5, it introduces just two lightweight, composable adapters to handle any combination of local and global controls. This design drastically cuts down training costs, model size, and deployment complexity while significantly boosting controllability.

Why Existing Approaches Fall Short

Traditional text-to-image models rely solely on natural language prompts, which struggle to encode fine-grained spatial or structural information. While earlier control methods like ControlNet made progress by conditioning diffusion models on single visual signals (e.g., Canny edges), they require a separate trained model for each control type. Want to combine pose, depth, and segmentation? That means managing multiple large models, joint training, and complex orchestration—hardly practical for real-world workflows.

Worse, most of these approaches demand full fine-tuning or large adapter sets that scale linearly with the number of conditions, making them expensive to train and hard to maintain.

Uni-ControlNet’s Core Innovation: Two Adapters to Rule Them All

Uni-ControlNet rethinks this paradigm. Instead of one adapter per condition, it uses only two universal adapters:

- Local Control Adapter: Handles pixel-aligned conditions like edges (Canny, HED, MLSD), human poses (OpenPose), depth maps (Midas), or semantic segmentations.

- Global Control Adapter: Processes holistic image-level signals, such as CLIP-based content or style embeddings from a reference image.

Crucially, the number of adapters stays fixed at two, no matter how many control signals you combine. Want to use five local conditions and two global ones? Still just two adapters. This design enables composability by construction—you can mix and match controls freely at inference time.

Even better, both adapters are trained separately on a frozen Stable Diffusion backbone. There’s no need for joint training or retraining the entire diffusion model, which saves GPU hours, reduces storage overhead, and simplifies the development pipeline.

Real-World Use Cases Where Uni-ControlNet Shines

Uni-ControlNet excels in scenarios where precision, flexibility, and multi-modal guidance matter:

- Concept Art & Storyboarding: Combine rough sketches with depth cues and text prompts like “cyberpunk alley at night” to generate consistent, structured scenes.

- Product Design: Guide mockup generation using a hand-drawn sketch (local control) and a brand’s visual reference image (global control) to ensure both form and style alignment.

- Creative Scene Composition: Mix conditions from disparate sources—e.g., a deer mask, a sofa edge map, a forest depth map, and a snowy reference image—to synthesize “a deer lounging on a sofa in a snowy forest,” something nearly impossible with text alone.

- Architectural Visualization: Use floorplan edges + depth maps + style references to generate photorealistic interior renders guided by both geometry and aesthetic.

In all these cases, Uni-ControlNet delivers higher fidelity to the intended layout and style than text-only or single-condition alternatives.

Getting Started: From Demo to Custom Training

Uni-ControlNet is designed for both quick experimentation and custom adaptation:

- Environment Setup: Create a conda environment using the provided

environment.yaml. - Pretrained Model: Download the official checkpoint (based on Stable Diffusion v1.5) and place it in

./ckpt/. - Interactive Demo: Run

python src/test/test.pyto launch a Gradio interface supporting seven local conditions (Canny, HED, OpenPose, etc.) and global content conditioning. - Custom Training (Optional):

- Prepare your data in the required folder structure (

images/,conditions/condition-N/, andanno.txt). - Use included annotators to extract condition maps (e.g., edge detectors).

- Train local and global adapters separately using

src/train/train.py. - Merge the two trained adapters into a unified model using the integration script.

- Prepare your data in the required folder structure (

This modular workflow makes it easy to adapt Uni-ControlNet to domain-specific tasks without deep infrastructure investment.

Limitations and Practical Considerations

While powerful, Uni-ControlNet has a few constraints to keep in mind:

- Base Model Dependency: It currently supports Stable Diffusion v1.5. Using it with SDXL or newer variants would require re-implementation or adaptation.

- Condition Map Quality: Generation quality depends heavily on the accuracy of input condition maps (e.g., clean edges, correct depth). Users must either use the provided annotators or supply high-quality external maps.

- Training Workflow: Local and global adapters must be trained independently and then integrated—a minor extra step, but one that requires careful path management.

- Data Organization: Custom training demands strict alignment between image IDs, annotations, and condition files across directories.

These are manageable trade-offs given the framework’s flexibility and efficiency gains.

Strategic Value for Technical Decision-Makers

For teams evaluating controllable image generation solutions, Uni-ControlNet offers a compelling total cost of ownership advantage:

- Reduced Model Proliferation: Replace multiple single-condition models with one unified system.

- Lower Compute Overhead: Fine-tune only two small adapters instead of full models.

- Future-Proof Composability: New control signals can be added without retraining existing components.

- Easier Deployment: A single inference pipeline handles arbitrary condition combinations.

This makes Uni-ControlNet particularly attractive for creative studios, design tools, AI-powered editing software, and research labs seeking scalable, controllable generation without infrastructure bloat.

Summary

Uni-ControlNet redefines what’s possible in controllable text-to-image synthesis by unifying diverse visual signals under a minimal, efficient architecture. It solves real pain points—imprecise prompts, model fragmentation, and training inefficiency—with an elegant two-adapter design that scales gracefully with complexity. Whether you’re prototyping new visual applications or enhancing existing generative workflows, Uni-ControlNet provides a practical, powerful path toward truly guided image creation.