In today’s fast-evolving AI landscape, most generative systems are built for a single task—whether that’s turning text into images, editing existing visuals, or describing images with words. This fragmented approach forces teams to manage multiple models, each with its own training pipeline, dependencies, and maintenance overhead. Versatile Diffusion (VD) changes this paradigm by offering the first unified multi-flow multimodal diffusion framework that seamlessly handles text-to-image, image-to-text, image variation, and text variation—all within a single model.

Developed by researchers at SHI Labs and introduced in the 2022 paper “Versatile Diffusion: Text, Images and Variations All in One Diffusion Model,” VD represents a significant step toward Universal Generative AI. Instead of deploying separate models for each modality or direction of generation, Versatile Diffusion integrates shared, swappable components that dynamically adapt based on input type and task. This architecture not only reduces engineering complexity but also unlocks novel capabilities that aren’t possible with isolated single-task models.

Why One Model Matters: Solving Fragmentation in Generative Workflows

Traditional diffusion pipelines—like those in DALL·E 2 or Stable Diffusion—are optimized for one-way generation, typically from text to image. To go in reverse (e.g., generate a caption from an image) or to support creative editing (e.g., blend two images with a text prompt), teams often stitch together multiple models or build custom pipelines. This leads to:

- Redundant infrastructure: Multiple models consume more memory, storage, and compute.

- Inconsistent outputs: Different models may use incompatible latent spaces or tokenization schemes.

- Limited cross-modal reasoning: You can’t easily disentangle style from content or fuse semantic cues from both image and text without bespoke engineering.

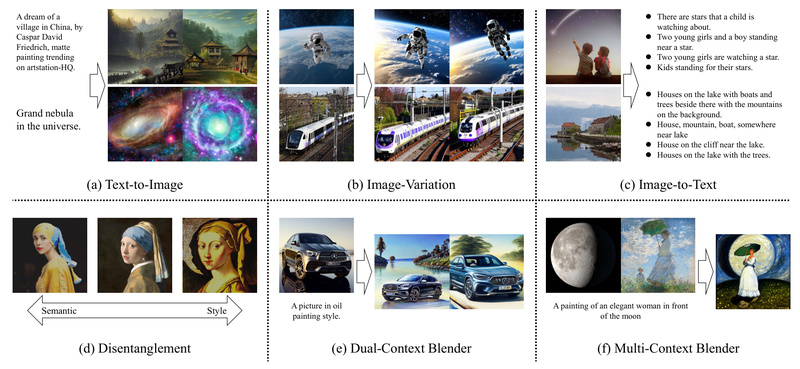

Versatile Diffusion directly addresses these pain points by unifying four core flows into one coherent system:

- Text-to-Image (T2I): Generate images from natural language prompts.

- Image-to-Text (I2T): Produce descriptive or creative captions from input images.

- Image Variation (I2I): Create diverse, high-quality variants of a given image.

- Text Variation (T2T): Rewrite or paraphrase input text while preserving meaning.

Crucially, these aren’t separate models glued together—they’re native capabilities of a single architecture with shared global layers and modular data/context encoders.

Advanced Features That Go Beyond Basic Generation

Beyond standard tasks, Versatile Diffusion enables creative and research-oriented applications that stem from its unified design:

Semantic-Style Disentanglement

VD can separate an image’s semantic content (what’s depicted) from its style (how it’s rendered). This allows users to, for example, keep the subject of a photo while changing its artistic style—without retraining or fine-tuning.

Multi-Context Blending

The built-in WebUI supports dual- and multi-context image blending, where up to four images (with optional masks) and a text prompt can guide the generation of a single coherent output. This is particularly valuable for concept art, advertising mockups, or UI/UX prototyping where visual consistency across elements is key.

Latent Cross-Modal Editing

Although currently offline in the WebUI, the framework originally supported image-to-text-to-image (I2T2I) editing—allowing users to modify an image by first describing it, editing the description, and regenerating. This hints at powerful future workflows where natural language becomes a universal editing interface for visuals.

Practical Use Cases for Technical Leaders and Product Teams

Versatile Diffusion is especially compelling for organizations that work across multimodal AI product development, including:

- AI-powered content studios that need both image generation and automatic captioning for digital assets.

- R&D teams exploring unified generative models, where testing cross-modal hypotheses (e.g., “Can visual style be transferred via text?”) requires a flexible foundation.

- Startups building creative tools, who benefit from reduced model footprint and the ability to ship multiple features (T2I, I2T, variations) from one codebase.

- Academic researchers investigating disentanglement, multimodal alignment, or universal generative frameworks.

By consolidating capabilities, VD lowers the barrier to experimenting with complex, multi-directional generative tasks—accelerating both prototyping and production deployment.

Getting Started: Simple Setup, Immediate Experimentation

The project is fully open-source and comes with a user-friendly WebUI (app.py) that makes experimentation accessible even to engineers without deep diffusion expertise.

Setup in Minutes

After cloning the repository from https://github.com/SHI-Labs/Versatile-Diffusion, you can set up the environment with standard Python and PyTorch commands. The project supports both full-precision (float32) and half-precision (float16) pretrained models—offering a trade-off between memory usage and fidelity.

Pretrained Models Ready to Use

The required model files (vd-four-flow-v1-0.pth and its -fp16 variant, along with VAE checkpoints) are available on Hugging Face. Once downloaded into the pretrained/ folder, launching the WebUI with python app.py gives instant access to:

- Text-to-image generation

- Image variation with semantic focus

- Dual- and multi-context blending

Note: The I2T2I editing feature is temporarily disabled in the current WebUI, but the underlying model still supports core I2T and T2I flows.

Current Limitations and Considerations

While Versatile Diffusion is groundbreaking in its unified approach, decision-makers should consider the following:

- Modality scope: As of now, VD supports only images and text. Future versions aim to incorporate audio, video, and 3D—but those are not yet available.

- Dataset dependency: Training relies on a filtered subset of LAION2B-en, which may influence domain coverage (e.g., bias toward English-language web content).

- Feature completeness: Some advanced capabilities (like latent I2T2I editing) are not currently exposed in the WebUI, though they may be accessible via direct API use.

These constraints don’t diminish VD’s value for image-text workflows—but they do set realistic expectations for teams evaluating it for production use.

Summary

Versatile Diffusion stands out not just for what it does, but how it rethinks the architecture of generative AI. By unifying text and image generation, understanding, and variation into a single, modular framework, it eliminates the need for task-specific models and unlocks creative synergies between modalities. For project leaders, researchers, and engineering teams looking to build or experiment with multimodal generative systems, VD offers a powerful, open-source foundation that reduces complexity while expanding creative and technical possibilities.