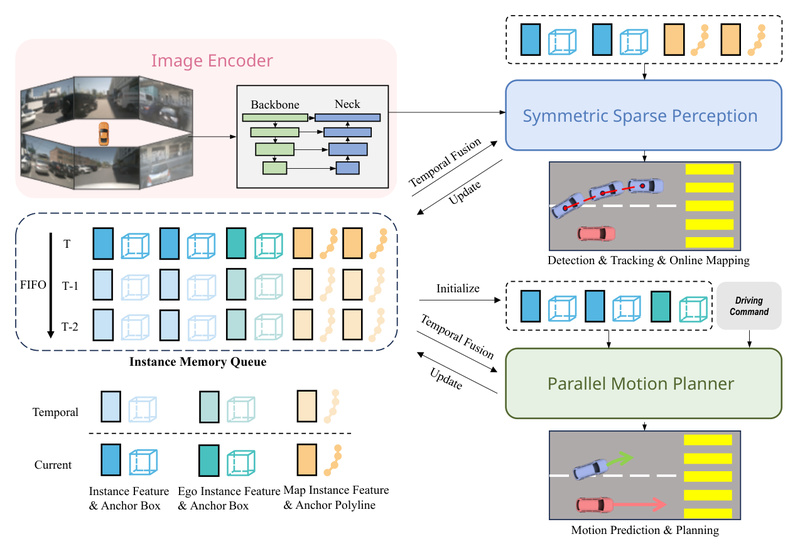

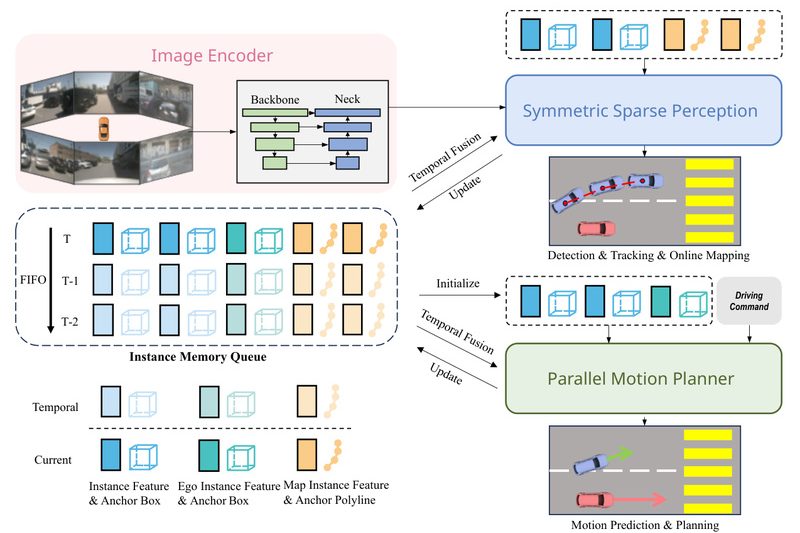

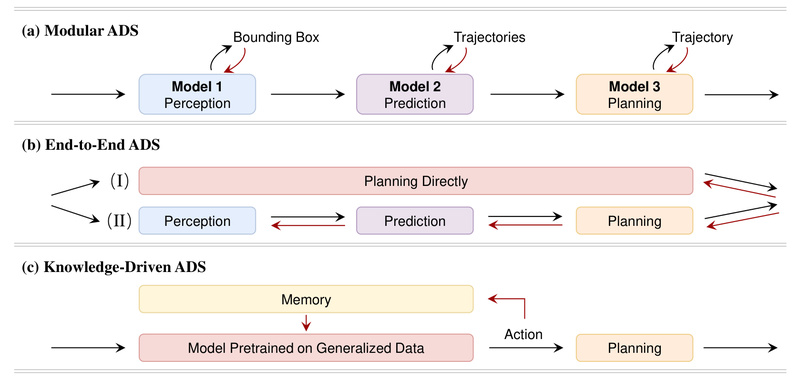

End-to-end autonomous driving systems promise a streamlined alternative to traditional modular pipelines—where perception, prediction, and planning are handled by separate…

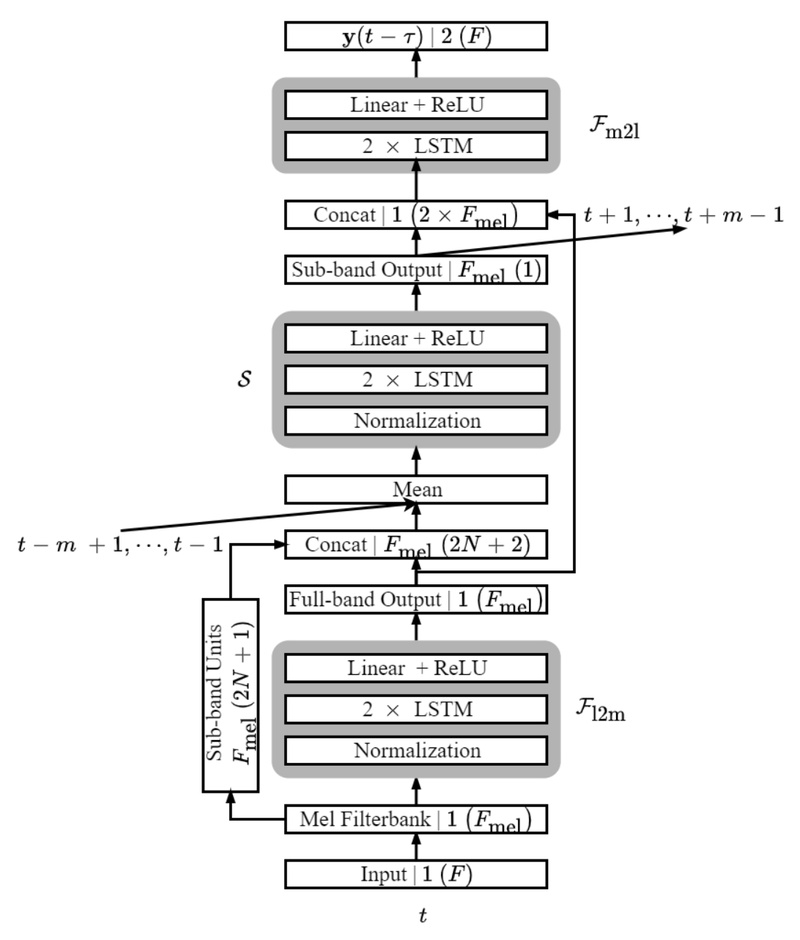

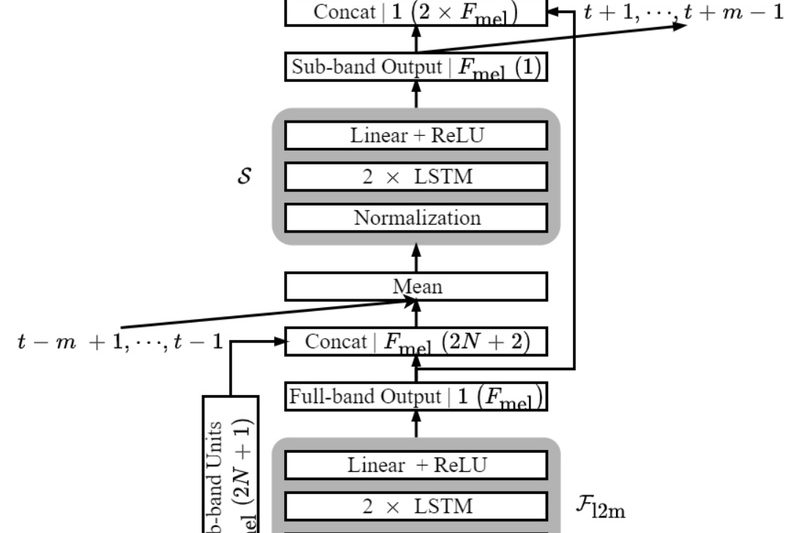

Fast FullSubNet: Real-Time Speech Enhancement with Minimal Latency and Power Consumption for Edge Devices

Fast FullSubNet addresses a critical challenge in modern audio applications: delivering high-quality, real-time speech enhancement on devices with strict constraints…

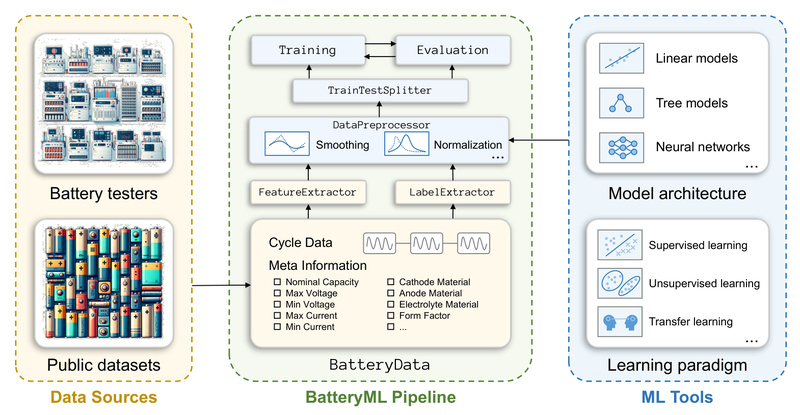

BatteryML: Accelerate Battery Degradation Prediction with an All-in-One Open-Source ML Platform

Battery degradation is a critical bottleneck in the deployment of electric vehicles (EVs), grid-scale energy storage, and portable electronics. Engineers…

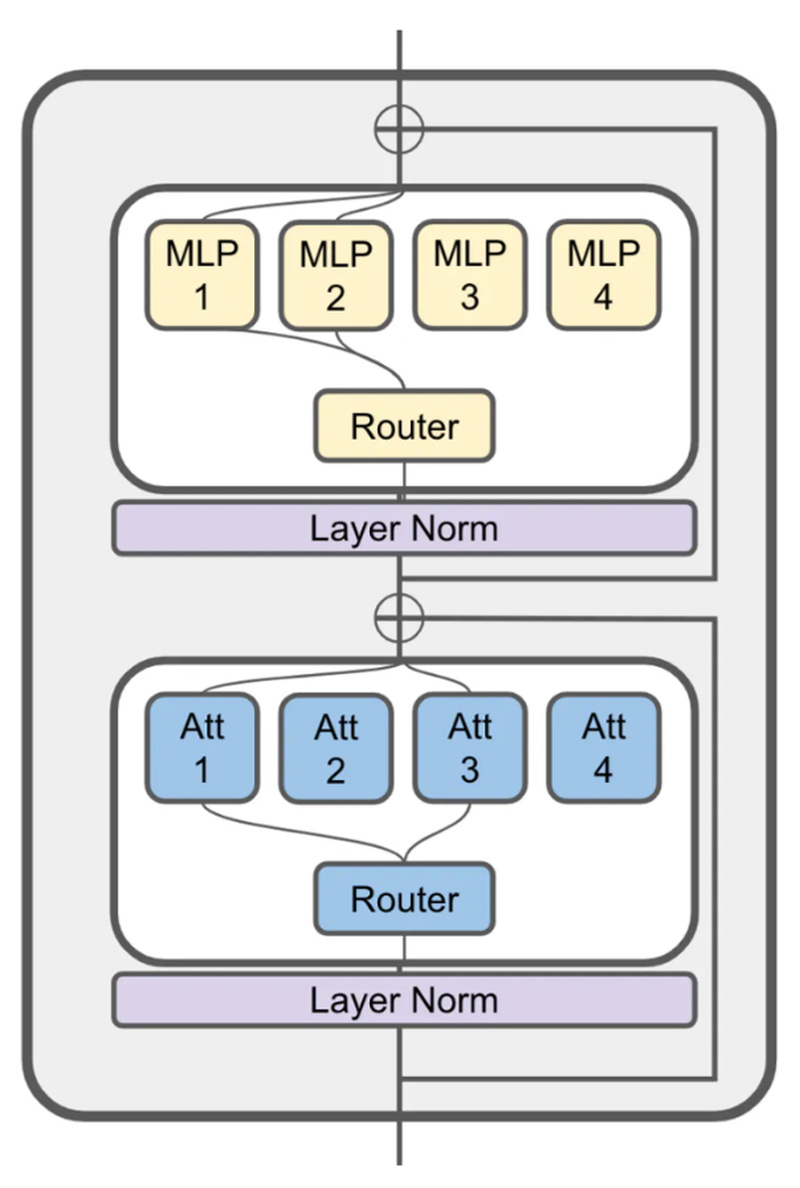

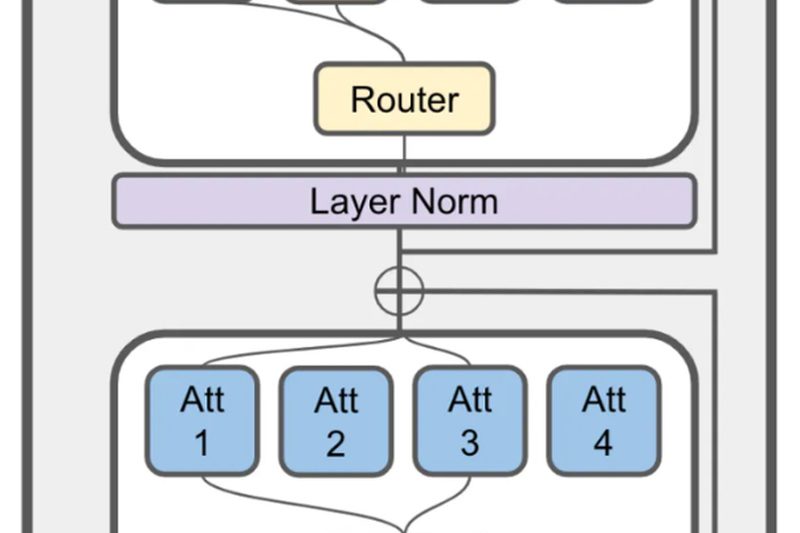

JetMoE: High-Performance LLMs Under $100K—Open, Efficient, and Accessible

Building powerful language models used to be the exclusive domain of well-funded tech giants. But JetMoE is changing that narrative.…

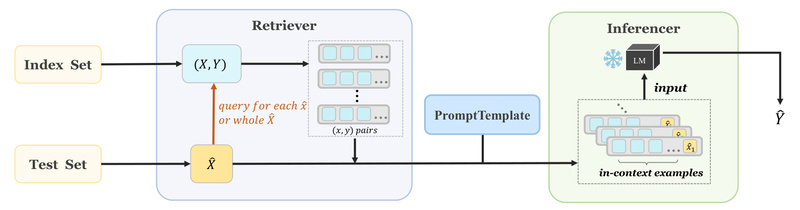

OpenICL: Simplify In-Context Learning for LLM Evaluation Without Retraining

Evaluating large language models (LLMs) on new tasks traditionally requires fine-tuning—a process that’s time-consuming, resource-intensive, and often impractical when labeled…

LimSim Series: Validate and Improve Autonomous Driving Systems with Realistic, Long-Term Urban Simulation

Validating autonomous driving systems (ADS) in realistic, complex urban environments is notoriously difficult. Real-world testing is expensive, risky, and often…

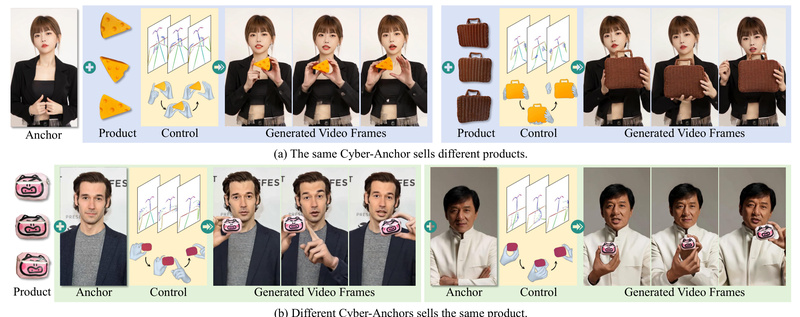

AnchorCrafter: Generate Realistic Product Promotion Videos with AI-Powered Human-Object Interaction

In the fast-evolving world of e-commerce and digital marketing, brands are under constant pressure to produce high-quality, engaging promotional videos—fast…

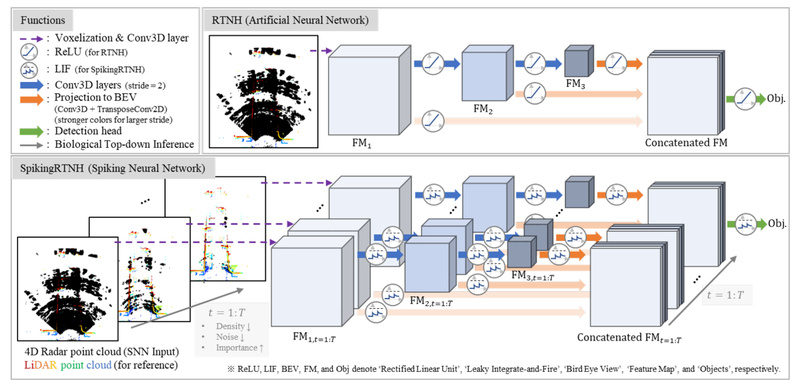

SpikingRTNH: Energy-Efficient 4D Radar Object Detection for Autonomous Vehicles in All Weather Conditions

Autonomous driving systems demand robust, real-time perception under all environmental conditions—but traditional deep learning models struggle with the high computational…

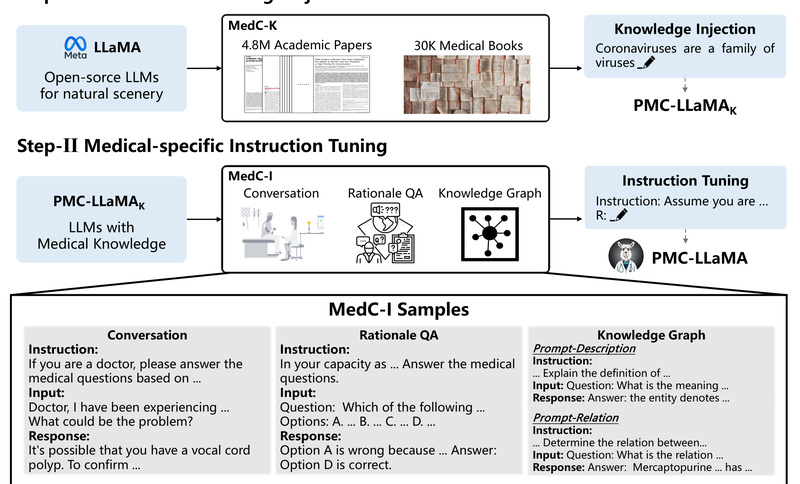

PMC-LLaMA: An Open-Source Medical LLM That Outperforms ChatGPT on Clinical Accuracy

PMC-LLaMA is an open-source large language model explicitly engineered for the medical domain. Unlike general-purpose LLMs—such as LLaMA-2 or even…

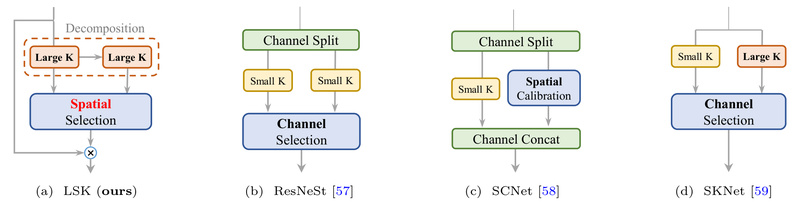

LSKNet: A Lightweight, High-Performance Backbone for Remote Sensing Object Detection, Segmentation, and Classification

Remote sensing imagery—captured from satellites, drones, or aircraft—presents unique challenges for computer vision systems. Objects are often small, densely packed,…