Imagine building a system that can understand 3D objects as intuitively as humans do—recognizing a chair from its point cloud, describing it in natural language, and matching it to 2D images, all without requiring costly human-labeled 3D-language pairs. That’s the promise of ULIP-2, a state-of-the-art multimodal pre-training framework introduced by Salesforce Research and accepted at CVPR 2024.

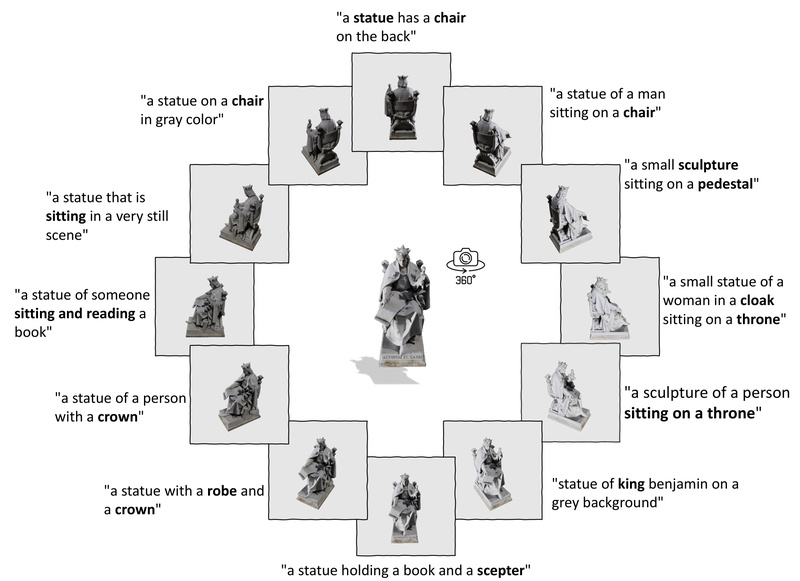

ULIP-2 unifies 3D point clouds, 2D images, and natural language into a single, scalable learning pipeline. Unlike earlier approaches that rely on manually curated or limited textual descriptions for 3D shapes, ULIP-2 leverages large multimodal models to automatically generate rich, holistic language descriptions directly from raw 3D data. This eliminates the need for human annotation entirely, making it possible to pre-train on massive 3D datasets like Objaverse and ShapeNet at scale.

For practitioners in robotics, AR/VR, industrial automation, or 3D content retrieval, ULIP-2 offers a practical path to high-performance 3D understanding—whether you’re doing zero-shot classification, fine-tuning on downstream tasks, or even generating captions from 3D scans.

Why ULIP-2 Stands Out

Fully Automated, Annotation-Free Pre-Training

Traditional multimodal 3D learning methods hit a ceiling due to the scarcity of aligned 3D-language data. Human-annotated descriptions are expensive, inconsistent, and hard to scale. ULIP-2 sidesteps this bottleneck by using powerful vision-language models to synthesize diverse, context-aware textual descriptions from 3D shapes alone—no labels required.

This automation enables training on millions of 3D objects, dramatically expanding the scope and diversity of multimodal 3D learning.
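The captioning step can be pictured as a small pipeline: render several views of a shape, caption each view with a vision-language model, and keep the resulting texts as that shape's language supervision. The sketch below is illustrative only. `render_views`, `fake_captioner`, and the evenly spaced view angles are hypothetical stand-ins for a real renderer and captioning model, and the code does not reproduce ULIP-2's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Triplet:
    """One tri-modal record: a shape ID, its view angles, and its captions."""
    shape_id: str
    view_angles: List[int]
    captions: List[str] = field(default_factory=list)


def render_views(shape_id: str, num_views: int) -> List[int]:
    """Hypothetical renderer stub: returns evenly spaced azimuth angles (degrees).

    A real pipeline would return rendered RGB images instead of angles.
    """
    step = 360 // num_views
    return [i * step for i in range(num_views)]


def build_triplet(
    shape_id: str,
    num_views: int,
    caption_view: Callable[[str, int], str],
) -> Triplet:
    """Caption every rendered view and keep the distinct captions in order."""
    angles = render_views(shape_id, num_views)
    captions = [caption_view(shape_id, angle) for angle in angles]
    # dict.fromkeys removes duplicate captions while preserving first-seen order.
    unique_captions = list(dict.fromkeys(captions))
    return Triplet(shape_id, angles, unique_captions)


def fake_captioner(shape_id: str, angle: int) -> str:
    """Stand-in for a vision-language captioning model (assumption)."""
    side = "front" if angle < 180 else "back"
    return f"a {shape_id} seen from the {side}"


triplet = build_triplet("chair", 4, fake_captioner)
print(triplet.view_angles)
print(triplet.captions)
```

Passing the captioner in as a callable keeps the pipeline independent of any particular model, so a real captioning model could replace the stub without touching `build_triplet`.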

Model-Agnostic Architecture

ULIP-2 isn’t tied to a single 3D backbone. It supports multiple popular architectures out of the box:

- PointNet++ (SSG)

- PointBERT

- PointMLP

- PointNeXt

This plug-and-play design means you can integrate ULIP-2 into your existing 3D pipeline without overhauling your model architecture. Want to use your own custom 3D encoder? ULIP-2 provides clear templates and interfaces to do so with minimal code changes.
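To make the plug-in idea concrete, here is a framework-free sketch of the general pattern: any encoder that maps a point cloud to a fixed-length feature vector can be wrapped by a projection into a shared embedding space, and the wrapper only needs to know the encoder's feature dimension. All names here (`PointEncoder`, `CentroidExtentEncoder`, `SharedSpaceWrapper`) are hypothetical and are not the ULIP-2 codebase's API, which is PyTorch-based; the random projection stands in for learned weights.

```python
import random
from typing import List, Protocol, Sequence

Point = Sequence[float]


class PointEncoder(Protocol):
    """Minimal interface a backbone must satisfy in this sketch."""
    feat_dim: int

    def encode(self, points: Sequence[Point]) -> List[float]: ...


class CentroidExtentEncoder:
    """Toy encoder: centroid plus bounding-box extent gives a 6-D feature."""
    feat_dim = 6

    def encode(self, points: Sequence[Point]) -> List[float]:
        columns = list(zip(*points))  # x, y, z coordinate columns
        centroid = [sum(c) / len(c) for c in columns]
        extent = [max(c) - min(c) for c in columns]
        return centroid + extent


class SharedSpaceWrapper:
    """Projects encoder features into a shared embedding space."""

    def __init__(self, encoder: PointEncoder, embed_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.encoder = encoder
        self.embed_dim = embed_dim
        # Projection matrix of shape [feat_dim, embed_dim]; random values
        # stand in for weights that would normally be learned.
        self.proj = [
            [rng.gauss(0.0, 1.0) for _ in range(embed_dim)]
            for _ in range(encoder.feat_dim)
        ]

    def embed(self, points: Sequence[Point]) -> List[float]:
        feat = self.encoder.encode(points)
        if len(feat) != self.encoder.feat_dim:
            raise ValueError("encoder output does not match its declared feat_dim")
        out = [
            sum(f * row[j] for f, row in zip(feat, self.proj))
            for j in range(self.embed_dim)
        ]
        norm = sum(x * x for x in out) ** 0.5 or 1.0
        return [x / norm for x in out]  # unit length, ready for cosine similarity


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0)]
wrapper = SharedSpaceWrapper(CentroidExtentEncoder(), embed_dim=8)
embedding = wrapper.embed(cloud)
print(len(embedding))
```

Swapping in a different backbone in this sketch means providing another class with an `encode` method and the right `feat_dim`; the wrapper itself stays unchanged.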

Strong Performance Across Multiple Tasks

ULIP-2 delivers impressive results across three key scenarios:

- Zero-shot 3D classification: Achieves 50.6% top-1 accuracy on Objaverse-LVIS and 84.7% on ModelNet40, reported as state-of-the-art at the time of publication and obtained without any task-specific fine-tuning.

- Fine-tuned classification: Reaches 91.5% overall accuracy on ScanObjectNN with a compact model of just 1.4 million parameters.

- 3D-to-language generation: Enables captioning of 3D objects, opening doors for accessibility and semantic search in 3D repositories.

These results demonstrate that ULIP-2 doesn’t just scale—it delivers real-world utility.
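In an aligned embedding space, zero-shot classification usually reduces to three steps: embed each class name with the text encoder, embed the shape with the 3D encoder, and pick the class whose text embedding is most similar. The sketch below assumes that general recipe. The toy vectors stand in for real encoder outputs, and the class names and the temperature value of 0.07 are illustrative assumptions, not values taken from ULIP-2.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(
    shape_emb: List[float],
    class_text_embs: Dict[str, List[float]],
    temperature: float = 0.07,  # assumed value, for illustration only
) -> Tuple[str, Dict[str, float]]:
    """Return the best-matching class and a softmax over similarities."""
    names = list(class_text_embs)
    logits = [cosine(shape_emb, class_text_embs[n]) / temperature for n in names]
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - max_logit) for l in logits]
    total = sum(exps)
    probs = {n: e / total for n, e in zip(names, exps)}
    best = max(probs, key=probs.get)
    return best, probs


# Toy embeddings standing in for text-encoder and 3D-encoder outputs.
class_text_embs = {
    "chair": [0.9, 0.1, 0.0],
    "table": [0.1, 0.9, 0.1],
    "lamp": [0.0, 0.2, 0.9],
}
shape_emb = [0.8, 0.3, 0.1]

label, probs = zero_shot_classify(shape_emb, class_text_embs)
print(label)
```

No classifier weights are trained here: adding a new category only requires adding one more text embedding to `class_text_embs`.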

Real-World Applications

ULIP-2 shines in environments where labeled 3D data is scarce but multimodal reasoning is essential:

- Robotics perception: Robots can identify and reason about novel 3D objects in real time using zero-shot capabilities, without prior exposure to labeled examples.

- AR/VR asset management: Automatically classify and tag 3D models in large libraries (e.g., game assets or architectural components) using natural language queries.

- Industrial inspection: Detect anomalies or categorize parts on a production line by comparing scanned point clouds against a multimodal knowledge base.

- 3D content retrieval: Search massive 3D model repositories using text (e.g., “modern ergonomic office chair”) and retrieve semantically relevant results.

In all these cases, ULIP-2 reduces dependency on manual labeling while improving generalization across object categories and domains.
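Text-based retrieval over a 3D library can be sketched as a nearest-neighbor search in the shared embedding space: normalize each asset's embedding once at indexing time, then rank assets by dot product with the normalized query embedding. The `ShapeIndex` class, the asset IDs, and the toy vectors below are hypothetical; a real system would obtain embeddings from the trained encoders and would likely use an approximate nearest-neighbor library for large collections.

```python
import heapq
import math
from typing import Dict, List, Tuple


def l2_normalize(v: List[float]) -> List[float]:
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


class ShapeIndex:
    """Tiny in-memory index of 3D asset embeddings (illustrative only)."""

    def __init__(self) -> None:
        self._items: Dict[str, List[float]] = {}

    def add(self, asset_id: str, embedding: List[float]) -> None:
        # Normalizing at insert time makes dot product equal cosine similarity.
        self._items[asset_id] = l2_normalize(embedding)

    def search(self, query_embedding: List[float], k: int = 3) -> List[Tuple[str, float]]:
        query = l2_normalize(query_embedding)
        scored = (
            (sum(q * e for q, e in zip(query, emb)), asset_id)
            for asset_id, emb in self._items.items()
        )
        # nlargest returns the k highest-scoring (score, asset_id) pairs.
        return [(asset_id, score) for score, asset_id in heapq.nlargest(k, scored)]


index = ShapeIndex()
index.add("office_chair_01", [0.9, 0.1, 0.1])
index.add("bar_stool_07", [0.7, 0.3, 0.2])
index.add("desk_lamp_03", [0.1, 0.2, 0.9])
index.add("dining_table_02", [0.2, 0.9, 0.1])

# Pretend this is the text embedding of "modern ergonomic office chair".
query = [0.85, 0.15, 0.1]
results = index.search(query, k=2)
print([asset_id for asset_id, _ in results])
```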

Solving Industry Pain Points

ULIP-2 directly addresses three persistent challenges in 3D AI:

- Label scarcity: By removing the need for human-annotated 3D-language pairs, it slashes data preparation costs and time.

- Cold-start problems: Zero-shot classification allows immediate deployment on new object categories without retraining.

- Integration friction: Its model-agnostic design ensures compatibility with existing 3D pipelines, lowering adoption barriers.

This makes ULIP-2 particularly valuable for teams with limited annotation budgets or those operating in fast-changing domains where pre-labeled datasets quickly become obsolete.

Getting Started

ULIP-2 is designed for practical, hands-on use:

- Access models and data: All pre-trained models and tri-modal datasets (3D point clouds + images + text) are now hosted on Hugging Face at https://huggingface.co/datasets/SFXX/ulip.

- Set up the environment: The codebase supports PyTorch 1.10.1 with CUDA 11.3 and provides Conda installation instructions.

- Run inference or fine-tuning: Use the provided scripts to evaluate zero-shot performance on ModelNet40 or to fine-tune on downstream datasets such as ScanObjectNN.

- Plug in your backbone: Add your own 3D encoder by following the customized_backbone template and updating the feature dimension in the ULIP wrapper.
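For the environment step in the list above, a quick sanity check can confirm whether the installed PyTorch build matches the versions mentioned. This is a minimal sketch and not part of the ULIP-2 codebase; the `check_environment` helper is hypothetical. It returns a report rather than raising, so it also runs on machines where PyTorch is not installed.

```python
import importlib.util
from typing import Any, Dict

EXPECTED_TORCH = "1.10.1"  # versions stated in the setup step
EXPECTED_CUDA = "11.3"


def check_environment() -> Dict[str, Any]:
    """Report the installed PyTorch/CUDA versions and whether they match."""
    report: Dict[str, Any] = {
        "torch_installed": False,
        "torch_version": None,
        "cuda_version": None,
        "cuda_available": False,
        "matches_expected": False,
    }
    if importlib.util.find_spec("torch") is None:
        return report  # PyTorch is not installed in this environment

    import torch

    # Strip local build suffixes such as "+cu113" before comparing.
    version = torch.__version__.split("+")[0]
    report["torch_installed"] = True
    report["torch_version"] = version
    report["cuda_version"] = torch.version.cuda  # None for CPU-only builds
    report["cuda_available"] = torch.cuda.is_available()
    report["matches_expected"] = (
        version == EXPECTED_TORCH and torch.version.cuda == EXPECTED_CUDA
    )
    return report


report = check_environment()
for key, value in report.items():
    print(f"{key}: {value}")
```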

The framework includes pre-processed datasets (e.g., ShapeNet-55, ModelNet40) and initialization weights for image-language models, streamlining the setup process.
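Because the hosted data is large, it is often useful to fetch only the files you need. The sketch below uses `snapshot_download` from the `huggingface_hub` package with an `allow_patterns` filter. The repository ID comes from the URL above, but the `download_ulip_files` helper, the default local directory, and any file patterns you pass are assumptions; check the repository's file listing for the actual layout before choosing patterns.

```python
from typing import List

REPO_ID = "SFXX/ulip"  # dataset repository from the Hugging Face URL above


def download_ulip_files(patterns: List[str], local_dir: str = "./ulip_data") -> str:
    """Download only the files matching `patterns` and return the local path.

    An empty pattern list is rejected so that the whole repository is not
    pulled by accident.
    """
    if not patterns:
        raise ValueError("provide at least one file pattern to limit the download")

    # Imported lazily so this module loads even without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=REPO_ID,
        repo_type="dataset",
        allow_patterns=patterns,
        local_dir=local_dir,
    )


# Example call (not executed here; the pattern is a placeholder to replace
# with a real path from the repository's file listing):
# path = download_ulip_files(["<subfolder>/*"])
```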

Limitations and Considerations

While ULIP-2 offers significant advantages, adopters should note:

- Image modality dependency: ULIP-2 uses pre-rendered 2D RGB views of 3D objects. Generating these views requires rendering infrastructure or access to existing image renders.

- Pre-training compute demands: Training from scratch requires access to multi-GPU systems (e.g., 8×A100), though inference is lightweight and feasible on standard hardware.

- Data licensing: The underlying datasets (Objaverse and ShapeNet) come with their own usage terms. Objaverse uses ODC-BY 1.0; ShapeNet follows its original academic license. Always verify compliance before commercial deployment.

These constraints are manageable for most research and industrial applications, especially when leveraging the provided pre-trained checkpoints.

Summary

ULIP-2 redefines scalable 3D understanding by unifying point clouds, images, and language in a fully automated, annotation-free framework. Its combination of zero-shot capability, backbone flexibility, and strong empirical performance makes it a compelling choice for anyone working with 3D data in real-world settings. Whether you’re building intelligent robots, organizing 3D asset libraries, or developing next-generation AR experiences, ULIP-2 offers a practical, future-ready foundation—no manual labeling required.

With code, models, and datasets openly available, now is the ideal time to explore how ULIP-2 can accelerate your 3D AI initiatives.