The rapid evolution of AI-driven image generation has unlocked incredible creative potential—but often at a steep cost: slow inference, massive compute demands, and rigid workflows. For technical decision-makers building real-world applications in design, media, or interactive AI tools, these bottlenecks can halt innovation before it even begins.

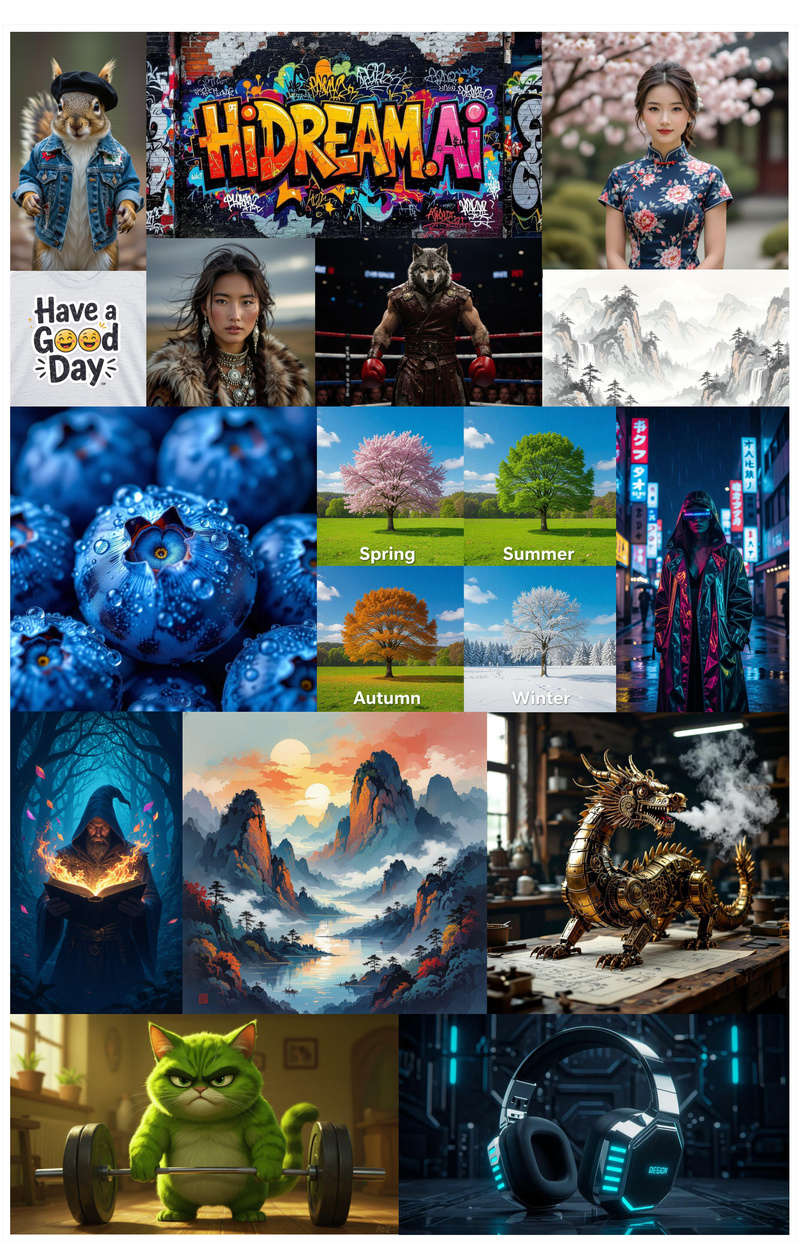

Enter HiDream-I1: a high-efficiency image generative foundation model designed to deliver state-of-the-art visual quality in seconds rather than minutes. Built on a novel sparse Diffusion Transformer (DiT) architecture, HiDream-I1 aims to break the traditional trade-off between speed and fidelity. It also extends beyond text-to-image generation: HiDream-E1 adds precise, instruction-based image editing, and together they form the basis of a fully interactive image agent, HiDream-A1.

All model variants—HiDream-I1-Full, -Dev, and -Fast—are open-sourced, making this a rare blend of cutting-edge performance, flexibility, and accessibility for both research and production.

Why Speed and Quality No Longer Have to Compete

Most high-fidelity generative models today rely on dense architectures, in which every parameter participates in every step, so compute and latency climb quickly as resolution and model size grow. HiDream-I1 addresses this with a sparse DiT structure enhanced by dynamic Mixture-of-Experts (MoE): a router sends each token to a small subset of expert sub-networks, so only a fraction of the parameters is active at any time. According to its authors, this substantially reduces computation without sacrificing output quality.
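To make the "only the most relevant parameters" idea concrete, here is a minimal sketch of generic top-k MoE routing for a single token. This is an illustration of the general technique, not HiDream-I1's actual code: the names `moe_forward` and `gate_weights`, the dot-product router, and `top_k=2` are all assumptions chosen for clarity.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, gate_weights: Sequence[Vector],
                experts: Sequence[Expert], top_k: int = 2) -> Vector:
    """Route one token to its top_k experts and mix their outputs.

    gate_weights[i] is a (hypothetical) router vector for expert i; its dot
    product with the token is that expert's routing score.
    """
    if not 1 <= top_k <= len(experts):
        raise ValueError("top_k must be between 1 and the number of experts")
    scores = [sum(w * x for w, x in zip(g, token)) for g in gate_weights]
    # Keep only the k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])  # renormalize over chosen experts
    out = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        y = experts[i](token)  # only top_k expert calls per token
        out = [o + w * v for o, v in zip(out, y)]
    return out
```

With four experts and `top_k=2`, each token pays for two expert evaluations instead of four, which is where the compute savings of a sparse model come from.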

The result: photorealistic or stylized images in seconds on suitable GPU hardware, which makes the model viable for iterative prototyping, interactive applications, and scalable deployment.

Key Capabilities That Make HiDream-I1 Stand Out

Three Variants for Every Deployment Scenario

HiDream-I1 isn’t a one-size-fits-all model. It comes in three configurations:

- HiDream-I1-Full: Maximum quality for high-stakes outputs.

- HiDream-I1-Dev: Balanced performance for development and testing.

- HiDream-I1-Fast: Optimized for low-latency use cases such as live previews.

This tiered approach lets teams match model capability to hardware constraints and product requirements.
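One way to match a variant to a latency budget is sketched below. The Hugging Face repo IDs and step counts are our reading of the HiDream-I1 README at the time of writing and should be verified there; `pick_variant` and `seconds_per_step` are hypothetical, and the estimate assumes every variant costs about the same per step, which may not hold (for example, if one variant uses classifier-free guidance and the others do not).

```python
from typing import Optional

# ASSUMPTION: repo IDs and default inference steps as we understand them
# from the HiDream-I1 README; verify before relying on them.
VARIANTS = [  # ordered from highest quality to lowest latency
    ("HiDream-ai/HiDream-I1-Full", 50),
    ("HiDream-ai/HiDream-I1-Dev", 28),
    ("HiDream-ai/HiDream-I1-Fast", 16),
]


def pick_variant(latency_budget_s: float, seconds_per_step: float) -> Optional[str]:
    """Return the highest-quality variant whose estimated run time fits the budget.

    seconds_per_step is a rough per-step cost you measure on your own hardware
    (hypothetical input, not a published figure).
    """
    for repo_id, steps in VARIANTS:
        if steps * seconds_per_step <= latency_budget_s:
            return repo_id
    return None  # even the fastest variant is too slow for this budget
```

For instance, with a measured 0.1 s per step, a 3-second budget rules out Full (about 5 s) and selects Dev (about 2.8 s).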

Dual-Stream Architecture for Efficient Multimodal Processing

At its core, HiDream-I1 starts with a dual-stream decoupled design: separate encoders process text tokens and image tokens independently and in parallel. The two streams then merge in a single-stream sparse DiT that handles cross-modal interaction, and both stages use dynamic MoE. Keeping the modalities apart in the early layers avoids redundant cross-modal computation, so that the joint stage can stay cost-efficient.
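The toy below mirrors only the data flow described above: each modality first attends within itself, then the two token sequences are concatenated so every token can attend to both. It is a sketch under heavy simplifying assumptions (identity query/key/value projections, a single head, no MoE, no learned weights), and `attend` and `dual_then_single` are hypothetical names; the real model's blocks may differ in detail.

```python
import math
from typing import List, Tuple

Token = List[float]


def attend(tokens: List[Token]) -> List[Token]:
    """Single-head self-attention with identity Q/K/V projections (toy)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi * v[j] for wi, v in zip(w, tokens)) / z for j in range(d)])
    return out


def dual_then_single(text: List[Token], image: List[Token]) -> Tuple[List[Token], List[Token]]:
    # Stage 1 (dual-stream): each modality attends only to itself.
    text_h = attend(text)
    image_h = attend(image)
    # Stage 2 (single-stream): concatenate so every token sees both modalities.
    joint = attend(text_h + image_h)
    # Split back into the text part and the image part.
    return joint[:len(text)], joint[len(text):]
```

In stage 1 the image tokens are unaffected by the text; only the joint stage lets the prompt influence them.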

From Generation to Editing: The HiDream-E1 Advantage

HiDream-I1 isn’t just about creating images from text—it evolves into HiDream-E1, an instruction-based image editing model. Instead of relying on masks or complex UIs, users provide natural language prompts like:

“Convert the image into a Ghibli style.”

HiDream-E1 interprets the instruction and applies precise edits while preserving structural integrity and semantic coherence.

Notably, HiDream-E1.1 (released July 2025) supports dynamic resolution (up to 1M pixels) and direct prompting, with no need for structured templates or external refinement. The earlier HiDream-E1-Full instead requires prompts in the format:

“Editing Instruction: {instruction}. Target Image Description: {description}”
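If you assemble E1-Full prompts programmatically, a small helper keeps the template consistent. `build_e1_full_prompt` is a hypothetical helper; only the template string comes from the format above, and stripping a trailing period from the instruction is our own choice to avoid a doubled "..".

```python
def build_e1_full_prompt(instruction: str, description: str) -> str:
    """Format a prompt in the structured template HiDream-E1-Full expects.

    Hypothetical helper; the template itself follows the format quoted above.
    """
    instruction = instruction.strip().rstrip(".")  # avoid a doubled period
    description = description.strip()
    if not instruction or not description:
        raise ValueError("both instruction and description are required")
    return f"Editing Instruction: {instruction}. Target Image Description: {description}"
```

For example, `build_e1_full_prompt("Convert the image into a Ghibli style.", "A cat on a windowsill in Studio Ghibli animation style.")` yields a single string in the required two-part form.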

To avoid writing the target description by hand, the repository includes an instruction_refinement.py script that generates it automatically with a vision-language model (VLM), served for example via vLLM or the OpenAI API.
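As a sketch of what such a refinement request can look like, the function below builds a payload in the OpenAI-style chat completions format (image passed as a base64 data URL), which vLLM's OpenAI-compatible server also accepts. This is not the script's actual code: `build_refinement_request`, the question wording, and the `model` value you pass are all assumptions.

```python
import base64
from typing import Any, Dict


def build_refinement_request(instruction: str, image_png: bytes, model: str) -> Dict[str, Any]:
    """Build a chat-completions payload asking a VLM for a target description.

    HYPOTHETICAL: the question wording below is illustrative, not taken from
    instruction_refinement.py.
    """
    question = (
        f"Here is an image and an editing instruction: '{instruction}'. "
        "Describe in one or two sentences what the image should look like after the edit."
    )
    data_url = "data:image/png;base64," + base64.b64encode(image_png).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
    }
```

The VLM's reply would then serve as the "Target Image Description" half of the E1-Full prompt.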

Toward a Full Image Agent: HiDream-A1

By unifying text-to-image generation and instruction-guided editing, HiDream-I1 lays the foundation for HiDream-A1—a conversational, interactive image agent capable of iterative creation and refinement through user feedback. This opens doors for AI co-pilots in creative workflows.
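The article does not describe HiDream-A1's internals, but the generate-then-refine loop it implies can be sketched as follows. `agent_session`, `generate`, `edit`, and `get_feedback` are hypothetical callables standing in for a text-to-image model, an instruction-based editor, and the user; `Image` is a placeholder type.

```python
from typing import Callable, List, Optional

Image = bytes  # placeholder image type for this sketch


def agent_session(prompt: str,
                  generate: Callable[[str], Image],
                  edit: Callable[[Image, str], Image],
                  get_feedback: Callable[[Image], Optional[str]],
                  max_rounds: int = 5) -> List[Image]:
    """Generate once, then apply instruction edits until feedback is None."""
    history = [generate(prompt)]  # text-to-image step (HiDream-I1's role)
    for _ in range(max_rounds):
        instruction = get_feedback(history[-1])
        if instruction is None:  # user is satisfied
            break
        history.append(edit(history[-1], instruction))  # editing step (HiDream-E1's role)
    return history
```

Keeping the full history makes it easy to let a user step back to an earlier version, a natural fit for a conversational agent.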

Real-World Applications for Technical Teams

HiDream-I1 shines in scenarios where speed, precision, and ease of integration matter:

- Marketing & Media: Rapidly generate campaign visuals or adapt stock photos to brand guidelines via text prompts.

- Design Prototyping: Iterate on UI mockups, product concepts, or character designs without manual redrawing.

- AI-Powered Photo Editors: Build consumer apps that let users edit photos with simple instructions—no design skills required.

- Research & Education: Experiment with multimodal generative modeling using open weights and clean inference pipelines.

Because editing is instruction-driven—not pixel-mask-dependent—it drastically lowers the barrier for non-technical users while giving developers fine-grained control.

Getting Started: Open Source and Ready to Run

HiDream-I1 and HiDream-E1 are fully open-sourced on GitHub:

- HiDream-I1: github.com/HiDream-ai/HiDream-I1

- HiDream-E1: github.com/HiDream-ai/HiDream-E1

Quick Setup

Ensure you have CUDA 12.4 and install dependencies:

pip install -r requirements.txt

pip install -U flash-attn --no-build-isolation

pip install -U git+https://github.com/huggingface/diffusers.git
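Before running inference, a quick pre-flight check can confirm that the key packages are importable. The sketch below uses only the standard library (plus torch if present); `check_environment` is a hypothetical helper, and note that `torch.version.cuda` reports the CUDA version PyTorch was built with, not necessarily the system toolkit.

```python
import importlib.metadata
import importlib.util
from typing import Dict, Optional

# Module name -> distribution name as published on PyPI.
PACKAGES = {"torch": "torch", "flash_attn": "flash-attn", "diffusers": "diffusers"}


def check_environment() -> Dict[str, Optional[str]]:
    """Map each required module to its installed version, or None if missing."""
    report: Dict[str, Optional[str]] = {}
    for module, dist in PACKAGES.items():
        if importlib.util.find_spec(module) is None:
            report[module] = None
            continue
        try:
            report[module] = importlib.metadata.version(dist)
        except importlib.metadata.PackageNotFoundError:
            report[module] = "unknown"  # importable, but no package metadata found
    if report["torch"] is not None:
        import torch  # deferred: imported only when installed
        report["torch_cuda"] = torch.version.cuda  # build-time CUDA version, or None
    return report
```

Running `print(check_environment())` shows at a glance whether flash-attn or the diffusers build is missing.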

Run Inference

For HiDream-E1.1 (dynamic resolution, direct prompts):

python ./inference_e1_1.py

For HiDream-E1-Full:

python ./inference.py

The refine_strength parameter (between 0.0 and 1.0) balances editing against refinement: the first (1 - refine_strength) fraction of the denoising steps performs the core edit, and the remaining refine_strength fraction uses HiDream-I1-Full for img2img enhancement. Set it to 0.0 to skip refinement.
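The split is simple arithmetic. The sketch below computes it for a given step count; `split_steps` is a hypothetical helper, and rounding the refinement steps to the nearest integer is an assumption, since the actual script may round or truncate differently.

```python
from typing import Tuple


def split_steps(num_steps: int, refine_strength: float) -> Tuple[int, int]:
    """Return (edit_steps, refine_steps) for a given refine_strength.

    ASSUMPTION: refinement steps are rounded to the nearest integer.
    """
    if not 0.0 <= refine_strength <= 1.0:
        raise ValueError("refine_strength must be in [0, 1]")
    refine = round(num_steps * refine_strength)
    return num_steps - refine, refine
```

For example, 28 steps with refine_strength=0.3 gives 20 editing steps followed by 8 refinement steps, and refine_strength=0.0 leaves all 28 steps for editing.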

Try It Interactively

Gradio demos are included:

- gradio_demo_1_1.py for HiDream-E1.1

- gradio_demo.py for HiDream-E1-Full

Note: The scripts automatically download meta-llama/Llama-3.1-8B-Instruct. You must accept Meta’s license on Hugging Face and run huggingface-cli login.

Important Limitations and Considerations

While powerful, HiDream-I1 comes with practical constraints:

- Active Development: The codebase and models are under frequent updates—expect changes.

- Prompt Formatting: HiDream-E1-Full requires structured prompts unless you use the instruction refinement script, which needs access to a VLM (an OpenAI API key or a vLLM server). HiDream-E1.1 removes this friction.

- Hardware Dependencies: Requires CUDA 12.4 and FlashAttention for optimal performance.

- Llama-3.1 Dependency: Automatic download of the Llama model necessitates Hugging Face login and license agreement.

These factors should be evaluated against your team’s infrastructure and compliance policies.

Benchmark-Proven Editing Accuracy

HiDream-E1 is accurate as well as fast. On the EmuEdit and ReasonEdit benchmarks, HiDream-E1.1 achieves the highest scores among the models compared:

- 7.57 average on EmuEdit (vs. 5.99 for Gemini-2.0-Flash)

- 7.70 on ReasonEdit (top among all listed models)

This validates its ability to handle diverse editing tasks: style transfer, object addition/removal, background changes, and color adjustments—all from natural language.

Summary

HiDream-I1 represents a strategic leap for teams that need high-quality, fast, and editable image generation without vendor lock-in. Its sparse architecture delivers efficiency, its open weights enable transparency and customization, and its extension into HiDream-E1 and, eventually, HiDream-A1 gives it a clear path into broader multimodal workflows.

For technical decision-makers, this is more than another generative model: it is a practical open foundation that can accelerate AIGC pipelines while preserving creative control and keeping compute requirements manageable.