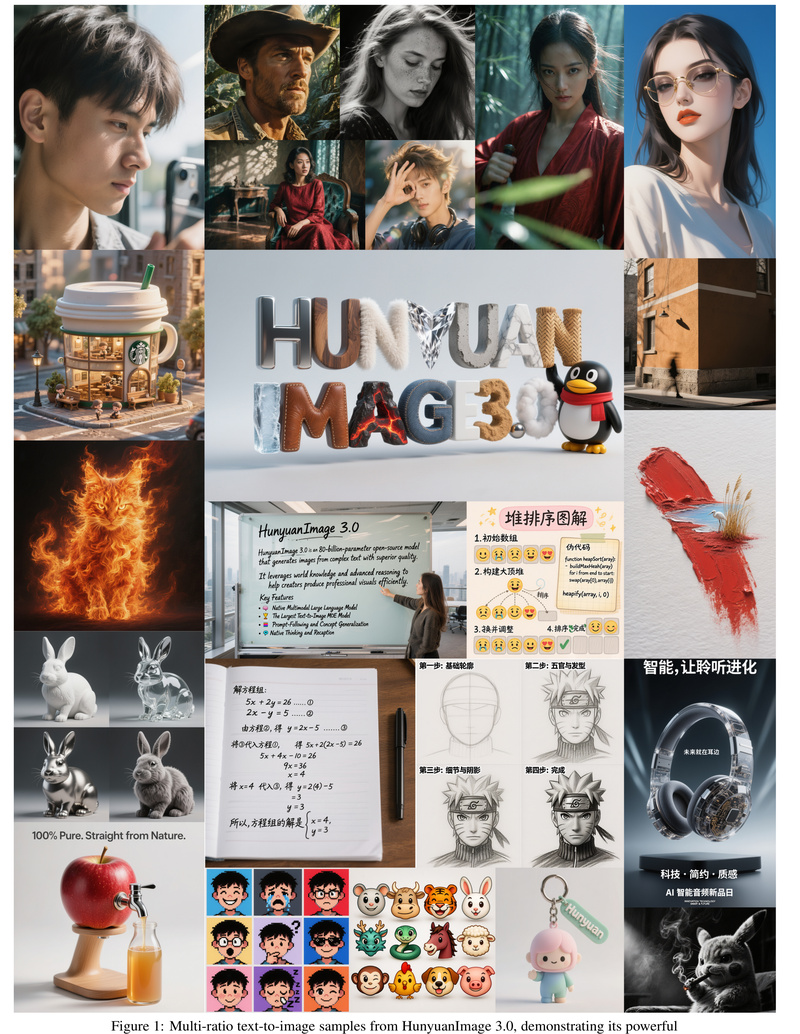

HunyuanImage-3.0 is an open-source image generation model developed by Tencent. Rather than the DiT-based diffusion architectures common among image generators, it uses a native multimodal autoregressive architecture that unifies text understanding and image generation within a single framework. With 80 billion total parameters (13 billion activated per token) organized as a Mixture-of-Experts (MoE) system, it is, at the time of its release, the largest open-source image generation model, and the project reports performance that rivals leading closed-source models.

What makes HunyuanImage-3.0 especially valuable for technical decision-makers is not just its scale but its practical readiness: the model weights are published on Hugging Face, and the inference code and technical documentation on GitHub, enabling immediate integration, customization, and evaluation in real-world workflows.

Why HunyuanImage-3.0 Stands Out

Unified Multimodal Autoregressive Design

Most open-source image generators rely on DiT (Diffusion Transformer) or latent diffusion frameworks, which typically pair a text encoder with a separate denoising backbone. HunyuanImage-3.0 takes a different path: it models text and images within one unified autoregressive framework. Because text and visual tokens are processed jointly by the same network, the model can reason over both, which the project credits for stronger semantic alignment between prompts and outputs.

This design also enables what the project calls world-knowledge reasoning. When given a sparse prompt like “a dog running on grass,” the model can infer plausible contextual details (breed, lighting, background, perspective) without requiring exhaustive manual prompting.

Mixture-of-Experts at Unprecedented Scale

HunyuanImage-3.0 employs 64 expert networks, activating only 13 billion parameters per token during inference. This MoE structure delivers two main benefits:

- Higher capacity without a proportional compute cost: each token is routed through only a subset of the experts, so per-token compute scales with the 13 billion active parameters rather than the full 80 billion. Experts can specialize in different kinds of visual content, which is intended to improve image fidelity.

- Better generalization across diverse domains: because routing is decided per token, prompts ranging from photorealistic portraits to abstract art or product renders can draw on different experts.
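To make the routing idea concrete: with 13B of 80B parameters active, roughly 16% of the weights are used for any one token. The toy sketch below shows top-k gating for a single token in plain Python. It is not HunyuanImage-3.0's router; the scalar "experts", the softmax gate, and k=2 are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, router_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]],
                k: int = 2) -> Tuple[float, List[int]]:
    """Toy top-k MoE for one token: only the k highest-scoring experts are evaluated."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize gate weights over the chosen experts
    y = sum(probs[i] / norm * experts[i](x) for i in top)  # unchosen experts never run
    return y, top


if __name__ == "__main__":
    # Four toy experts that scale their input by 1, 2, 3 and 4.
    experts = [lambda v, s=s: s * v for s in (1.0, 2.0, 3.0, 4.0)]
    y, used = moe_forward(2.0, [0.0, 0.0, 10.0, 10.0], experts, k=2)
    print(used, y)  # [2, 3] 7.0
```

Only the selected experts are called, which is where the compute saving comes from; a real MoE layer applies the same idea to feed-forward sub-networks over batches of tokens.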

The project reports that its evaluations, including the automated SSAE (Structured Semantic Alignment Evaluation) metric and human GSB (Good/Same/Bad) comparisons, show HunyuanImage-3.0 achieving state-of-the-art prompt adherence and visual quality among open models.
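In a GSB study, raters judge each of the evaluated model's outputs as Good (better), Same, or Bad (worse) relative to a competitor's. One common way to summarize those counts is the net win rate (G - B) / (G + S + B); the article does not say how the reported results were aggregated, so treat this formula as an assumed convention, and `gsb_net_win_rate` as a hypothetical helper.

```python
def gsb_net_win_rate(good: int, same: int, bad: int) -> float:
    """Net win rate (G - B) / (G + S + B); positive values favor the evaluated model."""
    total = good + same + bad
    if total == 0:
        raise ValueError("no judgments recorded")
    return (good - bad) / total


print(gsb_net_win_rate(50, 30, 20))  # 0.3
```

Ties count in the denominator but not the numerator, so a model that is mostly judged "Same" ends up with a score close to zero.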

Practical Use Cases for Technical Teams

HunyuanImage-3.0 excels in scenarios where precision, creativity, and resolution flexibility matter:

- Creative design & advertising: Generate high-fidelity mockups of posters, magazine covers, or digital ads from natural language descriptions.

- Product visualization: Render the same object in multiple materials (e.g., ceramic, glass, metal) with consistent geometry and lighting—ideal for e-commerce or industrial design.

- Narrative illustration: Produce cinematic, multi-character scenes with coherent spatial relationships and mood-appropriate lighting (e.g., film noir street corners or nostalgic interior shots).

- Educational & technical content: Create step-by-step visual guides (e.g., “how to sketch a parrot”) with structured layout and fine-grained detail.
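For the product-visualization case, a simple pattern is to hold the prompt fixed and vary only the material. The sketch below assumes a model object exposing `generate_image(prompt=...)` that returns an image with a `.save()` method, as in the Transformers example in this article; the template text, `render_variants`, and the output naming are hypothetical. Keeping the rest of the prompt identical helps consistency but does not by itself guarantee identical geometry across separate generations.

```python
from pathlib import Path
from typing import Any, Iterable, List, Protocol


class ImageGenerator(Protocol):
    """Anything with a generate_image(prompt=...) method, e.g. the loaded model."""

    def generate_image(self, prompt: str) -> Any: ...


# Hypothetical template: everything except the material stays fixed.
TEMPLATE = (
    "A {material} teapot on a plain white studio background, "
    "soft diffuse lighting, three-quarter view"
)


def material_prompts(materials: Iterable[str], template: str = TEMPLATE) -> List[str]:
    """Fill the template once per material."""
    return [template.format(material=m) for m in materials]


def render_variants(model: ImageGenerator, materials: Iterable[str],
                    out_dir: str = "variants") -> List[Path]:
    """Generate one image per material and save it as <out_dir>/<material>.png."""
    materials = list(materials)  # accept any iterable; it is read twice below
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for material, prompt in zip(materials, material_prompts(materials)):
        image = model.generate_image(prompt=prompt)
        path = out / f"{material}.png"
        image.save(path)
        paths.append(path)
    return paths
```

With a loaded model, `render_variants(model, ["ceramic", "glass", "metal"])` would write three PNG files, one per material.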

The model supports both automatic and user-specified resolutions, including custom aspect ratios like 16:9 or exact pixel dimensions (e.g., 1280×768). Being able to request the target size directly, instead of resizing or cropping afterward, simplifies integration into existing pipelines.
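If a pipeline accepts size requests from users or config files, it can help to normalize the three forms mentioned above (automatic, an aspect ratio such as 16:9, or exact pixels such as 1280×768) before they reach the model. `parse_size` and `SizeSpec` are hypothetical helpers that only validate strings; check the project's documentation for how a size is actually passed to HunyuanImage-3.0.

```python
import re
from typing import NamedTuple


class SizeSpec(NamedTuple):
    kind: str   # "auto", "ratio", or "pixels"
    a: int = 0  # ratio numerator, or width in pixels
    b: int = 0  # ratio denominator, or height in pixels

    def __str__(self) -> str:
        if self.kind == "auto":
            return "auto"
        sep = ":" if self.kind == "ratio" else "x"
        return f"{self.a}{sep}{self.b}"


_PIXELS = re.compile(r"(\d+)\s*[x×]\s*(\d+)")
_RATIO = re.compile(r"(\d+)\s*:\s*(\d+)")


def parse_size(spec: str) -> SizeSpec:
    """Parse 'auto', 'W:H' (aspect ratio) or 'WxH' (pixels); reject anything else."""
    s = spec.strip().lower()
    if s == "auto":
        return SizeSpec("auto")
    for kind, pattern in (("pixels", _PIXELS), ("ratio", _RATIO)):
        m = pattern.fullmatch(s)
        if m:
            a, b = int(m.group(1)), int(m.group(2))
            if a == 0 or b == 0:
                raise ValueError(f"size components must be positive: {spec!r}")
            return SizeSpec(kind, a, b)
    raise ValueError(f"unrecognized size: {spec!r}")
```

Normalizing early means a typo such as "1280-768" fails fast in the pipeline instead of after an expensive model load.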

Getting Started: From Code to Image

System Requirements

Running HunyuanImage-3.0 demands significant resources:

- OS: Linux

- GPUs: ≥3× NVIDIA A100/H100 (80GB VRAM each); 4× recommended

- Disk: 170GB free space for model weights

- Software: Python 3.12+, PyTorch 2.7.1 (CUDA 12.8)
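A quick preflight check can catch an undersized machine before starting a 170GB download. The sketch below encodes the minimums listed above; the GPU probe uses PyTorch's `torch.cuda` API and imports it only when called. The weight-size estimate assumes 16-bit weights (2 bytes per parameter), which is an assumption rather than a published figure: 80B × 2 bytes ≈ 160GB, already the combined memory of two 80GB cards before any activations, which is consistent with the three-GPU minimum. All function names are hypothetical.

```python
import shutil
import sys
from typing import List, Sequence, Tuple

# Minimums taken from the requirements list in this article.
MIN_GPUS = 3
MIN_GPU_MEM_GB = 80
MIN_DISK_GB = 170
MIN_PYTHON = (3, 12)


def estimate_weight_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Rough weight footprint in GB (1e9 bytes); 2 bytes/param assumes 16-bit weights."""
    return num_params * bytes_per_param / 1e9


def detect_gpu_mem_gb() -> List[float]:
    """Total memory of each visible CUDA device in GB, via PyTorch (imported lazily)."""
    import torch

    if not torch.cuda.is_available():
        return []
    return [
        torch.cuda.get_device_properties(i).total_memory / 1e9
        for i in range(torch.cuda.device_count())
    ]


def disk_free_gb(path: str = ".") -> float:
    """Free space on the filesystem that holds `path`, in GB."""
    return shutil.disk_usage(path).free / 1e9


def check_requirements(gpu_mem_gb: Sequence[float], free_gb: float,
                       python_version: Tuple[int, ...] = tuple(sys.version_info[:2])) -> List[str]:
    """Return human-readable problems; an empty list means the minimums are met."""
    problems = []
    # Nominal "80GB" cards typically report a little over 80e9 bytes, so they pass.
    big = [m for m in gpu_mem_gb if m >= MIN_GPU_MEM_GB]
    if len(big) < MIN_GPUS:
        problems.append(f"need {MIN_GPUS}+ GPUs with {MIN_GPU_MEM_GB} GB each, found {len(big)}")
    if free_gb < MIN_DISK_GB:
        problems.append(f"need {MIN_DISK_GB} GB free disk, found {free_gb:.0f} GB")
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append(f"need Python 3.12+, found {python_version[0]}.{python_version[1]}")
    return problems
```

On the target machine, `check_requirements(detect_gpu_mem_gb(), disk_free_gb("."))` returns an empty list when the stated minimums are met.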

Quick Inference with Transformers

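After downloading the weights from Hugging Face into a folder whose name contains no dots (e.g., HunyuanImage-3 rather than HunyuanImage-3.0, since dots in the directory name can break loading with Transformers), you can generate an image in a few lines: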

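```python
from transformers import AutoModelForCausalLM

model_id = "./HunyuanImage-3"  # local folder; the name must not contain dots

model = AutoModelForCausalLM.from_pretrained(
    model_id,
    trust_remote_code=True,      # the checkpoint ships its own modeling code
    torch_dtype="auto",
    device_map="auto",           # spread the weights across the available GPUs
    attn_implementation="sdpa",  # or "flash_attention_2" if FlashAttention is installed
    moe_impl="eager",            # or "flashinfer" if FlashInfer is installed
)
model.load_tokenizer(model_id)

image = model.generate_image(prompt="A brown and white dog is running on the grass")
image.save("output.png")
```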
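Because the dotted-folder issue is easy to trip over, a small guard can check the target directory before downloading. This sketch assumes the `huggingface_hub` package and the repository id `tencent/HunyuanImage-3.0`; `validate_model_dir` and `download_weights` are hypothetical helpers, not part of the project.

```python
from pathlib import Path


def validate_model_dir(path: str) -> Path:
    """Reject a target folder whose final name contains a dot (e.g. HunyuanImage-3.0)."""
    p = Path(path)
    if "." in p.name:
        raise ValueError(f"rename {p.name!r}: the model folder name should not contain dots")
    return p


def download_weights(local_dir: str = "./HunyuanImage-3") -> Path:
    """Download the checkpoint into a dot-free folder (assumes huggingface_hub is installed)."""
    target = validate_model_dir(local_dir)
    from huggingface_hub import snapshot_download  # imported here so the guard works without it

    snapshot_download(repo_id="tencent/HunyuanImage-3.0", local_dir=str(target))
    return target
```

Validating before the call means a bad folder name fails immediately rather than after roughly 170GB has been fetched.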

Enhanced Prompting via DeepSeek (Recommended)

The base checkpoint does not rewrite prompts automatically. For best results, use the provided CLI with DeepSeek integration:

```bash
export DEEPSEEK_KEY_ID="your_key"
export DEEPSEEK_KEY_SECRET="your_secret"

python3 run_image_gen.py \
  --model-id ./HunyuanImage-3 \
  --prompt "A cinematic photo of a woman in a vintage armchair" \
  --sys-deepseek-prompt "universal"
```

The --sys-deepseek-prompt option selects a system prompt that has DeepSeek expand sparse inputs into detailed, model-friendly descriptions before generation, which the project recommends for the best output quality.
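To drive the same CLI from a Python batch job, one option is to build the argument list explicitly and run it with `subprocess`. Only the flags shown above are used; `build_gen_command` and `run_with_rewrite` are hypothetical names, and the sketch assumes it runs from the repository root where run_image_gen.py lives.

```python
import os
import subprocess
import sys
from typing import List


def build_gen_command(prompt: str, model_id: str = "./HunyuanImage-3",
                      sys_deepseek_prompt: str = "universal") -> List[str]:
    """Argument list for run_image_gen.py using only the flags shown in this article."""
    return [
        sys.executable, "run_image_gen.py",  # same interpreter as the calling script
        "--model-id", model_id,
        "--prompt", prompt,
        "--sys-deepseek-prompt", sys_deepseek_prompt,
    ]


def run_with_rewrite(prompt: str, model_id: str = "./HunyuanImage-3",
                     sys_deepseek_prompt: str = "universal") -> subprocess.CompletedProcess:
    """Run the CLI once; fail early if the DeepSeek credentials are not set."""
    missing = [k for k in ("DEEPSEEK_KEY_ID", "DEEPSEEK_KEY_SECRET") if not os.environ.get(k)]
    if missing:
        raise RuntimeError(f"set {', '.join(missing)} before calling the CLI")
    cmd = build_gen_command(prompt, model_id, sys_deepseek_prompt)
    return subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
```

Passing a list rather than a single shell string avoids quoting problems when prompts contain quotes or apostrophes, and the child process inherits the exported DeepSeek variables.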

Interactive Demo with Gradio

For rapid prototyping, launch a web UI:

```bash
export MODEL_ID=./HunyuanImage-3
# Both flags require the optional FlashInfer and FlashAttention packages
sh run_app.sh --moe-impl flashinfer --attn-impl flash_attention_2
```

Access the interface at http://localhost:443 to experiment interactively.
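When the demo is started by a script (for example on a remote box), it helps to wait until the web UI actually responds, since loading the weights can take a while. A minimal standard-library sketch; `wait_for_http` is a hypothetical helper, and its default URL is the one given above.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str = "http://localhost:443", timeout: float = 600.0,
                  interval: float = 5.0) -> bool:
    """Poll `url` until it answers or `timeout` seconds pass; return True if it came up."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            return True  # an HTTP error status still means the server is listening
        except OSError:
            pass  # connection refused, timeout, etc.: not up yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

HTTPError is caught before the broader OSError because it is a subclass of it; any HTTP response, even an error page, proves the server is accepting connections.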

Performance Optimizations and Current Limitations

Speed Up Inference by Up to 3×

Install two optional libraries:

- FlashAttention 2.8.3: Accelerates attention computation (select it with attn_implementation="flash_attention_2").

- FlashInfer: Provides optimized kernels for MoE inference (select it with moe_impl="flashinfer").

⚠️ Note: The first inference with FlashInfer may take about 10 minutes while its kernels compile; subsequent runs on the same machine are much faster.
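Since both libraries are optional, a loader can pick the faster backends only when they are installed. This sketch checks importability with `importlib.util.find_spec`, assuming the pip packages expose the modules `flash_attn` and `flashinfer`; it does not verify that the installed builds match your CUDA and PyTorch versions. `pick_backends` is a hypothetical helper.

```python
import importlib.util
from typing import Dict


def pick_backends() -> Dict[str, str]:
    """Choose attention and MoE backends based on which optional packages are installed."""
    has_flash_attn = importlib.util.find_spec("flash_attn") is not None
    has_flashinfer = importlib.util.find_spec("flashinfer") is not None
    return {
        "attn_implementation": "flash_attention_2" if has_flash_attn else "sdpa",
        "moe_impl": "flashinfer" if has_flashinfer else "eager",
    }


if __name__ == "__main__":
    print(pick_backends())
```

The returned dict can be unpacked into the from_pretrained call from the quick-start example, e.g. `AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True, torch_dtype="auto", device_map="auto", **pick_backends())`.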

Key Constraints to Consider

- Hardware-intensive: Not suitable for single-GPU or consumer-grade setups.

- No built-in prompt enhancement in base model: Requires external rewriting (e.g., via DeepSeek) for optimal results.

- Roadmap gaps: Image-to-image generation, multi-turn interaction, and distilled checkpoints are not yet open-sourced.

These limitations are clearly documented in the project’s open-source plan, and the team actively encourages community contributions.

Summary

HunyuanImage-3.0 offers technical teams a rare combination: cutting-edge performance, full architectural transparency, and practical integration pathways. By open-sourcing a model that competes with proprietary systems, Tencent empowers researchers, engineers, and creatives to build, extend, and deploy advanced multimodal applications without vendor lock-in.

If your project demands high-quality, semantically accurate image generation at scale, and you have access to enterprise-grade GPU infrastructure, HunyuanImage-3.0 is a compelling foundation to evaluate today.