In the ever-evolving landscape of generative AI, image synthesis has long been dominated by diffusion models—powerful, yet often complex, resource-intensive, and reliant on external components. Enter Lumina-mGPT 2.0, a groundbreaking, decoder-only autoregressive model trained entirely from scratch that redefines what’s possible in image generation. Unlike diffusion-based or hybrid approaches, Lumina-mGPT 2.0 needs no pretrained vision encoders, external tokenizers beyond its own unified scheme, or multi-stage pipelines. It stands alone—both technically and legally—offering high-quality image generation, editing, and dense prediction within a single, flexible framework.

For developers, researchers, and product teams seeking a commercially viable, architecture-liberated, and task-unified solution, Lumina-mGPT 2.0 delivers state-of-the-art results while sidestepping the licensing and architectural constraints common in competing systems.

A Truly Self-Contained Autoregressive Vision Model

What makes Lumina-mGPT 2.0 unique is its complete independence. It is not built on top of existing vision-language backbones or diffusion priors. Instead, it is trained from scratch as a pure autoregressive transformer, enabling unrestricted architectural innovation and freedom from restrictive licenses—a critical advantage for commercial deployment.

This independence doesn’t come at the cost of quality. On standard benchmarks like GenEval and DPG, Lumina-mGPT 2.0 matches or even surpasses leading diffusion models, including DALL·E 3 and SANA. More importantly, it retains the inherent strengths of autoregressive modeling:

- Sequential compositionality: Generate images token-by-token with precise control.

- Natural support for multi-turn editing: Modify images iteratively by appending new tokens.

- Unified token space: Text, image, and metadata all live in the same sequence format.

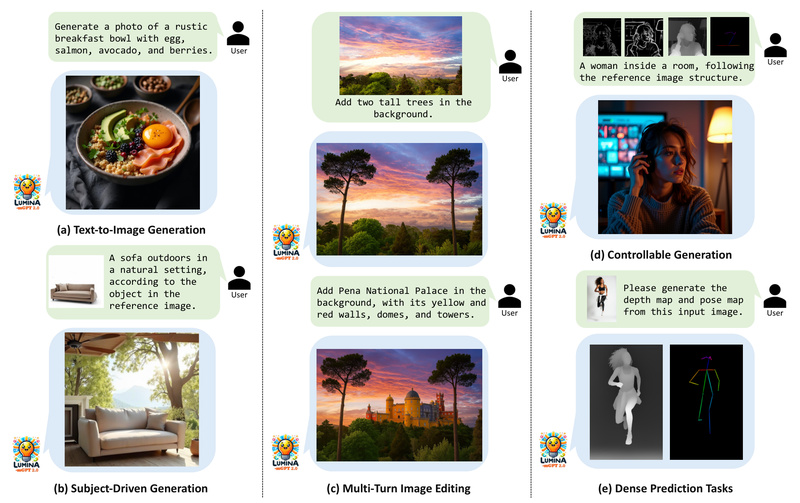

One Model, Many Capabilities

Lumina-mGPT 2.0 eliminates the need for separate models or complex pipelines by supporting a wide range of multimodal tasks out of the box:

- Text-to-image generation (e.g., “a cyberpunk cat wearing neon goggles”)

- Image pair generation (e.g., before/after scenes)

- Subject-driven generation (preserve object identity across contexts)

- Controllable synthesis (e.g., depth-guided, pose-conditioned)

- Multi-turn image editing (iteratively refine or alter parts of an image)

- Dense prediction tasks (e.g., semantic segmentation, depth estimation)

All these are handled within the same generative sequence, using the same parameters and tokenization scheme. This not only simplifies deployment but also enables cross-task generalization—something hybrid or task-specific models struggle with.

For example, in subject-driven generation, you provide a reference image and a new prompt (e.g., “this item on a rooftop at sunset”), and the model seamlessly integrates the subject into the new scene—no fine-tuning or inversion required.

Solving Real-World Adoption Barriers

Traditional generative pipelines often suffer from three major pain points:

- Fragmentation: Needing different models for generation, editing, and control.

- Licensing ambiguity: Uncertainty around commercial use due to dependencies on third-party components.

- Loss of autoregressive flexibility: Diffusion models, while high-quality, lack native support for sequential editing or token-level intervention.

Lumina-mGPT 2.0 directly addresses all three:

- It’s an all-in-one model (“Omni” checkpoint supports both 768px T2I and 512px I2I tasks).

- It’s fully open and self-contained, with clear licensing and no hidden dependencies.

- It preserves autoregressive advantages—ideal for applications requiring iterative refinement or compositional reasoning.

Practical and Accessible Usage

Getting started with Lumina-mGPT 2.0 is straightforward:

- Clone the repo and set up a Python 3.10 environment.

- Install dependencies, including FlashAttention for efficiency.

- Download the MoVQGAN tokenizer checkpoint (a single file).

- Run inference with simple CLI commands.

For text-to-image:

python generate_examples/generate.py --model_path Alpha-VLLM/Lumina-mGPT-2.0 --save_path save_samples/ --cfg 4.0 --top_k 4096 --temperature 1.0 --width 768 --height 768

For image-to-image tasks like depth-guided generation or subject preservation, just add --task i2i and specify the control type and input image path.

Fine-tuning is also supported, enabling domain adaptation or custom task specialization—documented clearly in the TRAIN.md guide.

Speed and Efficiency Through Smart Decoding

Autoregressive models are often criticized for slow inference. Lumina-mGPT 2.0 counters this with two built-in acceleration strategies:

- Speculative Jacobi Decoding: Predicts multiple tokens in parallel during inference, cutting generation time nearly in half.

- Model Quantization: Reduces memory footprint by over 50% without quality loss.

On a single A100 GPU:

- Baseline: 694 seconds, 80 GB VRAM

- With Speculative Jacobi: 324 seconds, 79.2 GB

- With both Jacobi + Quantization: 304 seconds, only 33.8 GB

This makes high-resolution (768px) generation feasible even on limited hardware—crucial for real-world deployment.

Note: Speculative decoding is not recommended for image-to-image tasks due to sensitivity to initial conditions.

Current Limitations to Consider

While powerful, Lumina-mGPT 2.0 isn’t without trade-offs:

- High baseline VRAM usage (~80 GB) without quantization—may be prohibitive for smaller labs.

- Requires manual download of the MoVQGAN tokenizer (not bundled in the model checkpoint).

- Image-to-image tasks do not benefit from speculative decoding, so speedups are limited to T2I scenarios.

These are practical considerations, not fundamental flaws—and the team provides clear guidance to work around them.

Who Should Use Lumina-mGPT 2.0?

This model is ideal for:

- Research teams exploring pure autoregressive vision architectures.

- Product engineers building unified generative systems (e.g., design tools with generation + editing + control).

- Commercial developers needing license-safe, self-contained models for deployment.

- AI practitioners frustrated by the complexity of diffusion pipelines or multi-model orchestration.

If your goal is to simplify your generative stack while maintaining state-of-the-art quality and full architectural control, Lumina-mGPT 2.0 offers a compelling path forward.

Summary

Lumina-mGPT 2.0 proves that autoregressive models can compete with—and even surpass—diffusion systems in image generation, all while offering greater flexibility, composability, and licensing clarity. By unifying diverse tasks into a single, from-scratch trained framework, it removes traditional barriers to adoption and opens new possibilities for controllable, editable, and efficient multimodal generation. With its open codebase, clear documentation, and practical acceleration techniques, it’s not just a research artifact—it’s a production-ready foundation for the next generation of generative AI applications.