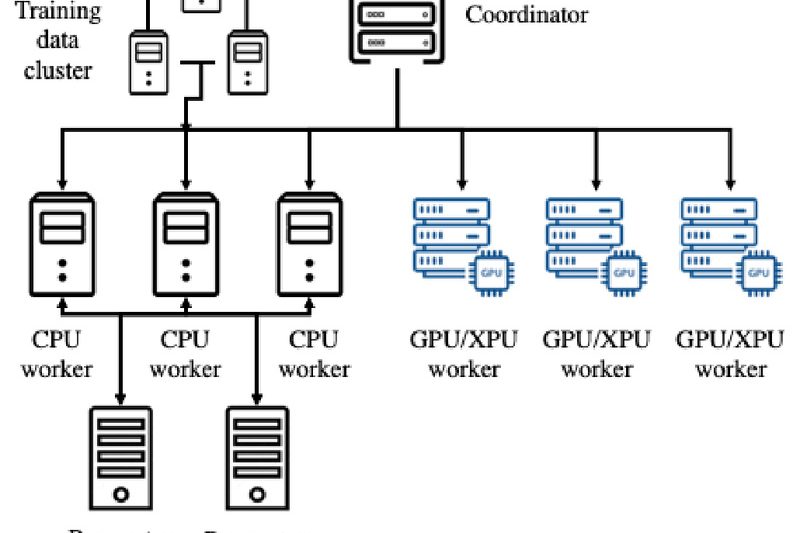

Training large-scale deep neural networks (DNNs) efficiently is a persistent challenge—especially when your infrastructure includes a mix of hardware like…

Video-LLaVA: One Unified Model for Both Image and Video Understanding—No More Modality Silos

If you’re evaluating vision-language models for a project that involves both images and videos, you’ve probably faced a frustrating trade-off:…

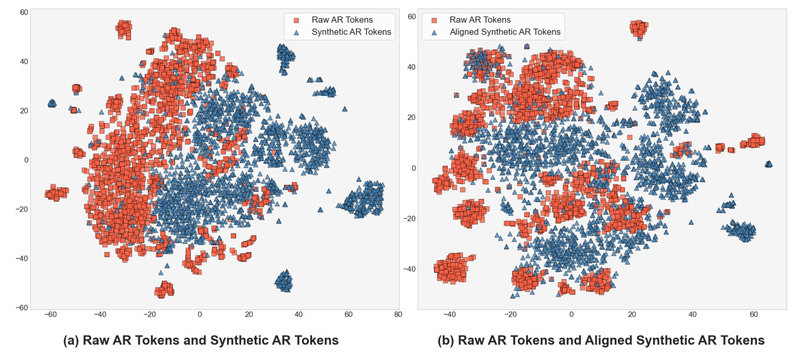

SpeechAlign: Bridging the Gap Between Realistic and Human-Preferred Speech Generation

Recent advances in speech language models (SLMs) have made it possible to generate highly realistic speech—often indistinguishable from human voices…

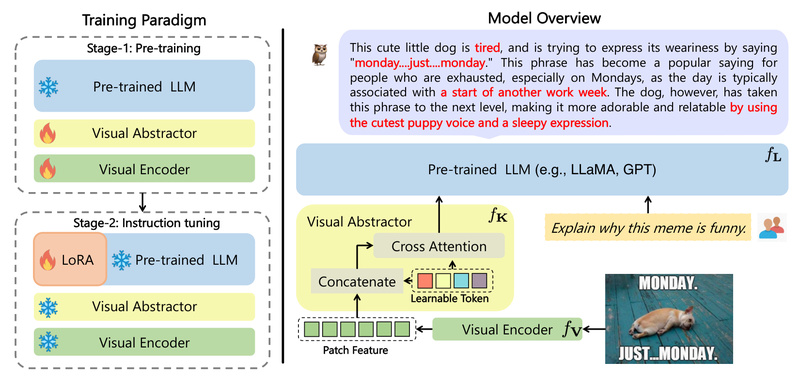

mPLUG-Owl: Modular Multimodal AI for Real-World Vision-Language Tasks

In today’s AI-driven product landscape, the ability to understand both images and text isn’t just a research novelty—it’s a practical…

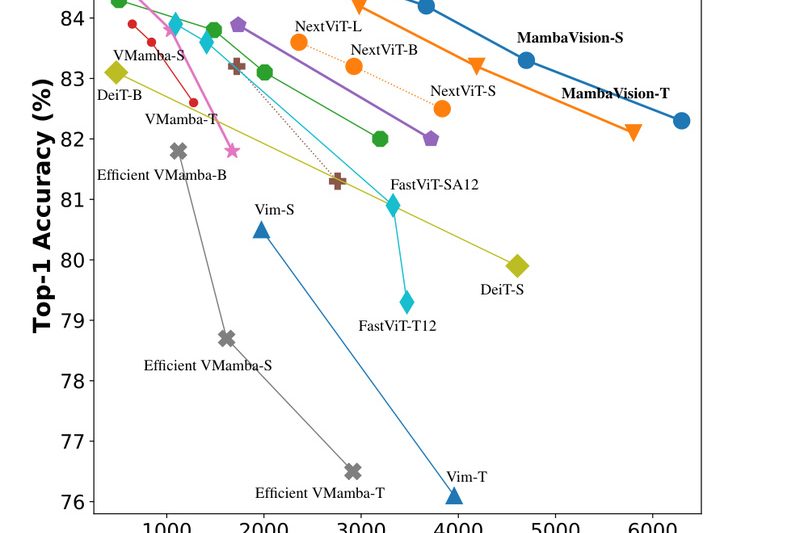

MambaVision: Achieve SOTA Image Classification & Downstream Vision Tasks with Hybrid Mamba-Transformer Efficiency

If you’re building computer vision systems that demand both high accuracy and real-world efficiency—without getting bogged down in architectural complexity—MambaVision…

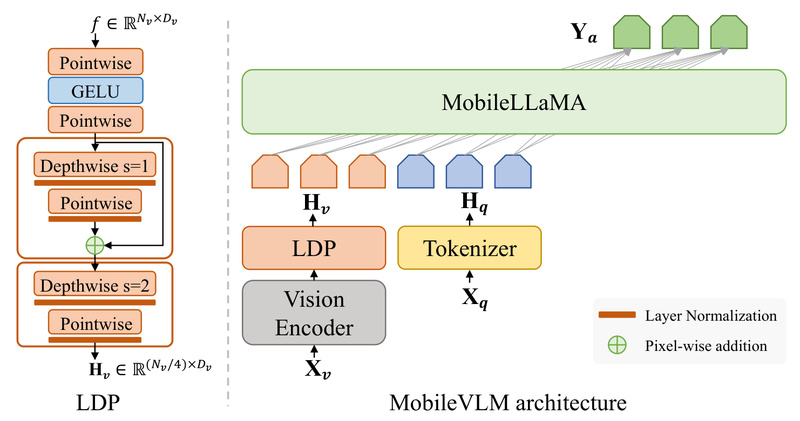

MobileVLM: High-Performance Vision-Language AI That Runs Fast and Privately on Mobile Devices

MobileVLM is a purpose-built vision-language model (VLM) engineered from the ground up for on-device deployment on smartphones and edge hardware.…

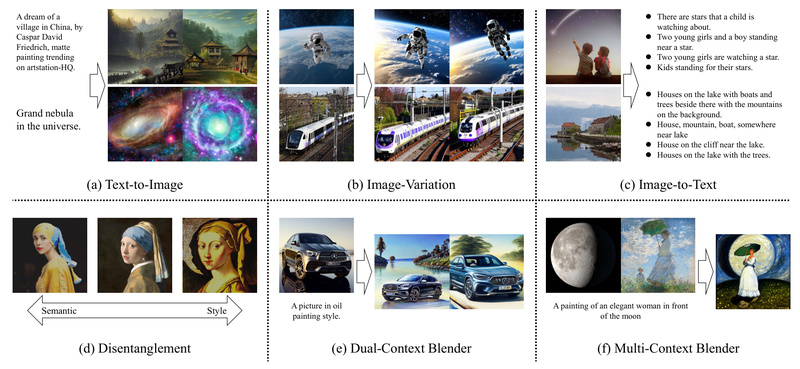

Versatile Diffusion: One Unified Model for Text-to-Image, Image-to-Text, and Creative Variations

In today’s fast-evolving AI landscape, most generative systems are built for a single task—whether that’s turning text into images, editing…

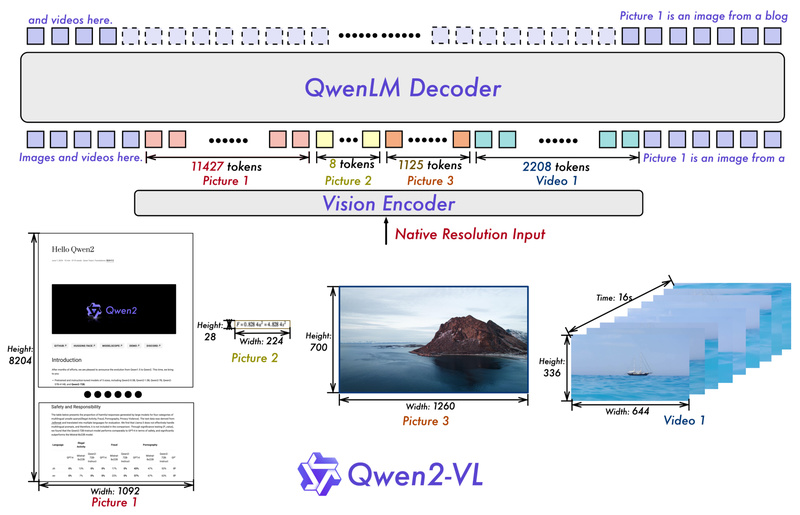

Qwen2-VL: Process Any-Resolution Images and Videos with Human-Like Visual Understanding

Vision-language models (VLMs) are increasingly essential for tasks that require joint understanding of images, videos, and text—ranging from document parsing…

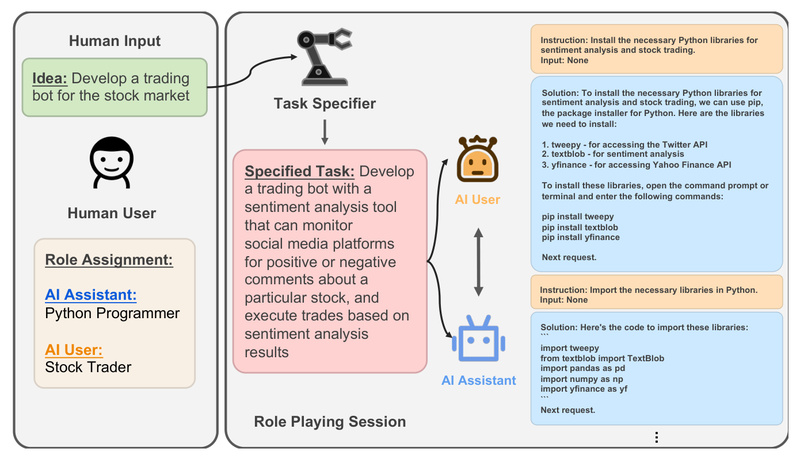

CAMEL: Build Scalable, Autonomous Multi-Agent AI Systems Without Constant Human Oversight

In today’s AI landscape, large language models (LLMs) excel at solving complex tasks—but only when carefully guided by humans. This…

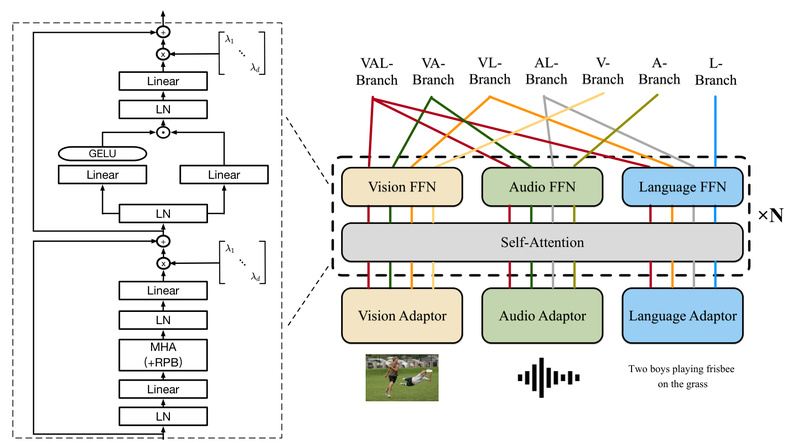

ONE-PEACE: A Single Model for Vision, Audio, and Language with Zero Pretraining Dependencies

In today’s AI landscape, most multimodal systems are built by stitching together specialized models—separate vision encoders, audio processors, and language…