Imagine you have a photo album filled with images of your dog—but you want to automatically isolate your pet in every picture without manually drawing masks or labeling each one. Traditional segmentation models either require extensive training data or constant user prompting. The Segment Anything Model (SAM) changed the game with its promptable architecture, but it still lacks out-of-the-box personalization for specific visual concepts like “your dog,” “your product,” or “that unique chair in your living room.”

Enter Personalize-SAM—a clever, training-free solution that adapts SAM to your custom object using just one image with a single reference mask. No retraining. No complex pipelines. Just instant personalization across new images or even video frames.

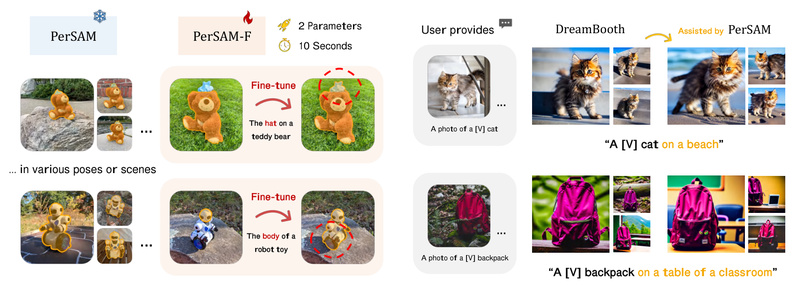

Introduced in the paper “Personalize Segment Anything Model with One Shot,” Personalize-SAM (or PerSAM) is a lightweight framework for one-shot customization. It suits product teams, researchers, and developers who need rapid, practical segmentation without the overhead of model fine-tuning.

Why Personalize-SAM Solves a Real-World Problem

The core challenge in applied computer vision isn’t just segmenting any object—it’s reliably segmenting your specific object across diverse contexts. SAM excels at general-purpose segmentation but treats every prompt independently. If you want consistent segmentation of the same real-world entity—say, a branded product in marketing assets or a pet across social media photos—you’d normally need to manually prompt each image or train a custom model.

Personalize-SAM eliminates both burdens. With only one annotated example (image + mask), it automatically propagates that concept to new scenes using three key mechanisms:

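- Target-guided attention: Builds a similarity map between your reference object's features and the new image, then uses it to steer the cross-attention in SAM's mask decoder toward the likely target region.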

- Target-semantic prompting: Adds an embedding of your reference object, pooled from the features inside its mask, to SAM's prompt tokens as a high-level semantic cue.

- Cascaded post-refinement: Feeds the initial mask, and then its bounding box, back into SAM's decoder for two extra passes that sharpen the boundary.

This enables truly personalized segmentation without a single line of training code.
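The first two mechanisms can be sketched in a few lines of NumPy. This is a simplified illustration, not PerSAM's implementation: it assumes you already have image-encoder feature maps of shape (H, W, C) for the reference and test images (in practice these come from SAM's encoder), and every function name is hypothetical. It average-pools the reference features under the mask into one target embedding, scores each test location by cosine similarity, and takes the most and least similar locations as a positive and a negative point prompt, mirroring the location prior described in the PerSAM paper. It then turns the similarity map into an additive bias on token-to-image attention logits; where exactly the official code injects this bias may differ. The last function shows target-semantic prompting as a plain addition of the target embedding to the prompt tokens.

```python
import numpy as np

def target_embedding(ref_feats, ref_mask):
    """Average-pool reference features (H, W, C) inside a boolean mask (H, W), then L2-normalize."""
    emb = ref_feats[ref_mask].mean(axis=0)  # (num_fg_pixels, C) -> (C,)
    return emb / np.linalg.norm(emb)

def similarity_map(test_feats, emb):
    """Cosine similarity between every test location (H, W, C) and the target embedding."""
    norms = np.linalg.norm(test_feats, axis=-1, keepdims=True)
    unit = test_feats / np.maximum(norms, 1e-8)
    return unit @ emb  # shape (H, W)

def location_prior(sim):
    """Most similar location -> positive point, least similar -> negative point, in (x, y) order."""
    pos_y, pos_x = np.unravel_index(np.argmax(sim), sim.shape)
    neg_y, neg_x = np.unravel_index(np.argmin(sim), sim.shape)
    coords = np.array([[pos_x, pos_y], [neg_x, neg_y]])
    labels = np.array([1, 0])  # SAM convention: 1 = foreground point, 0 = background point
    return coords, labels

def attention_bias(sim):
    """Standardize the similarity map, squash it with a sigmoid, and flatten to one value per image token."""
    z = (sim - sim.mean()) / (sim.std() + 1e-8)
    return (1.0 / (1.0 + np.exp(-z))).reshape(-1)

def biased_attention(logits, bias):
    """Add the per-token bias to token-to-image attention logits (T, H*W), then apply softmax."""
    biased = logits + bias[None, :]
    biased = biased - biased.max(axis=-1, keepdims=True)  # for numerical stability
    weights = np.exp(biased)
    return weights / weights.sum(axis=-1, keepdims=True)

def semantic_prompt(prompt_tokens, emb):
    """Target-semantic prompting: add the target embedding to every prompt token (T, C)."""
    return prompt_tokens + emb[None, :]

# Toy usage: plant the reference embedding at row 5, column 6 of a random test feature map.
rng = np.random.default_rng(0)
ref = rng.normal(size=(8, 8, 16))
mask = np.zeros((8, 8), dtype=bool)
mask[2:4, 2:4] = True
emb = target_embedding(ref, mask)
test = rng.normal(size=(8, 8, 16))
test[5, 6] = emb * 3.0
sim = similarity_map(test, emb)
coords, labels = location_prior(sim)  # coords[0] is the positive point (x=6, y=5)
```

Because the planted vector is a scaled copy of the embedding, its cosine similarity is exactly 1, so it becomes both the positive point and the position that receives the largest attention bias.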

Key Features That Set Personalize-SAM Apart

1. Training-Free Personalization (PerSAM)

PerSAM performs no gradient updates. Provide one reference image with a mask, and it generalizes to new inputs immediately. With no training stage, the cost is essentially that of running SAM inference, which keeps deployment fast and simple.
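Since nothing is trained, segmenting a new image comes down to a few decoder calls, including the two cascaded refinement passes. The sketch below shows that cascade, assuming a predictor whose predict method follows the keyword interface of segment_anything's SamPredictor.predict (point_coords, point_labels, box, mask_input, multimask_output) and returns (masks, scores, low-res logits). The official PerSAM code uses a modified predictor that also receives the attention bias and target embedding; those extra inputs are omitted here, and the helper names are hypothetical.

```python
import numpy as np

def mask_to_box(mask):
    """Tight XYXY bounding box of a boolean mask (H, W)."""
    ys, xs = np.nonzero(mask)
    return np.array([xs.min(), ys.min(), xs.max(), ys.max()])

def segment_with_cascade(predictor, point_coords, point_labels):
    """Initial prediction from point prompts, followed by two refinement passes (hypothetical helper)."""
    # Pass 0: point prompts only, single mask output.
    masks, scores, logits = predictor.predict(
        point_coords=point_coords, point_labels=point_labels,
        multimask_output=False)
    best = int(np.argmax(scores))

    # Refinement 1: feed the best low-res logits back in as a dense mask prompt (1, 256, 256).
    masks, scores, logits = predictor.predict(
        point_coords=point_coords, point_labels=point_labels,
        mask_input=logits[best][None, :, :], multimask_output=True)
    best = int(np.argmax(scores))

    # Refinement 2: also prompt with the bounding box of the refined mask (skipped if the mask is empty).
    box = mask_to_box(masks[best]) if masks[best].any() else None
    masks, scores, logits = predictor.predict(
        point_coords=point_coords, point_labels=point_labels, box=box,
        mask_input=logits[best][None, :, :], multimask_output=True)
    return masks[int(np.argmax(scores))]
```

With a real SamPredictor you would first call set_image on the test image and pass point prompts such as those produced by a similarity-based location prior.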

2. Ultra-Fast Fine-Tuning Variant (PerSAM-F)

For better accuracy, PerSAM-F adds a roughly 10-second fine-tuning step that optimizes only two learnable parameters: the weights used to blend SAM's multi-scale mask outputs. The rest of SAM stays frozen, preserving its general capabilities while improving performance on your target.
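SAM's decoder can return three candidate masks at different granularities (roughly whole object, part, and sub-part). The sketch below blends them with weights w1, w2 and 1 - w1 - w2, so only two numbers are free, and fits those two numbers to the reference mask by gradient descent. It is a simplified stand-in for PerSAM-F under stated assumptions: the three mask logit maps are taken as precomputed for the reference image, the paper's training loss is replaced by plain binary cross-entropy with a hand-derived gradient to stay dependency-free, and the function names and hyperparameters are hypothetical.

```python
import numpy as np

def fuse(m1, m2, m3, w):
    """Blend three mask-logit maps; only w[0] and w[1] are free, the third weight is 1 - w1 - w2."""
    return w[0] * m1 + w[1] * m2 + (1.0 - w[0] - w[1]) * m3

def bce(logits, target):
    """Mean binary cross-entropy between sigmoid(logits) and a 0/1 target."""
    p = 1.0 / (1.0 + np.exp(-logits))
    eps = 1e-7
    return -np.mean(target * np.log(p + eps) + (1 - target) * np.log(1 - p + eps))

def fit_fusion_weights(m1, m2, m3, target, steps=200, lr=0.5):
    """Gradient descent on (w1, w2) against the reference mask; SAM itself is never touched."""
    w = np.array([1 / 3, 1 / 3])  # start from an equal blend
    n = target.size
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-fuse(m1, m2, m3, w)))
        # d(mean BCE)/d(fused logits) = (p - target) / n; chain rule through the blend:
        # d(fused)/d(w1) = m1 - m3 and d(fused)/d(w2) = m2 - m3.
        g = (p - target) / n
        grad = np.array([np.sum(g * (m1 - m3)), np.sum(g * (m2 - m3))])
        w -= lr * grad
    return w

# Toy check: the first map agrees with the target, the second is inverted, the third is neutral.
target = np.array([1.0, 1.0, 0.0, 0.0])
m1 = np.array([4.0, 4.0, -4.0, -4.0])
m2 = -m1
m3 = np.zeros(4)
w = fit_fusion_weights(m1, m2, m3, target)
```

In this toy case the fit pushes w1 up and w2 down because the first map already matches the target. The sketch leaves the weights unconstrained, so they can move outside the [0, 1] range.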

3. Video Object Segmentation Support

Personalize-SAM isn’t limited to still images. The same one-shot reference can drive consistent object tracking and segmentation across video sequences, making it valuable for content editing, surveillance, or sports analytics.

4. Lightweight Compatibility with MobileSAM

Need speed? Personalize-SAM supports MobileSAM (by swapping the SAM backbone to vit_t). This dramatically reduces memory and latency while maintaining strong personalization—ideal for mobile or edge applications.

Ideal Use Cases for Teams and Builders

Personalize-SAM shines in scenarios where speed, simplicity, and specificity matter:

- Photo Album Organization: Automatically extract a specific person, pet, or item from thousands of personal photos.

- Media & Creative Workflows: Isolate branded products in user-generated content for ad personalization or AR overlays.

- Rapid Prototyping: Test object-centric CV ideas without collecting large labeled datasets.

- Generative AI Preprocessing: Clean user-provided images for tools like DreamBooth by removing background clutter—enhancing the target object’s visual fidelity during fine-tuning. (Note: The official DreamBooth integration is listed as “coming soon” but the segmentation capability is ready today.)

Getting Started in Minutes

Using Personalize-SAM is intentionally straightforward:

- Install dependencies: Clone the repo and set up a Python environment with PyTorch and the required packages.

- Download a pre-trained SAM checkpoint (e.g., sam_vit_h_4b8939.pth).

- Prepare your reference: One image of your target object with a clean mask (e.g., from an annotation tool or SAM itself).

- Run a single command:

  - For training-free mode: python persam.py --outdir my_dog

  - For 10-second tuned mode: python persam_f.py --outdir my_dog

- Get results: Segmentation masks and visualizations appear in outputs/my_dog.

For videos, use persam_video.py or persam_video_f.py with your reference frame.

No distributed training. No hyperparameter tuning. Just immediate, personalized output.

Practical Limitations to Keep in Mind

While powerful, Personalize-SAM has realistic boundaries:

- Reference mask quality matters: A noisy or incomplete mask in the input image will propagate errors.

- Ambiguous or heavily occluded targets (e.g., your dog behind a fence) can lower segmentation accuracy. PerSAM-F generally improves robustness, particularly when it is unclear whether the reference mask refers to a part or to the whole object.

- The DreamBooth integration for background-free Stable Diffusion fine-tuning is still pending release, though the core segmentation can already serve this purpose if you wire it up yourself.

- PerSAM-F’s 2-parameter fine-tuning does require a GPU for the brief optimization step, though inference can run on CPU.

These are not dealbreakers—but important context for technical decision-makers evaluating fit.
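Because errors in the reference mask propagate to every output, it can pay to sanity-check the mask before running PerSAM. The helper below is a hypothetical sketch, not part of the Personalize-SAM repository. It expects the image and mask already loaded as NumPy arrays, accepts masks stored as bool, 0/1, or 0/255, and uses arbitrary thresholds that you should tune for your data.

```python
import numpy as np

def check_reference_mask(image, mask, min_fraction=0.001, max_fraction=0.9):
    """Return a list of problems found in a reference mask; an empty list means none were found.

    Hypothetical helper with arbitrary default thresholds. For a multi-channel
    mask, only the first channel is checked.
    """
    problems = []
    if mask.ndim == 3:
        mask = mask[..., 0]
    if mask.shape != image.shape[:2]:
        problems.append(f"mask size {mask.shape} does not match image size {image.shape[:2]}")
        return problems

    # Anti-aliased or resized masks often contain intermediate values.
    values = set(np.unique(mask).tolist())
    if not values <= {0, 1, 255}:
        problems.append(f"mask is not binary; found {len(values)} distinct values")

    # Treat any nonzero pixel as foreground and check how much of the image it covers.
    fraction = float((mask > 0).mean())
    if fraction == 0.0:
        problems.append("mask is empty (no foreground pixels)")
    elif fraction < min_fraction:
        problems.append(f"foreground covers only {fraction:.2%} of the image")
    elif fraction > max_fraction:
        problems.append(f"foreground covers {fraction:.2%} of the image; is the mask inverted?")
    return problems
```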

Summary

Personalize-SAM delivers something rare in modern computer vision: instant, training-free customization of a foundation model using just one example. By combining smart prompting, attention steering, and minimal optional tuning, it solves the “one object, many images” problem with elegance and efficiency.

For teams building applications that require consistent, personalized segmentation—whether for consumer apps, media tools, or AI pipelines—Personalize-SAM offers a compelling blend of simplicity, speed, and performance. With open-source code, Colab notebooks, and video demos already available, it’s never been easier to test-drive personalized segmentation in your own projects.