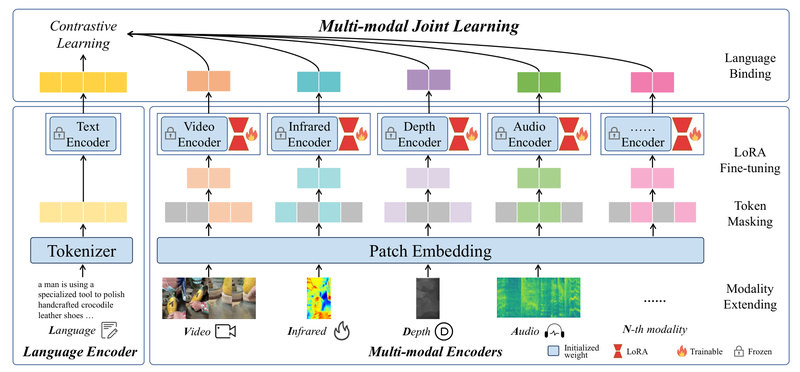

Imagine building an AI system that understands not just images and text—but also video, audio, infrared (thermal), and depth data—all…

Multimodal Representation Learning

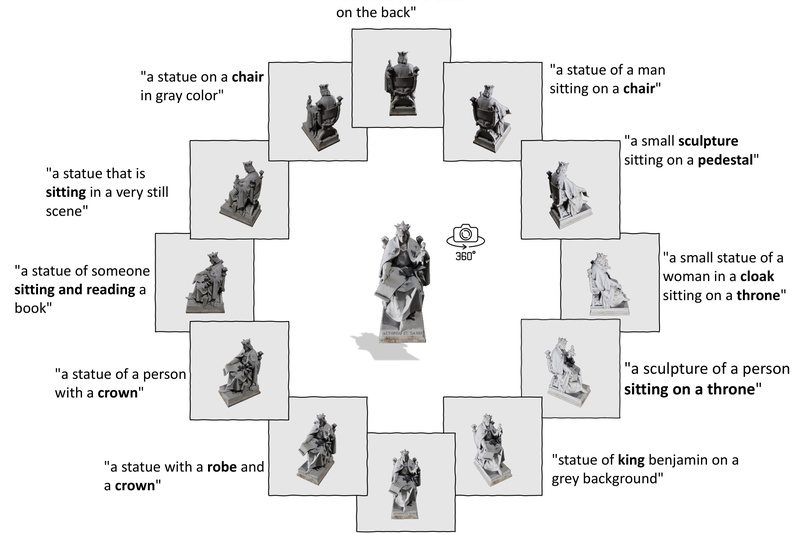

ULIP-2: Scalable Multimodal 3D Understanding Without Manual Annotations

Imagine building a system that can understand 3D objects as intuitively as humans do—recognizing a chair from its point cloud,…

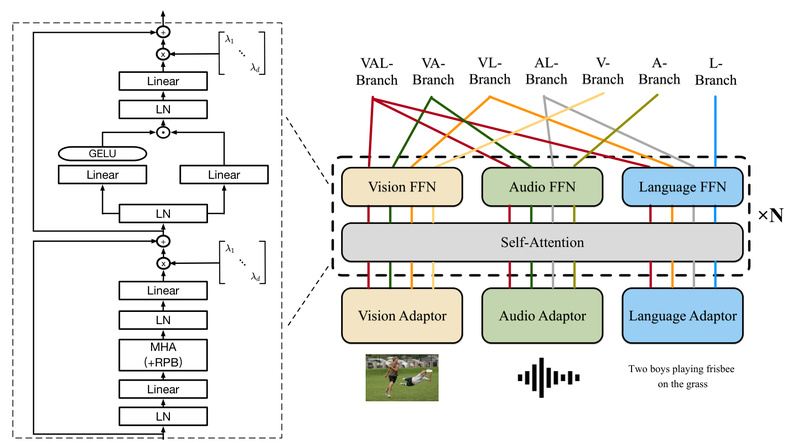

ONE-PEACE: A Single Model for Vision, Audio, and Language with Zero Pretraining Dependencies

In today’s AI landscape, most multimodal systems are built by stitching together specialized models—separate vision encoders, audio processors, and language…

FlowTok: Unified Text-to-Image and Image-to-Text Generation with Compact 1D Tokens

FlowTok reimagines cross-modal generation by collapsing the traditionally complex boundary between text and images into a streamlined, efficient process. Unlike…